Abstract

This paper introduces a new tool, the CREATE system, designed to help students develop their ability to manage collaborative discourse. It begins by defining collaboration, explaining why it is an essential skill, and outlining the many problems associated with it. The author proposes the CREATE system, and activities like it, as a potential solution to these problems. The paper then describes the system's features, shows examples of the system in use, presents findings from early evaluations of learning effectiveness and user satisfaction, and discusses the potential for scaling, the limitations of the system, and future work. It concludes by pointing out the need to develop innately human higher-order skills that computers cannot emulate, such as collaboration. Computer-supported collaborative learning (CSCL) researchers need to rethink what it means to learn in online contexts, place greater value on the development of higher-order collective thinking skills, and develop tools and practices that help instructors and students better manage learning processes for themselves and their groups.

KEY WORDS: Computer supported collaborative learning (CSCL), collective cognition, collaboration, soft skill development, shared regulation, socio-metacognition, higher education, online learning innovation

1. INTRODUCTION

Technology is making us increasingly interconnected, pushing us to think about and make sense of information together. As a result, the ability to search for, evaluate, and make sense of information with others in order to build new knowledge is becoming increasingly important. Yet people, regardless of age, largely lack these skills, and formal education does not place enough emphasis on helping students develop them (Barron, 2003; Borge and Carroll, 2014; Borge and White, 2016; Kozlowski and Ilgen, 2006; Kuhn, 2009). This lack of emphasis in education is a serious problem with negative consequences for our society. Thus, there is a need to develop new ways to prepare students for the information age: (a) to prepare them to think about and discuss information with others in ways that lead to higher-order collective thinking; (b) to teach them strategies to guard against isolated ways of thinking; and (c) to help them develop habits of mind that question the credibility of information regardless of who shares it, especially when the information aligns with their worldview.

To address this need, the author and her colleague, Todd Shimoda, designed and developed a system called CREATE and have been conducting research to test its utility. CREATE stands for Collective Regulation & Enhanced Analysis Thinking Environment. The aim of the system is to go beyond providing a place to collaborate and to support the development of necessary collaborative sense-making skills, i.e., collective information synthesis and knowledge negotiation.

The paper argues that students need to understand and manage collaborative knowledge-building processes and presents the CREATE system as a means to do so. The system was developed through an iterative, design-based approach. The paper describes the system architecture, provides real-world examples of the system in use, and discusses findings related to learning outcomes and student feedback. It then evaluates the system, including its strengths and weaknesses, and concludes by considering how the system and related activities meet the needs of the next generation of online students.

2. DEFINING COLLABORATION

The term collaboration has been widely used to refer to a variety of different things and has therefore led to many misconceptions as to what the term means. In this paper, collaboration refers to “the synchronous activity that occurs as individuals engage in collective thought processes to synthesize and negotiate collective information in order to create shared meaning, make joint decisions, and create new knowledge” (Borge and White, 2016). This definition is in alignment with those used by other scholars who study collaborative learning (Hakkarainen et al., 2013; Roschelle and Teasley, 1995; Stahl, 2006). It is important to distinguish collaborative learning from other social forms of learning (e.g., whole-class discussions or threaded discussion forums) because, although collaboration is possible in these contexts, it is not common in them.

2.1 What Is Known about Collaboration?

Collaboration provides many benefits to learners. It gives students the opportunity to engage in more sophisticated forms of higher-order thinking around subject matter, which can lead to deeper understanding and the creation of new knowledge (Borge and White, 2016; Chi and Wylie, 2014). As Chi and Wylie (2014) explain, collaboration pushes students to be more cognitively active by engaging them in collective sense-making. Collaboration can also lead to innovation, because groups are better able than individuals to generate solutions to complex problem-based tasks (Kerr and Tindale, 2004). However, most people do not understand what it means to collaborate. This is why true collaboration, in which people work to synthesize shared information and negotiate what the group knows, rarely happens, and why group work so often runs into problems (Barron, 2003; Borge and White, 2016; Borge and Carroll, 2014; Kerr and Tindale, 2004; Kozlowski and Ilgen, 2006; West, 2007).

In their paper on team effectiveness, Kozlowski and Ilgen (2006) show how a lack of collaborative skills often leads to negative societal outcomes, which implies a need to develop these skills for the public good. Drawing on a variety of real-world examples, Kozlowski and Ilgen point out a critical tension in our society: collaboration has become a critical factor for success in the modern world, yet our society is primarily focused on individualized learning practices and reward systems. They argue that collaborative sense-making skills can be learned with proper training that provides “clear learning objectives, assessment of skill levels, practice, and feedback” (Kozlowski and Ilgen, 2006).

2.2 An Innovative Approach to Supporting Collaborative Processes: The CREATE System

The idea of regulating interactions is not new to the field of CSCL, but many interventions in CSCL focus on regulating collaborative interactions for students rather than with students. One of the most common approaches used by CSCL scholars is to optimize collaborative processes during activity by structuring, prompting, or constraining collaborative activity (Dillenbourg and Hong, 2008).

Though this form of external regulation can help students collaborate better and learn more about a domain, there is a need to develop tools to help students learn how to regulate collaborative activity for themselves (Järvelä and Hadwin, 2013). The CREATE system uses domain content learning as a means for students to improve collaborative discourse processes. This is a new genre of CSCL technology: computer-supported collaborative training.

The root concept behind the CREATE system was to build a collaborative training system that helps to develop socio-metacognitive expertise: the expertise needed to understand, monitor, and regulate collaborative sense-making processes (Borge and White, 2016). The system was intended to help students develop enough socio-metacognitive expertise to allow them to monitor and regulate their own collective thinking practices and improve their team's collaborative processes.

The CREATE system is an interactive online environment that serves as a place for students to carry out synchronous, online collaborative discussions and evaluate them afterward. The system itself is made up of three modules: the chat module, the user module, and the administration module. The chat module supports online collaborative activities; the user module allows individuals to update user information and track group activity; and the administration module allows an instructor to decide what information students see in different parts of the chat module.

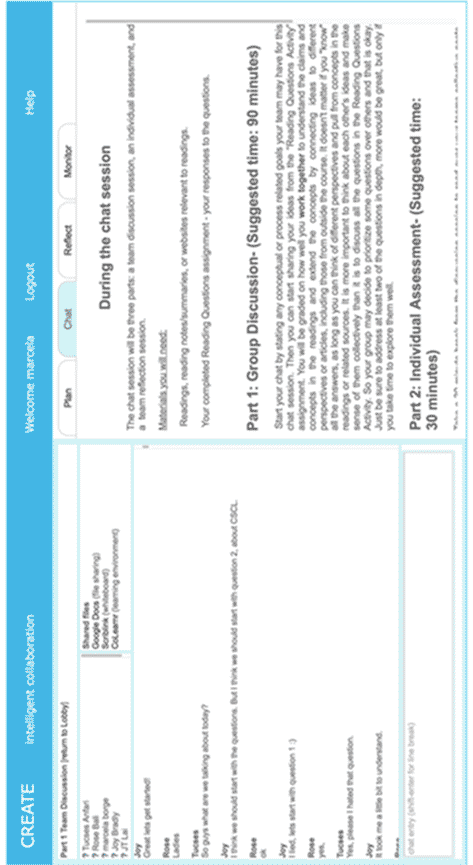

The collaborative and reflective activities occur in the chat module. The chat module consists of a discussion window on the left panel, activity window on the right, and four tabbed workspaces above the activity window: plan, chat, reflect, and monitor (see Figure 1). The discussion window remains open constantly so students can communicate throughout their activity while the tabbed workspaces are opened one at a time in the activity window.

FIG. 1: Screenshot of the chat module

The tabbed workspaces represent the regulatory process that groups will learn to carry out over time as they work to improve their collaborative discussions. The plan tab helps teams organize meetings. The chat tab displays the group's ongoing discussion as well as discussion instructions. Typical discussion instructions in CREATE include the general topic to be covered, a description of the different required parts and length of the discussion, and when and how to use the reflect and monitor tabs.

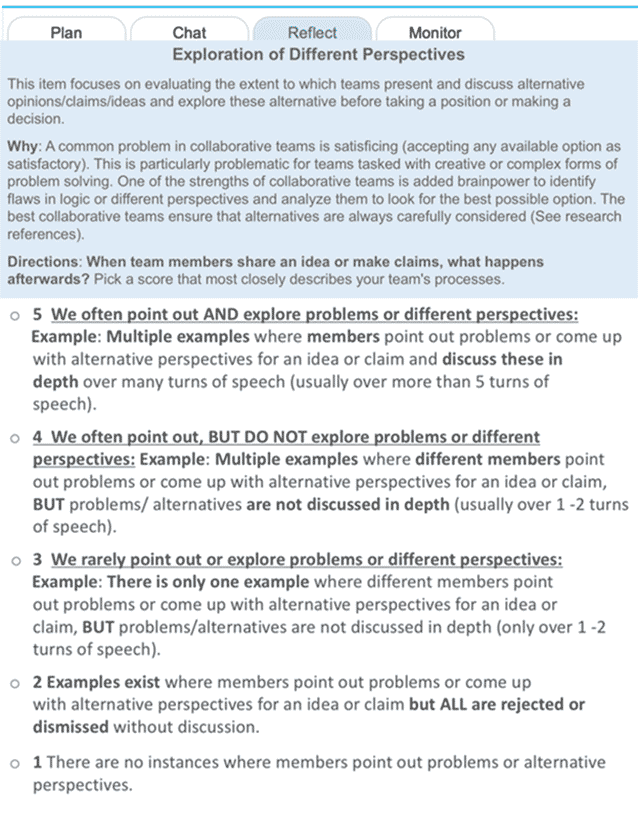

After students complete their discussion activity, they move on to the reflect tab. The main aim of the reflect tab is to translate collaborative learning theory into reflective tools that students can use to think about and evaluate their own processes. This type of support can help students overcome problems associated with gap analysis, a type of thinking process that is necessary for effective regulation (Nesbit, 2012). The purpose of gap analysis is to compare current activity to desired activity in order to identify the changes needed to achieve desired outcomes.

Gap analysis is difficult in the context of collaborative process learning because most students do not know enough about collaboration to (a) accurately evaluate their current processes or (b) know what desired processes look like. Moreover, in focus groups conducted while gathering requirements for the system, instructors discussed feeling unprepared to support collaborative activity, stating that they lacked sufficient expertise to know the difference between effective and ineffective collaboration. Thus, the reflect tab was designed as a means to provide the expertise needed to carry out gap analysis for collaborative improvement. The reflect tab translates collaborative learning theory into self-reflection items that students can use to understand what desired collaborative practices look like and how to use this information to evaluate their own practices.

When students open the reflect tab, they see an interactive list of desired communication process items (see top of Figure 2). When students click on an item, the system describes what it is and why it is important, and then instructs students to scroll through the discussion pane to examine the discussion and look for specified patterns. These patterns correspond to rubric scores from 1 to 5 (see bottom of Figure 2). Students are also asked to provide evidence from the chat transcripts to justify their score for each item. Once individuals finish entering what they perceive to be the team's scores and their rationale for these scores, they proceed to the final feature in the chat module, the monitor tab.
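Each reflect-tab submission pairs a 1–5 rubric score with evidence from the transcript, which maps naturally onto a small record type. The sketch below is a hypothetical illustration, not CREATE's actual implementation: the item identifiers are shortened from the names in Table 1, and treating cited evidence as mandatory is an assumption.

```python
from dataclasses import dataclass, field

# Hypothetical item IDs, shortened from the reflection items in Table 1.
REFLECTION_ITEMS = {
    "verbal_equity",
    "joint_understanding",
    "idea_building",
    "alternative_perspectives",
    "quality_of_claims",
    "constructive_discourse",
}


@dataclass
class ReflectionEntry:
    """One student's rating of the team on one reflection item (hypothetical schema)."""

    student_id: int
    item: str
    score: int  # rubric score, 1 (lowest) to 5 (highest)
    evidence_lines: list[int] = field(default_factory=list)  # transcript line numbers cited
    justification: str = ""

    def __post_init__(self) -> None:
        if self.item not in REFLECTION_ITEMS:
            raise ValueError(f"unknown reflection item: {self.item!r}")
        if not 1 <= self.score <= 5:
            raise ValueError("score must be between 1 and 5")
        # Assumption: a score without cited transcript evidence is rejected.
        if not self.evidence_lines:
            raise ValueError("cite at least one transcript line as evidence")
```

For example, `ReflectionEntry(109, "verbal_equity", 3, [1], "Contributions were less equal this time.")` is accepted, while a score of 6 or an empty evidence list raises `ValueError`.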

FIG. 2: Screenshot of the alternative perspectives item in the reflect tab

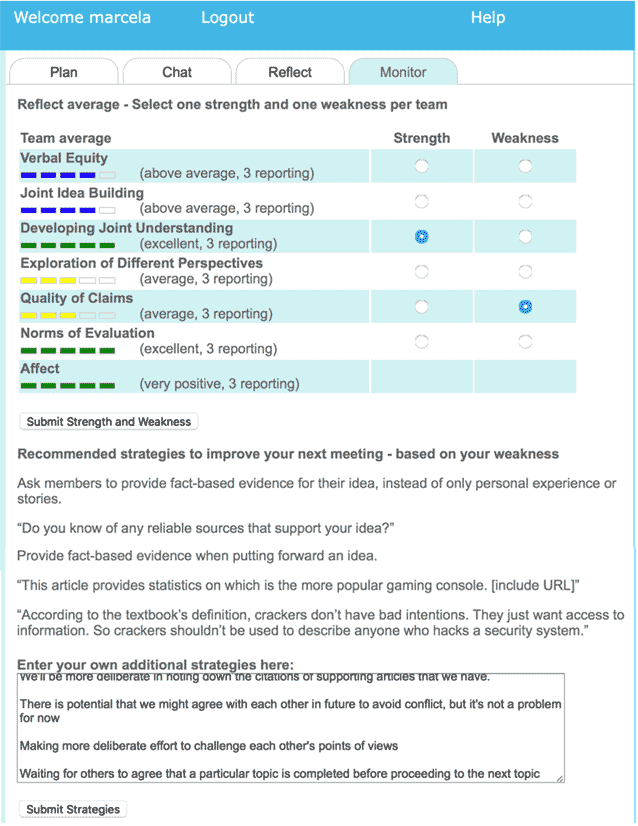

The monitor tab shows the team's average scores for each of the reflection items. The team can use this information to select the team's greatest strength and weakness with regard to their collective sense-making activity. On the basis of what they select as their weakness, the system provides suggestions for strategies they can use to improve the quality of their sense-making discourse for their next discussion session. Users can then decide which strategy or strategies they think would work best for their team from those suggested or create their own and enter it into the system (see Figure 3).
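The aggregation behind the monitor tab can be sketched in a few lines. This is an assumption-laden illustration: the text says only that the tab shows team averages and that the team itself selects its strength and weakness, so the hypothetical helpers below merely surface the highest- and lowest-averaging items as a starting point for that team discussion.

```python
from statistics import mean


def team_averages(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average every member's 1-5 rating for each reflection item."""
    return {item: float(mean(scores)) for item, scores in ratings.items()}


def candidate_strength_and_weakness(averages: dict[str, float]) -> tuple[str, str]:
    """Return the highest- and lowest-averaging items (the first item listed wins ties).

    The team, not the system, makes the final choice; this only proposes candidates.
    """
    strength = max(averages, key=averages.__getitem__)
    weakness = min(averages, key=averages.__getitem__)
    return strength, weakness
```

With ratings `{"verbal_equity": [4, 3, 4], "alternative_perspectives": [2, 2, 3], "quality_of_claims": [3, 3, 2]}`, the candidates are `verbal_equity` (strength) and `alternative_perspectives` (weakness).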

FIG. 3: Screenshot of the monitor tab

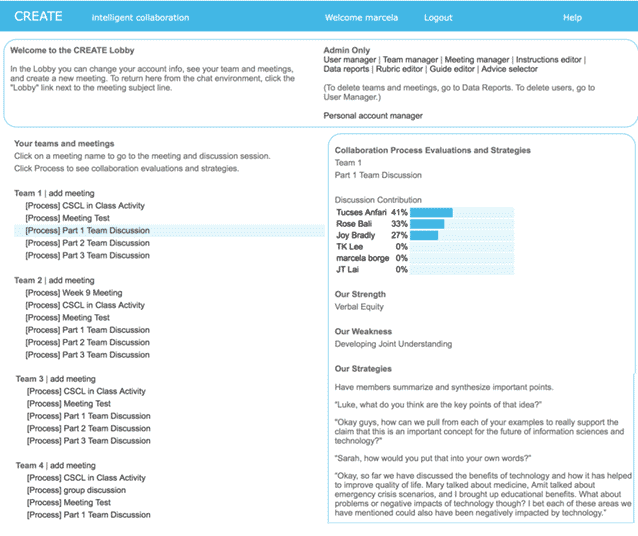

The user module is available in the CREATE Lobby, the landing page where users are taken after logging into the system. Besides normal user controls (e.g., modifying personal information, adding groups and discussions), there are awareness features for students and instructors. Students can see the teams they belong to and the discussion meetings their teams have created, whereas instructors can see all of the teams in their course and keep tabs on all of their discussions. Clicking the process link for a team's previous meeting pulls up awareness features: member contribution percentages, group process evaluations, and the team's selected strategies for improvement (see Figure 4).
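Member contribution percentages can be derived directly from a meeting transcript. The sketch below is hypothetical and assumes percentages are based on the number of turns of speech; the paper does not say whether CREATE counts turns, words, or characters.

```python
from collections import Counter


def contribution_percentages(speaker_ids: list[int]) -> dict[int, float]:
    """Percentage of turns of speech contributed by each member.

    `speaker_ids` lists the member ID for each turn, in transcript order.
    Counting turns (not words) is an assumption made for this sketch.
    """
    counts = Counter(speaker_ids)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {member: 100 * n / total for member, n in counts.items()}
```

Applied to the speaker IDs of lines 14–24 in the PageRank excerpt in Section 2.3, this gives about 54.5% for member 88 and 45.5% for member 89. Turn counting credits a short message such as “one sec, not done” as a full turn; a word-count variant would weight long posts more heavily.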

FIG. 4: Screenshot of awareness features in the User Module

The administration module is restricted to course administrators and designed to give them as much control as possible over their students' CREATE experience. This module allows for management of all aspects of the users, teams, content, and data. Not only can instructors create and manage team membership, but they can also revise or create entirely new discussion instructions, reflection items, reflection rubrics, and strategy selection advice. In this way, an instructor can decide to support students' ability to collectively monitor and regulate any higher-order thinking process they deem important. The system also includes default settings and information intended to provide additional collaborative expertise for students and instructors.

The administration module contains models of optimal collective thinking processes that are conveyed to users through the reflective features of the system. For example, by default the system includes two core capacities that define collaborative discourse quality: information synthesis and knowledge negotiation. Each capacity is broken down into three communication patterns, evaluated through the reflective items that guide students' analysis of group process (Table 1). For information synthesis, these items are verbal equity, idea building, and developing joint understanding; for knowledge negotiation, they are exploration of alternative perspectives, quality of claims, and social norms. Instructors and students can browse these items, read why they are important, and learn to identify them in real conversation.

TABLE 1: Pragmatic model of collaborative discourse competence

| Communication Aims | Definition | Positive Examples | Negative Examples |
|---|---|---|---|
| Information Synthesis | | | |
| Distribution of Verbal Contributions | The extent to which all members are contributing to the discussion process | Team verbal contributions are almost perfectly equitable | One member contributes most turns of speech and at least one member is barely contributing |
| Developing Joint Understanding | The extent to which teams ensure ideas are understood as intended by speakers by rewording, rephrasing, or asking for clarification | Team members take time to reword another member's idea to check for understanding or ask another member to elaborate on an idea, and also synthesize major decisions or multiple members' ideas | The team does not show any instances where a member tries to reword, summarize, or confirm another member's idea or decision, or a possible team action |
| Joint Idea Building | The extent to which the team elaborates on or adds to other contributions to ensure ideas are not ignored or accepted without discussion | Team members add to another's idea over a large number of turns AND do not show instances of ignoring others or adding unrelated ideas | Members either ignore others and pose different suggestions that do not connect to the original idea, or they simply accept the idea and move on |
| Knowledge Negotiation | | | |
| Exploring Alternative Perspectives | The extent to which teams present and discuss alternative opinions, claims, or ideas | Team members point out problems or come up with alternative perspectives for an idea or claim and discuss these in depth over many turns of speech | There are no instances where members point out problems or alternative perspectives |
| High Quality Claims | The extent to which teams provide sophisticated, fact-based rationale | Claims are supported by course readings or online content AND include sophisticated, logical rationale or weighing of differing options | When members make claims, they do not include any rationale, evidence, or weighing of options |
| Constructive Discourse | The extent to which teams adhere to social norms during evaluation that show that members and their ideas are respected and valued | Responses are professional and respectful, with at least one instance where a person acknowledges the reasonableness of an opinion or claim before pointing out flaws or counterarguments. There are no instances where members attack a member's intelligence or character, make disrespectful comments about an idea, or use inappropriate or offensive language | Members may repeatedly use extremely inappropriate or offensive language (e.g., blatant profanity, vulgarity, racism, sexism), or a member attacks another member's intelligence or character (e.g., “you don't know what you're talking about”) or makes disrespectful comments about a member's ideas (e.g., “that is stupid”) |
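The default model in Table 1 is essentially a configuration: two capacities, each with three reflection items. The sketch below encodes it with hypothetical snake_case IDs. The `capacity_profile` roll-up is purely illustrative; the paper does not say that CREATE reports capacity-level scores.

```python
# Hypothetical encoding of Table 1's default model: two core capacities,
# each evaluated through three reflection items (IDs shortened from the table).
DEFAULT_MODEL: dict[str, tuple[str, ...]] = {
    "information_synthesis": (
        "verbal_equity",        # Distribution of Verbal Contributions
        "joint_understanding",  # Developing Joint Understanding
        "idea_building",        # Joint Idea Building
    ),
    "knowledge_negotiation": (
        "alternative_perspectives",  # Exploring Alternative Perspectives
        "quality_of_claims",         # High Quality Claims
        "constructive_discourse",    # Constructive Discourse
    ),
}


def capacity_profile(item_averages: dict[str, float]) -> dict[str, float]:
    """Unweighted mean of the item averages that belong to each capacity.

    Illustrative only: an assumed roll-up, not a documented CREATE feature.
    """
    return {
        capacity: sum(item_averages[item] for item in items) / len(items)
        for capacity, items in DEFAULT_MODEL.items()
    }
```

Keeping the model as data rather than hard-coding it mirrors the administration module's design, where instructors can replace the default items with their own.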

The system default also includes a list of problems that interfere with each of these items, along with related strategies to prevent or correct those problems (for access to these and other guides and resources, see https://sites.psu.edu/mborge/helpful-resources/). For example, under knowledge negotiation, quality of claims, one listed problem is, “Members are suggesting an idea without backing it up or providing reasons.” To address this problem, the guide editor houses a variety of strategies and examples, such as the following:

Strategy: Ask members to provide reasons for their ideas. Push members to share their rationale.

Example 1: “What do you think is the best idea we have come up with and why?”

Example 2: “Why do you think that won't work?”

Strategy: Try to persuade each other when proposing ideas.

Example 1: “That's a good point, because different cultures have different ideas about what makes for quality of life.”

There is also an advice selector among the administrative features, with preset rules about which strategies and examples the system should suggest for each reflection item based on the average team score. Thus, the rubric, guide, and advice modules are interconnected: the rubric sets the reflection items and defines optimal performance; the guide editor provides a model of regulation items, strategies, and examples directly connected to the reflection items; and the advice selector defines rules for giving conditional advice in the system.
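The advice selector's conditional logic can be sketched as a list of rules keyed by reflection item and a score threshold. This is a hypothetical illustration: the rule format and the 3.0 threshold are assumptions, and only the strategy wording is taken from the guide example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdviceRule:
    """Hypothetical rule: suggest `strategies` when an item's team average is low."""

    item: str          # reflection item the rule applies to
    max_score: float   # fire when the team average is at or below this value
    strategies: tuple[str, ...]


# Illustrative rule set; the threshold is an assumption, the text is from the guide example.
RULES = [
    AdviceRule(
        "quality_of_claims",
        3.0,
        (
            "Ask members to provide reasons for their ideas. Push members to share their rationale.",
            "Try to persuade each other when proposing ideas.",
        ),
    ),
]


def select_advice(item: str, team_average: float, rules: list[AdviceRule]) -> list[str]:
    """Collect strategies from every rule for `item` whose threshold the average does not exceed."""
    advice: list[str] = []
    for rule in rules:
        if rule.item == item and team_average <= rule.max_score:
            advice.extend(rule.strategies)
    return advice
```

A team averaging 2.5 on quality of claims would receive both strategies; a team averaging 4.0 would receive none. Keeping rules as data lets instructors edit them without code changes, which matches the paper's description of instructor-editable advice.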

Administrators can also view and download data tables for team meetings that include all discussion entries (numbered turns of speech) per meeting by team members, the time each entry was submitted, and the individual reflection scores and justification statements for each team member. These reports are designed to export easily to an Excel spreadsheet for manual or automated coding, which makes it easy to add future software tools to the system.
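A spreadsheet-friendly export of numbered turns can be produced with Python's standard `csv` module. The column names below are hypothetical, and only the transcript table is shown; reflection scores could be exported the same way.

```python
import csv
import io

# Hypothetical column layout for one meeting's transcript export.
FIELDS = ["turn", "member_id", "submitted_at", "text"]


def meeting_to_csv(entries: list[dict]) -> str:
    """Serialize one meeting's entries as CSV text, numbering turns from 1.

    Each entry is a dict with `member_id`, `submitted_at`, and `text` keys.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for turn, entry in enumerate(entries, start=1):
        writer.writerow({"turn": turn, **entry})
    return buffer.getvalue()
```

The `csv` writer quotes fields that contain commas or line breaks, so chat text survives the round trip into Excel or into an automated coding script.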

2.3 CREATE System in Use

It is important that students prepare for CREATE discussions, much as people prepare for a meeting by completing in advance the work they will report on. To help students prepare, instructors should provide two to three hard questions that push students beyond surface reading toward higher-order thinking about course content, e.g., connecting it to real-world practice or synthesizing readings to draw implications. Students are required to submit individual responses to these questions before the discussion and then take part in a real-time conversation to share their perspectives, synthesize ideas, and negotiate what is known in the field.

In examining the discussions that occur in the CREATE system, it has become evident that the system offers many different types of learning opportunities for students. The synchronous nature of the activity pushes students to react to each other and to course concepts as they emerge. This type of dynamic activity often leads students to recognize gaps in their understanding or to state things in ways that reveal their current thinking, allowing the instructor to evaluate student understanding more fully. Many online students are also paired with students from different countries, giving them opportunities to examine concepts from cross-cultural perspectives. Many students also figure out that improving collaborative practices requires them to look up other articles to connect text concepts to information out in the world. As a result, students often share additional articles and personal insights, which they discuss in the context of the required readings. For example, undergraduate students in an Information Sciences and Technology course shared a variety of resources when discussing the impacts of computer algorithms and data analytics on society, as shown in the excerpt below:

| Line | ID | Contribution |
|---|---|---|
| 14 | 89 | The concept I pulled from the reading is the Google's PageRank algorithm. I have always wondered how the search engine knows the best answers. You can actually make your website searchable using keywords that most people will look for. |
| 15 | 89 | It's called Search Engine Optimization (SEO). |
| 16 | 89 | https://www.pagerank.net/ |
| 17 | 88 | Oh yeah! That is super interesting! My mom has a business that completely utilizes that, like the page ranking within Google. |
| 18 | 89 | Oh, that's nice! I did not know about it! I also liked the Amazon review mechanism, too. |
| 19 | 88 | I read a really good article about it (page ranking) a couple weeks ago… I'll see if I can't find it while we talk, but it kind of talks about the negative side to that, how Google utilizes the fact that you're not going to look at anything past the second or even third page, and manipulates it so that money from ads and click-throughs flow into them instead of providing you with the most accurate answer. |
| 20 | 88 | one sec, not done |
| 21 | 88 | and then also how certain “website mills” take complete advantage of that, like about.com and how.com, where it's just a slew of writers looking up what keywords are the most profitable (keywords also lead to ad clicks) and just create content based on that and not actual information. |
| 22 | 88 | I think it was written by the guy who started DuckDuckGo. |
| 23 | 89 | That is absolutely correct! I do not even click on the second page lol. |
| 24 | 88 | Lol, I know, you never think about it, until you do, and then you wonder what was on page 10 and would it have made your paper better… |

Over the course of their five discussions, this team shared 15 additional resources that were not required reading and made connections between course content and the real world. These types of discussions can help students find course content more meaningful and identify with the ideas and conflicts that ensue. However, a downside of this type of information sharing is that the quality of the examples and sources can vary. This particular team used many third-party references and newspaper articles and did not challenge each other to find better sources, because the grading assessed only whether sources were provided, not the quality of those sources.

When it comes to improving habits of mind, the CREATE system can only do so much, but it serves as a shared experience that instructors can use to identify problematic patterns, bring them to the students' attention, and push students to be more critical of sources. With such support over time, students can develop better habits of mind.

Of course, students also spend time evaluating the quality of their own conversations in the CREATE system and working to improve them over time. For example, the following team was originally scored as deficient in evaluating different perspectives and providing high-quality claims. For their next discussion they each came prepared with different articles but, in doing so, lost sight of the conversation. They recognized this problem during their reflection (line numbers are provided for ease of reference):

| Line | ID | Contribution |
|---|---|---|
| 1 | 109 | I'm not certain about you guys, but I think our verbal equity might have dropped a bit with this discussion. |
| 2 | 108 | Yeah...it's always hard for me, because I believe we all bring in a lot of thoughts, and then I lost my connection in between the discussion. Maybe I can get a better connection...LOL |
| 3 | 114 | I can understand that. Personally, I think it's sometimes hard to keep up with others' research and also put fourth our own. |
| 4 | 109 | but I definitely feel we peaked on different perspectives. |
| 5 | 108 | I agree. I believe that we are improving on opening difference of opinions. I feel we always can improve our ability to research and provide facts to back our arguments. I believe we will never hit the ceiling on that. |
| 6 | 109 | Yeah, I don't think CREATE is really well suited for this purpose. Maybe some sort of asynchronous chat would be better… |
| 7 | 114 | Haha, well maybe we should try to slow down a little and make sure everybody understands what we are talking about. I feel since we all had different perspectives this time, we all had our own research that we were trying to show. |
| 8 | 108 | That's a good plan. I like that. |

Student 109 points out that team members may not have contributed to the conversation as equally as they did last time (line 1). The students discuss this new pattern of communication, which emerged from their desire to improve the patterns they identified in their last discussion, namely, providing alternative perspectives and quality of claims (lines 2–5). As students discuss their new strengths and weaknesses (lines 4–5), student 109 argues that synchronous chats like CREATE may not be well suited for in-depth conversation (line 6). Student 114 disagrees, arguing that the problem was that they were so focused on sharing information that they did not stop to make sure they understood what everyone was saying (line 7). This is an important move because, rather than blaming their problems on an external factor, i.e., the synchronous nature of the system, student 114 pushes the team to focus on their actual communication processes in order to modify and improve them. Thanks to student 114, the team identifies the real problem and comes up with new strategies to improve the quality of their processes.

2.4 Evaluating Learning Effectiveness and Student Satisfaction

Studies thus far show that teams can significantly improve the quality of their discussions when using the activities in the CREATE system: Over time, students become better at extending each other's ideas, checking for understanding, monitoring talk time, providing alternative perspectives, providing high-quality claims, and following better social norms when critiquing each other (Borge et al., 2015). Teams that focus closely on the existing patterns of communication and make efforts to modify these over time, like the group from our previous example, tend to improve more than those who do not.

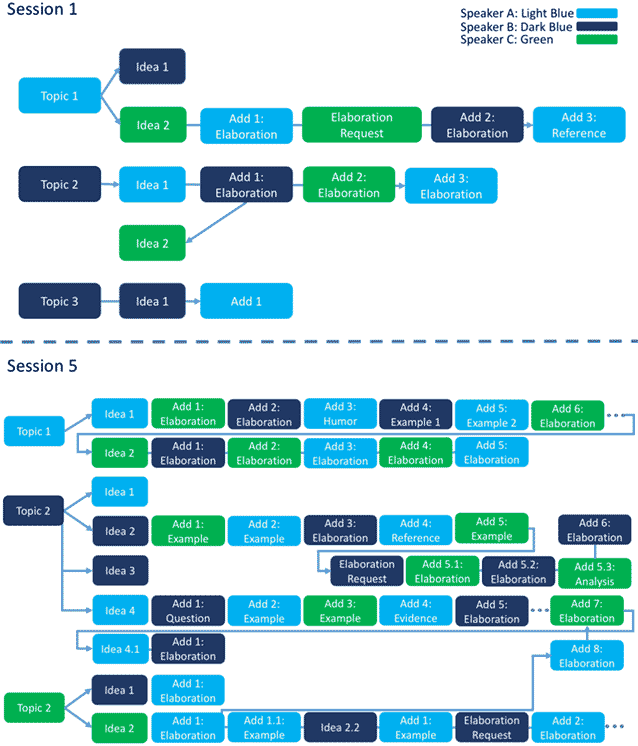

As teams work to improve their patterns of communication, the form of the entire discussion can change over time. For example, Figure 5 compares a team's conversation moves in Session 1 with those in its final session, Session 5. Although the team discusses three topics and spends the same amount of time on the activity in both sessions, at the beginning of the course it spends far less time expanding on each topic and makes less sophisticated discussion moves than it does at the end of the course.

FIG. 5: Comparison of patterns of communication from Session 1 to Session 5

Over the last two iterations of the study, the author's team asked students to argue for keeping or eliminating the CREATE sessions based on their experience, and to explain their rationale. Students were encouraged to be honest so the design team could gather the information needed to better meet their needs. Fifty of the 80 students responded to the survey. Sixty-eight percent argued for keeping the activities and 16% argued for eliminating them; the remaining 16% were neutral. Most of those who argued for keeping the activities recognized the costs these activities posed but felt the added benefits were worth the extra effort:

“The discussions in CREATE made us push, made us uncomfortable, made us grow in ways that we typically don't engage, because it is awkward and other courses allow us to hide from our tendencies to not want to engage in structured dialogue by way of giving us tasks that do not involve others as means of testing our comprehension of materials.”

Whereas those who argued for keeping the activities wanted richer, more progressive learning experiences, students who argued against keeping them expressed a desire for easier, more traditional learning experiences: “I believe a quiz would be easier on individual's schedules and make them absorb more materials from the course.” These students may see the course as a task to be completed to advance their careers. As such, they may see the CREATE activities as added effort with no meaningful payoff for their immediate goals.

A small number of students argued against the activities but did seem to value the learning opportunities they presented. These students felt that the reading material for the course was too basic to promote good conversation and that the pre-discussion questions were not difficult enough. On average, these students had more knowledge and experience within the domain than the majority of students enrolled in introductory courses. As such, they perceived fewer benefits from the discussions.

This feedback is important because it suggests that, overall, the system is meeting the needs of the majority of students, especially those looking for high-quality learning experiences. However, it also highlights the importance of choosing adequately difficult reading material, placing students in teams with compatible background expertise, and crafting pre-discussion questions that push for deeper analysis of content.

2.5 Potential for Scaling

In partnership with the Teaching and Learning with Technology unit at Penn State University, the author is currently expanding the CREATE prototype into a multi-user, university-wide system with improved usability of the administrative features. Faculty across the university are taking part in early pilots of the system to document instructor needs and inform the design of professional development modules that support instructors as they use the CREATE system for the first time. In this way, instructors will have the support they need to embed CREATE activities into their courses and use the system to meet their own instructional goals. As part of this process, a variety of resources have been developed to help instructors better support collaborative activities (see https://sites.psu.edu/mborge/helpful-resources).

Although the system provides support to help students monitor and regulate ongoing collaborative discourse, the instructor still plays a critical role in the learning process. Instructor feedback is an important factor in helping teams learn how to improve over time, because students' self-assessments can be quite inaccurate. Consistent with research findings on competence awareness (Kruger and Dunning, 1999), students tend to believe they are more competent than they actually are. However, when an instructor gives them a more realistic assessment, students can work to make sense of the discrepancy in scores and improve deficient processes. This leads to one of the biggest obstacles to scaling: instructor effort.
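Comparing a team's self-assessment with the instructor's scores is a simple per-item difference. The sketch below is hypothetical: the paper does not describe a discrepancy report in CREATE, and the 1-point threshold for flagging an item is an assumption.

```python
def self_assessment_gaps(self_scores: dict[str, float],
                         instructor_scores: dict[str, float]) -> dict[str, float]:
    """Team self-rating minus instructor rating for each item both parties scored.

    Positive values mean the team rated itself higher than the instructor did.
    """
    return {item: self_scores[item] - instructor_scores[item]
            for item in instructor_scores if item in self_scores}


def items_to_discuss(gaps: dict[str, float], threshold: float = 1.0) -> list[str]:
    """Items the team overrated by at least `threshold` points, largest gap first."""
    flagged = [item for item, gap in gaps.items() if gap >= threshold]
    return sorted(flagged, key=lambda item: gaps[item], reverse=True)
```

A team that rates its quality of claims at 4.0 when the instructor gives 2.0 would see that item flagged first, which gives the team a concrete discrepancy to make sense of before its next session.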

For students to improve, instructors must invest effort in assessing discourse quality and providing feedback. Careful assessment of all six items takes anywhere from 30 min to 1 h per team. For a class of about 40 students organized into 13 teams, it would therefore take about 6.5–13 h to assess group processes. This is roughly equivalent to the time needed to evaluate individual written papers: at 10–20 min per student, 40 papers take 6.7–13.3 h.
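The workload comparison above is simple arithmetic; a short Python sketch makes the per-unit assumptions explicit so they can be adjusted for other class sizes. The function name is illustrative, and the inputs are the figures stated in the text.

```python
def grading_hours(units: int, min_minutes: float, max_minutes: float) -> tuple[float, float]:
    """Return the (low, high) total hours needed to assess `units` items,
    given the minimum and maximum minutes spent per item."""
    return units * min_minutes / 60, units * max_minutes / 60


# Figures from the text: 13 teams at 30-60 min each vs. 40 papers at 10-20 min each.
team_low, team_high = grading_hours(13, 30, 60)
paper_low, paper_high = grading_hours(40, 10, 20)
print(f"Team discourse assessment: {team_low:.1f}-{team_high:.1f} h")    # 6.5-13.0 h
print(f"Individual papers:         {paper_low:.1f}-{paper_high:.1f} h")  # 6.7-13.3 h
```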

Though providing feedback is important, there is flexibility with regard to how often it is provided. Overall, the quality of feedback matters (Rosé and Borge, in press), but feedback does not need to be provided after every discussion session for students to improve. Students can still improve over multiple sessions as long as they receive feedback after the first session and again before their last session.
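The minimal feedback schedule described above can be sketched in Python, together with its effect on instructor workload. The number of sessions (five) is a hypothetical value, the per-round cost reuses the 6.5–13 h estimate for 13 teams, and the comparison assumes that feedback would otherwise be given after every session.

```python
# Per feedback round: 13 teams at 30-60 min each (the estimate given in the text).
PER_ROUND_HOURS = (6.5, 13.0)


def feedback_sessions(num_sessions: int) -> list[int]:
    """Sessions after which feedback is given under the minimal schedule:
    after session 1 and again before the final session (i.e., after session n - 1).
    With only two sessions, both conditions are met by a single round after session 1."""
    if num_sessions < 2:
        raise ValueError("the schedule assumes at least two sessions")
    return sorted({1, num_sessions - 1})


def total_hours(rounds: int, per_round=PER_ROUND_HOURS) -> tuple[float, float]:
    """(low, high) instructor hours for a given number of feedback rounds."""
    low, high = per_round
    return rounds * low, rounds * high


n = 5  # hypothetical number of discussion sessions in a course
schedule = feedback_sessions(n)
print(schedule)                      # [1, 4]
print(total_hours(len(schedule)))    # (13.0, 26.0)
print(total_hours(n))                # (32.5, 65.0) if feedback followed every session
```

Under these assumptions, the minimal schedule cuts the assessment load to two rounds regardless of how many sessions a course includes.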

The author's group has also examined the possibility of automated assessment in collaboration with a machine learning expert (Rosé and Borge, in press). The current method of discourse evaluation proved too difficult for a computer to compute reliably without a human in the loop. The computer was extremely reliable at coding individual posts line by line, but aggregating these micro-codes to assess the larger patterns of communication could be done reliably only for specific populations, and only after calibration with a human coder. However, it might be possible to automate some aspects of the assessment to reduce the time instructors spend and to increase their awareness of teams that need extra coaching and support.
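The following Python sketch illustrates the kind of partial automation suggested above: post-level micro-codes (however they are produced) are aggregated into team-level indicators, and teams falling below a threshold are surfaced for the instructor to review, keeping a human in the loop. This is not the method of Rosé and Borge (in press); the code labels, the two indicators, and the thresholds are all hypothetical choices made for illustration.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    team: str
    author: str
    code: str  # hypothetical micro-code assigned to one chat post


# Hypothetical micro-codes treated as evidence of collective sense-making.
SENSE_MAKING = {"builds_on", "questions", "evaluates"}


def team_profile(posts: list[Post]) -> dict[str, float]:
    """Aggregate post-level codes into two hypothetical team-level indicators."""
    codes = Counter(p.code for p in posts)
    total = sum(codes.values())
    sense_share = sum(codes[c] for c in SENSE_MAKING) / total
    per_author = Counter(p.author for p in posts)
    # Participation equity: posts by the least active member relative to an equal split.
    equity = min(per_author.values()) / (total / len(per_author))
    return {"sense_making_share": sense_share, "equity": equity}


def flag_teams(posts: list[Post], min_sense=0.3, min_equity=0.5) -> dict[str, dict]:
    """Return profiles of teams below either threshold, for the instructor to review."""
    by_team = defaultdict(list)
    for p in posts:
        by_team[p.team].append(p)
    flagged = {}
    for team, team_posts in by_team.items():
        profile = team_profile(team_posts)
        if profile["sense_making_share"] < min_sense or profile["equity"] < min_equity:
            flagged[team] = profile
    return flagged


posts = [
    Post("A", "ana", "builds_on"), Post("A", "ben", "questions"),
    Post("A", "cy", "evaluates"), Post("A", "ana", "summarizes"),
    Post("B", "dee", "summarizes"), Post("B", "dee", "off_task"),
    Post("B", "dee", "summarizes"), Post("B", "eli", "summarizes"),
]
print(sorted(flag_teams(posts)))  # ['B']
```

Because only members who post are counted, a silent member would not lower the equity score; a real tool would need to work from the team roster.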

3. DISCUSSION

The CREATE system was designed to address many of the problems associated with collaborative discourse and collective regulation, with the aim of developing technological support that helps students regulate their own conversations and improve the quality of collective sense-making. Early evaluation of the system is promising, but more work remains to be done. Initial findings show that the system succeeds at pushing students to pay more attention to their communication processes and to figure out ways to improve quality over time.

It was clearly helpful for students to treat their conversations as objects of thought and unpack them, trying to understand how specific tendencies negatively affected their group's collective thinking processes. These discussions often led students to propose strategies that included changing the way they prepared for discussion, as they came to realize the importance of taking notes and seeking out information about the readings on the Internet. Making these realizations for themselves may do more to change their learning practices than being told by every instructor, in every class, to take notes and seek out information, because these students now have the experience to understand how and why it is important.

CREATE and activities like it can help students shift from passive recipients of knowledge to agents of change: changing the way they understand and apply core concepts for themselves and others. However, for systems like CREATE to succeed it is important to reconsider what it means to learn in online contexts, begin to place greater value on developing higher-order collective thinking skills, and work to develop tools and practices that help instructors and students better manage learning processes for themselves and their groups.

Work on the CREATE system is still in its early stages. Expanding the system will require examining how different instructors use CREATE in order to design the system to meet the needs of a range of users. The models of collaborative competence that are embedded in the system will also need iterative testing and improvement. Nonetheless, this innovation and technologies like it could help develop habits of mind that will be in demand in the decades to come and help educators move beyond teaching facts toward teaching lifelong skills.

ACKNOWLEDGMENTS

I thank my design and development partner, Todd Shimoda, for his many contributions to this project. I also thank Yan Shiou Ong for leading the evaluation efforts to help inform the utility of the system. Finally, I am indebted to all of the students that participated in the research over the years. Thank you for allowing my group to examine your interactions and for giving constructive, thoughtful feedback on the system and activities. This project was partially funded by the National Science Foundation (Grant No. IIS-1319445) and the Center for Online Innovation in Learning at Penn State.

Instructors who are interested in providing activities like those housed in the CREATE system can access all of the guides and reflective materials on the author's website: https://sites.psu.edu/mborge/helpful-resources/. Though slightly more difficult to manage, it is possible to carry out similar activities in an online chat space by providing discussion activities and paper-based reflective tools (Borge et al., 2015).

REFERENCES

Barron, B. (2003), When smart groups fail, J. Learning Sci., vol. 12, no. 3, pp. 307–359.

Borge, M. and Carroll, J.M. (2014), Verbal equity, cognitive specialization, and performance, Proc. of 18th Int. Conf. on Supporting Group Work, New York: ACM, pp. 215–225.

Borge, M., Ong, Y.S., and Rosé, C.P. (2015), Activity design models to support the development of high quality collaborative processes in online settings, in Computer-Supported Collaborative Learning Conf., 2015 Conf. Proc., Gothenburg: International Society of the Learning Sciences, Inc., vol. 1, pp. 427–434.

Borge, M., Ganoe, C., Shih, S., and Carroll, J. (2012), Patterns of team processes and breakdowns in information analysis tasks, in Proc. of ACM 2012 Conf. on Computer Supported Cooperative Work, New York: ACM, pp. 1105–1114. DOI: 10.1145/2145204.2145369

Borge, M. and White, B. (2016), Toward the development of socio-metacognitive expertise: An approach to developing collaborative competence, Cognit. Instr., vol. 34, no. 4, pp. 1–38. DOI: 10.1080/07370008.2016.1215722

Chi, M.T. and Wylie, R. (2014), The ICAP framework: Linking cognitive engagement to active learning outcomes, Educ. Psychologist, vol. 49, no. 4, pp. 219–243.

Dillenbourg, P. and Hong, F. (2008), The mechanics of CSCL macro scripts, Int. J. Comput.-Support. Collab. Learning, vol. 3, no. 1, pp. 5–23.

Hakkarainen, K., Paavola, S., Kangas, K., and Seitamaa-Hakkarainen, P. (2013), Sociocultural perspectives on collaborative learning: Toward collaborative knowledge creation, in C. Hmelo-Silver, C. Chinn, C. Chan, and A. O'Donnell, Eds., The International Handbook of Collaborative Learning, New York: Routledge, pp. 57–73.

Järvelä, S. and Hadwin, A.F. (2013), New frontiers: Regulating learning in CSCL, Educ. Psychol., vol. 48, no. 1, pp. 25–39.

Kerr, N.L. and Tindale, R.S. (2004), Group performance and decision making, Annu. Rev. Psychol., vol. 55, pp. 623–655.

Kozlowski, S.W. and Ilgen, D.R. (2006), Enhancing the effectiveness of work groups and teams, Psychol. Sci. Public Interest, vol. 7, no. 3, pp. 77–124.

Kruger, J. and Dunning, D. (1999), Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments, J. Personality Social Psychol., vol. 77, no. 6, pp. 1121–1134.

Kuhn, D. (2009), Do students need to be taught how to reason? Educ. Res. Rev., vol. 4, no. 1, pp. 1–6.

Nesbit, P.L. (2012), The role of self-reflection, emotional management of feedback, and self-regulation processes in self-directed leadership development, Hum. Resource Dev. Rev., vol. 11, no. 2, pp. 203–226.

Roschelle, J. and Teasley, S.D. (1995), The construction of shared knowledge in collaborative problem solving, Comput. Support. Collab. Learning, Berlin: Springer, pp. 69–97.

Rosé, C.P. and Borge, M. (in press), Measuring engagement in social processes that support group cognition, in E. Salas and S. Fiore, Eds., From Neurons to Networks, Oxford University Press.

Stahl, G. (2006), Group cognition: Computer support for building collaborative knowledge, Cambridge, MA: MIT Press. Retrieved from http://gerrystahl.net/elibrary/gc/.

West, G.P. (2007), Collective cognition: When entrepreneurial teams, not individuals, make decisions, Entrepreneurship Theory Practice, vol. 31, no. 1, pp. 77–102.
