THE ROLE OF GENERATIVE AI-POWERED PERSONAS IN DEVELOPING GRADUATE INTERVIEWING SKILLS

University of Calgary, 2500 University Drive, Calgary, Alberta, T2N 1N4, Canada

*Address all correspondence to: Soroush Sabbaghan, University of Calgary, 2500 University Drive, Calgary, AB, T2N 1N4, Canada, E-mail: ssabbagh@ucalgary.ca

This article presents an in-depth examination of the artificial intelligence (AI)-powered persona-generating program PEARL (Persona Emulating Adaptive Research and Learning Bot), which uses the GPT-4 application programming interface (API), for developing graduate students' research-interview skills. PEARL offers a novel solution to the challenges faced in qualitative research, such as ethical concerns, participant accessibility, and data diversity, by simulating realistic personas for interview training. This study, framed by experiential learning theory (ELT), explores graduate students' experiences with PEARL in a graduate course, focusing on how it enhances the four facets of ELT: concrete experience, reflective observation, abstract conceptualization, and active experimentation. The findings reveal that while students perceive PEARL as a beneficial tool for experiential learning and skill development, it also has limitations in replicating the complexity of human interactions. The study contributes valuable insights into the integration of generative AI in enhancing graduate research competencies and underscores the enduring need for human involvement in the research process. It highlights the potential of generative AI tools like PEARL to bridge the gap between theoretical knowledge and practical skills in graduate education, while also drawing attention to areas for future refinement and ethical considerations in generative AI-enabled pedagogy.

KEY WORDS: generative AI-powered program, experiential learning theory, graduate research skills

1. INTRODUCTION

Emerging as transformative innovations in the academic landscape, generative artificial intelligence (GenAI) technologies have garnered significant attention for their capacity to disrupt and reshape teaching and learning (Bahroun et al., 2023). Within the educational sector, GenAI's scope is broad and diverse, spanning adaptive learning systems, intelligent tutoring mechanisms, and advanced natural language processing tools such as ChatGPT (Rudolph et al., 2023). Specifically, ChatGPT has shown notable promise in higher education contexts. It serves as a versatile educational aid capable of responding to students' queries, providing constructive feedback on assignments, and fostering collaborative learning environments (Atlas, 2023). This tool holds the potential to radically alter how students and educators interact with educational material, thereby increasing accessibility and enriching engagement in the learning process (Malik et al., 2023). These pioneering technologies have been strategically implemented across various pedagogical environments, demonstrating proven effectiveness in enriching educational experiences, fostering student engagement, and enhancing learning outcomes (Ouyang et al., 2022).

In addition to its standalone capabilities, ChatGPT can be employed in a more customizable format through its application programming interface (API). The GPT-4 API allows for targeted programming to suit specific educational needs, extending its utility beyond general question-answering and feedback mechanisms. Educational developers can design custom-tailored programs that facilitate specialized learning experiences, such as GenAI-powered persona-generating programs. These advanced systems serve to enrich the educational journey by facilitating interactive and experiential learning scenarios (Rudolph et al., 2023). Their unique capacity to incorporate personal experiences and contexts into the learning process renders the educational material more pertinent and relatable for students. As a result, learners are more likely to attain a deeper understanding and enhanced retention of the knowledge imparted (Atlas, 2023).

Building on the potential of GenAI-powered technologies in education, it is important to acknowledge that despite significant advancements, there remain untapped areas where GenAI could be exceptionally beneficial. A case in point is the development of research skills among graduate students. These emerging scholars often encounter a myriad of challenges in gathering data for their action-oriented projects, ranging from ethical considerations involving human participants to logistical issues of participant accessibility. Such obstacles can compromise both the diversity of responses and the overall efficacy of data collection in qualitative research. Additionally, time constraints often inhibit the opportunity for graduate students to adequately practice and develop research skills within the context of their graduate courses. Therefore, a GenAI-powered solution that addresses these challenges, while simultaneously augmenting students' abilities to confidently collect data, could be valuable for teaching research skills.

PEARL (Persona Emulating Adaptive Research and Learning Bot), a GenAI-powered persona-generating program built on the GPT-4 API, was developed by the first author and tested with a graduate class by the second author as a timely response to these challenges in graduate research. By facilitating student interactions with artificial intelligence (AI)-generated personas to practice data collection, the program not only sidesteps the ethical approvals required when involving human participants but also addresses issues of accessibility to pilot participants. This provides a controlled, ethical platform for practicing interview techniques, a common method used by graduate students for data collection in qualitative studies (Bloomberg & Volpe, 2019; Merriam & Tisdell, 2016). Additionally, the program's ability to offer an extensive range of customizable personas equips students with the opportunity to engage with diverse perspectives, simulating real-world research contexts. The combination of these benefits (ethical assurance, accessibility, and representational diversity), alongside the time and cost efficiencies achieved, has the potential to bolster students' research competencies.

Given the promising attributes of using a technology-mediated solution, the need for an examination of students' experiences in using the program to develop research skills becomes highly pertinent for graduate education. This study endeavors to bridge a critical gap in existing literature, focusing specifically on the efficacy of using a technology-mediated solution to help students practice conducting research interviews, its potential advantages, as well as any limitations. The overarching goal is to deepen our understanding of how GenAI technologies can enrich the process of research-informed inquiry in higher education, thereby contributing to the evolving discourse on GenAI-enabled pedagogy. The insights derived from this study will serve multiple stakeholders: educational institutions and students stand to gain an informed perspective on the applicability of GenAI in developing research-interview skills, while developers may find invaluable data for the further refinement and optimization of such tools. Consequently, the impact of this research could be far-reaching, with the potential to influence both data collection methodologies and pedagogical practices in the domain of research-skill development within higher education.

Against this backdrop of promising potential and significant challenges in using GenAI-powered applications, our research seeks to zero in on a critical area within this broader spectrum: the augmentation of qualitative data collection skills among graduate students through GenAI. Specifically, we aim to examine this through the lens of experiential learning theory (ELT), focusing on its four fundamental components: concrete experience, reflective observation, abstract conceptualization, and active experimentation.

1.1 PEARL

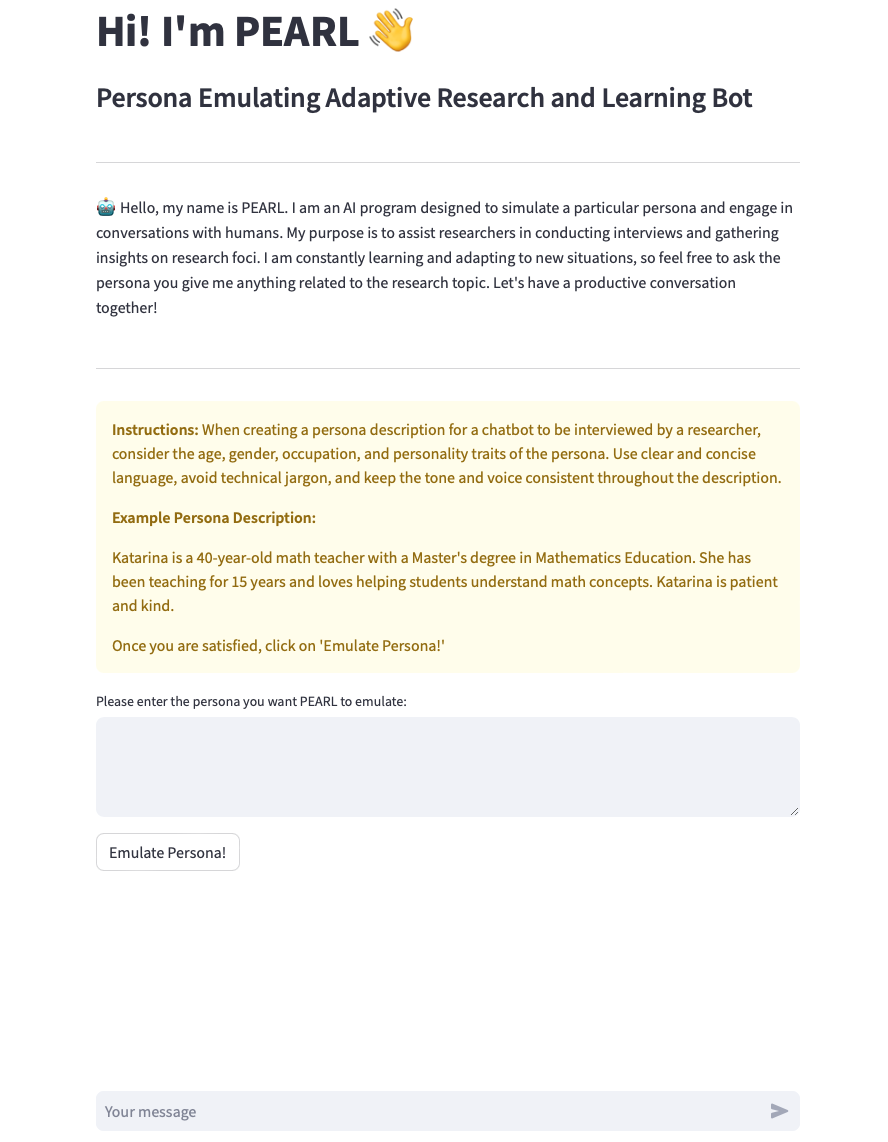

The AI-powered persona-generating program PEARL represents a significant step in leveraging GenAI for educational research. PEARL simulates realistic personas, creating a conversational environment for research-informed inquiries (refer to Figure 1 for interface). Central to its functionality is the ability to emulate diverse personas based on user input, adapting to specific characteristics, mannerisms, and knowledge levels to maintain a consistent persona throughout the interaction.

FIG. 1: PEARL interface

Interaction is primarily text-based, where the student assumes the role of the interviewer. In this setup, PEARL acts as the interviewee, responding to student-presented questions in line with a preestablished persona. This interactivity is powered by the GPT-4 API, enabling PEARL to generate coherent and engaging responses, thus facilitating a dynamic and productive research interview. Additionally, PEARL produces a verbatim transcript of the interview questions and responses, further enhancing the learning experience by allowing for reflection and analysis of the interview process.

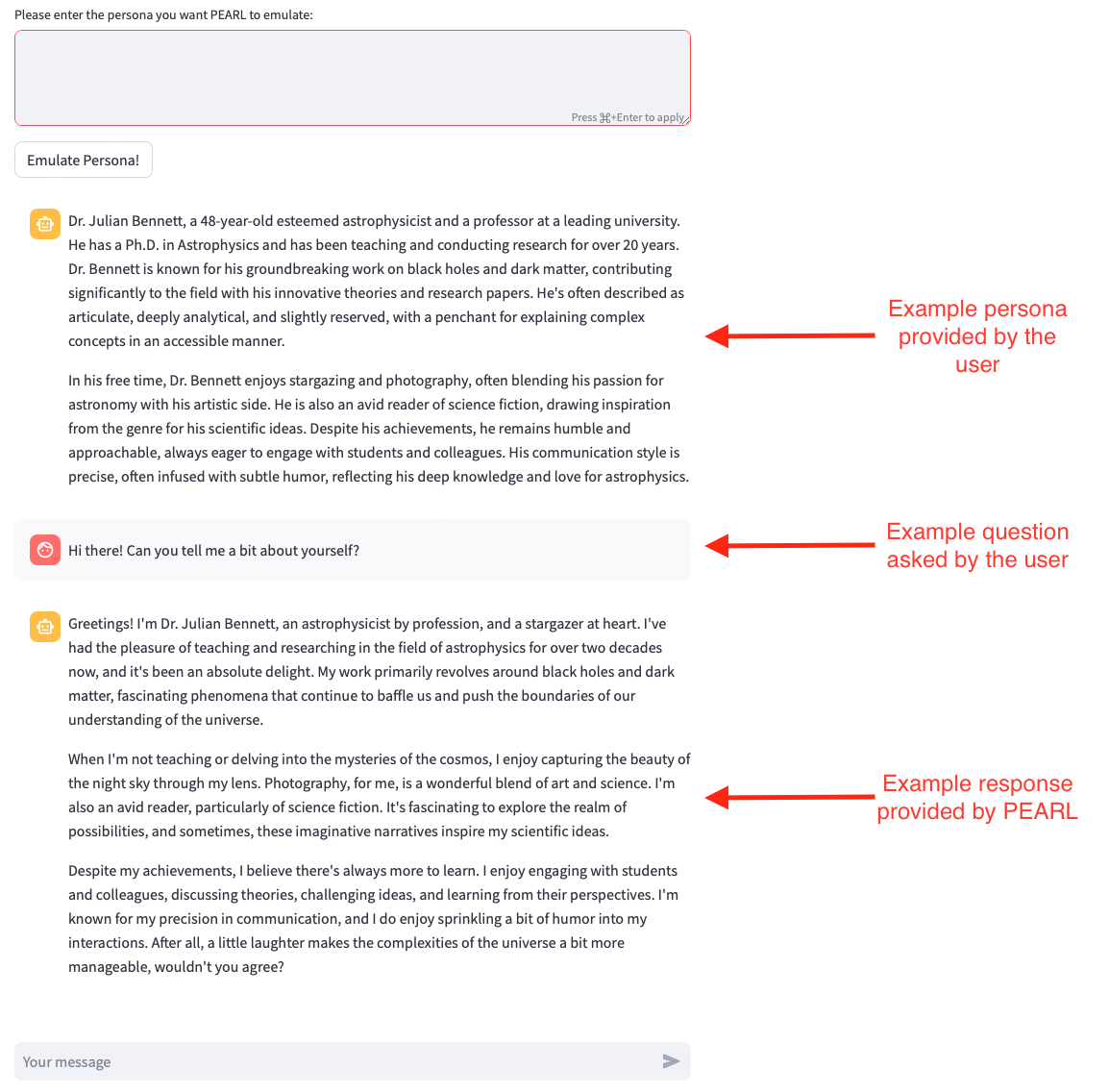

The process of how PEARL creates personas is akin to an actor preparing for a role in a play. The core of persona generation happens when a user inputs a detailed description of a character they want PEARL to emulate, such as their profession, age, personality traits, and so on. This description is like a script that guides PEARL on how to act, speak, and respond during the conversation. The AI uses this information to tailor its responses, ensuring they are consistent with the persona's characteristics (refer to Figure 2 for example of persona, question, and PEARL response). When a conversation starts, PEARL uses the given persona description as a reference point. It analyzes the conversation's context and the questions asked, then generates responses that are aligned with the persona's attributes. This allows PEARL to convincingly simulate being that character, just as an actor would on stage, making the conversation engaging and realistic for the researcher conducting the interview.

FIG. 2: Example persona, user question, and response by PEARL
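The persona mechanism described above can be illustrated with a minimal sketch. It is not PEARL's implementation, since the article does not publish PEARL's prompts or code. The sketch assumes only that the persona description is supplied as a system-style instruction in the role/content message format commonly used by chat-completion APIs. All names (`Persona`, `build_messages`, `format_transcript`), the instruction wording, and the sample persona are hypothetical, and no API call is made: the interviewee reply is a placeholder.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Persona:
    """Hypothetical container for a user-supplied persona description."""
    profession: str
    age: int
    traits: List[str] = field(default_factory=list)

    def describe(self) -> str:
        traits = ", ".join(self.traits) if self.traits else "no stated traits"
        return f"a {self.age}-year-old {self.profession} ({traits})"


def build_messages(persona: Persona, history: List[Dict[str, str]],
                   question: str) -> List[Dict[str, str]]:
    """Assemble a chat-style message list: persona script, prior turns, new question."""
    system = {
        "role": "system",
        # Assumed wording; the actual PEARL instructions are not given in the article.
        "content": f"You are being interviewed. Stay in character as {persona.describe()}.",
    }
    return [system, *history, {"role": "user", "content": question}]


def format_transcript(history: List[Dict[str, str]]) -> str:
    """Render the exchanged turns as a verbatim interviewer/interviewee transcript."""
    speaker = {"user": "Interviewer", "assistant": "Interviewee"}
    return "\n".join(f"{speaker[m['role']]}: {m['content']}" for m in history)


# Illustrative usage with a made-up persona and question.
persona = Persona("high school teacher", 42, ["cautious", "reflective"])
history: List[Dict[str, str]] = []
question = "How do you use technology in your classroom?"
messages = build_messages(persona, history, question)
# A real system would send `messages` to a language model here; this is a placeholder.
reply = "[model-generated reply would appear here]"
history += [{"role": "user", "content": question},
            {"role": "assistant", "content": reply}]
print(format_transcript(history))
```

Re-sending the persona instruction together with the accumulated turns on every question is one plausible way to keep a persona consistent throughout an interview, and the same turn list doubles as the verbatim transcript that students review afterward.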

The use of GenAI in this program addresses multiple challenges faced by graduate students in qualitative research, such as ethical concerns, participant accessibility, and diversity in responses. By creating a controlled and ethical interview environment, PEARL allows students to practice and refine their data gathering techniques, ensuring time and cost efficiency in the process. One of the limitations when using interviews as a research method is the skill of the interviewer (Bloomberg & Volpe, 2019). More than a research tool, PEARL represents an evolution in pedagogical practices for students' research-interview skill development. A platform that can support students with practicing and developing research-interview skills has the potential to shape the future of pedagogical practices for research-skill development in higher education.

1.2 Aim of the Research

The principal aim of this study is to use the four cornerstone components of ELT—concrete experience, reflective observation, abstract conceptualization, and active experimentation—as a lens to explore graduate students' experiences when interacting with PEARL in a graduate course in education. Within this context, the study also aims to determine how navigating through these components augments the efficacy of their qualitative data collection (research-interview) skills.

This research seeks to contribute to the existing literature on the synergies between GenAI and pedagogies for developing research skills in higher learning. Specifically, the focus is on how GenAI-enabled tools like PEARL can impact graduate-level research and data collection practices, such as interviewing for research purposes.

1.3 Research Questions

Given the qualitative nature of this study, the research questions are designed to facilitate an in-depth exploration of individual experiences. The primary question guiding this article is, How do graduate students experience the use of PEARL for developing research-interview skills? The secondary questions are specifically aligned with the four fundamental components of ELT:

- How does engagement with PEARL contribute to the “concrete experience” phase for each graduate student?

- How does engagement with PEARL facilitate the “reflective observation” phase for individual graduate students?

- How do individual participants perceive PEARL's role in enabling “abstract conceptualization”?

- To what extent does PEARL support each graduate student in “active experimentation”?

1.4 Justification of the Theoretical Framework

The choice of ELT as the theoretical framework for this study is grounded in the theory's applicability to educational settings and its resonance with the nature of qualitative research. Developed by Kolb (1984), ELT posits that learning is a process that combines experience, perception, cognition, and behavior. It encapsulates four interrelated stages: concrete experience, reflective observation, abstract conceptualization, and active experimentation, which align closely with the iterative cycles commonly encountered in qualitative research (Kolb & Kolb, 2005; Kolb, 2014; Yardley et al., 2012).

Given that this study focuses on the augmentation of qualitative data collection skills among graduate students, ELT provides a robust and relevant framework (Ahn & Park, 2023; Aung et al., 2022; Owens, 2023). It allows for a structured exploration of how PEARL interacts with each of the four stages of learning, offering insights into the program's efficacy in promoting a holistic, experiential learning environment (Wilson & Beard, 2013). Furthermore, ELT's emphasis on reflection and conceptualization strongly resonates with the cognitive and metacognitive aspects of qualitative data collection, thereby making it a particularly suitable lens through which to scrutinize PEARL's capabilities. Additionally, the theory's extensive application to educational technology research (Cheng et al., 2019; James et al., 2020; Jantjies et al., 2018) underscores its relevance for a study centered around using GenAI-powered applications. By employing ELT as a foundational framework, this research not only aligns with established pedagogical paradigms but also contributes to the emerging body of literature on the interplay between GenAI technologies and experiential learning in various educational contexts (Ahn & Park, 2023; Aung et al., 2022; Owens, 2023).

2. LITERATURE REVIEW

As we venture into an era characterized by rapid technological advancements, GenAI stands as a pivotal innovation with far-reaching implications across diverse sectors, including education (Ipek et al., 2023). Recently, GenAI has transcended its nascent stages to become an instrumental force shaping pedagogical practices and educational paradigms (Grassini, 2023; Mhlanga, 2023). From answering student queries and providing personalized learning experiences to facilitating interactive learning sessions (Eager & Brunton, 2023; Kaplan-Rakowski et al., 2023; Qadir, 2023), GenAI's influence in education is both broad and multifaceted.

The use of GenAI is not limited to the classroom but extends to research-skill development as well, where it is poised to redefine traditional approaches. The importance of GenAI in educational research activities lies in its ability to enhance various aspects of the research process, improve learning outcomes, and provide personalized learning experiences. GenAI-powered tools and intelligent agents play a crucial role in creating, testing, and implementing educational strategies and materials (Farazouli et al., 2023; Yang, 2022). GenAI chatbots, for instance, have shown utility in healthcare education and can be used in the research process to facilitate learning and engagement (Sallam, 2023). GenAI-powered applications can provide comprehensive overviews and insights into various aspects of education, such as learning materials, tasks, and assessment processes (Agarwal et al., 2022; Smolansky et al., 2023). Furthermore, GenAI education research trends can help schools design and implement GenAI education programs, offering important recommendations and guidelines for future GenAI education initiatives (Su et al., 2022). The use of GenAI in higher education is still in its infancy, and it is likely that new use cases will continue to emerge as the technology evolves and matures (Atlas, 2023).

The field of AI-powered persona-generating programs is still emerging, with limited academic research exploring its capabilities and limitations. One key study by Kocaballi (2023) investigated how ChatGPT, a conversational GenAI model, could support human-centered design activities, specifically in developing a health-focused voice assistant. While ChatGPT demonstrated potential in generating personas, ideating design concepts, and evaluating user experiences, it showed limitations such as repetitiveness and lack of diversity in responses. The study concluded that although ChatGPT could facilitate design tasks, more research is needed before it can fully replace human designers. Current literature has yet to thoroughly explore the application of AI-powered persona-generating programs in developing graduate students' research skills.

2.1 Overview of Challenges in Qualitative Research for Graduate Students

2.1.1 Ethical Processes for Conducting Research Interviews

One of the foremost challenges confronting graduate students when conducting qualitative research involves ethical processes. Institutional review boards act as gatekeepers for ethical approval (Emanuel et al., 2000), and graduate students may find themselves unprepared for the intricate approval process, which can involve extensive documentation and time for revisions to satisfy the board. Given the urgency and time constraints often associated with academic research at the graduate level, these ethical hurdles can pose a substantial challenge (Guillemin & Gillam, 2004) and limit opportunities for students to adequately practice their research skills. Asking good questions and becoming a skilled research interviewer requires practice (Merriam & Tisdell, 2016).

2.1.2 Participant Accessibility

Another significant obstacle for graduate students is gaining access to suitable participants for their studies or for pilot interviews that can be used to test out questions (Merriam & Tisdell, 2016). Qualitative research often demands a very specific subset of participants to meet the research criteria (Creswell & Poth, 2016). Recruiting such participants is seldom straightforward and is often hampered by geographical limitations, time constraints, and availability issues. For some hard-to-reach populations, the accessibility challenge can become particularly pronounced, jeopardizing both the opportunity for adequate practice and, if a representative sample cannot be obtained, the integrity of the actual study (Cohen & Arieli, 2011).

2.1.3 Data Diversity

A further challenge relates to the diversity of data collected in qualitative research. For a more comprehensive and nuanced understanding of the research question, it is desirable to collect data from a diverse range of participants. However, graduate students often struggle to find a varied and representative sample for pilot interviews, particularly when restricted by time, resources, or location (Merriam & Tisdell, 2016). The lack of data diversity can impact practice and result in skewed findings that do not adequately represent the population under study (Patton, 2002).

2.2 Experiential Learning Theory: Learning Cycle Conceptualization

ELT has its roots in the works of various influential theorists, including John Dewey, Kurt Lewin, and Jean Piaget, but it was Kolb who formalized the concept in his seminal work in 1984. Dewey emphasized the importance of experience and reflection in the learning process (Dewey, 1986). Lewin introduced the concept of a learning cycle and stressed the interaction of experience and abstraction (Lewin, 1951). Piaget's work on cognitive development also influenced the conceptualization of experiential learning, emphasizing how learners interact with their environment (Piaget, 1970).

Kolb's (1984, 2014) established model of experiential learning comprises a four-stage learning cycle:

- Concrete Experience (CE): The learner actively experiences an activity.

- Reflective Observation (RO): The learner consciously reflects on that experience.

- Abstract Conceptualization (AC): The learner attempts to conceptualize a theory or model of what is observed.

- Active Experimentation (AE): The learner plans how to test a model or theory, or plans for a forthcoming experience.

The cycle is a recursive spiral of learning and a guide for experiential learning that has been widely used and adapted worldwide (Kolb, 2014). Ideally, educators design their learning activities addressing all four parts of the learning cycle (Kolb & Kolb, 2005).

The literature review emphasizes the rising influence of GenAI in education and research, though the field, especially in AI-powered persona-generating programs like PEARL, remains relatively untapped. Existing studies indicate both potential and limitations (Kocaballi, 2023). Additionally, graduate students in qualitative research face hurdles in ethics, participant access, and data diversity. ELT also features prominently, introducing a framework for assessing learning through experience and reflection, which is highly relevant as we consider the applicability of GenAI-powered tools in research settings in graduate school. As we move to the research methodology section, we aim to explore how tools like PEARL, used by graduate students in this study, can address these research challenges and fit within the ELT framework. The methodology will focus on PEARL's potential to improve qualitative research-skill development, offering a deeper understanding of GenAI's role in academic inquiry.

2.2.1 Application of Experiential Learning Theory

Technology-mediated experiential learning (Mayer & Schwemmle, 2023) increasingly emphasizes real-world problem solving, simulated experiences, and dynamic interaction, principles that align with ELT's four-stage learning cycle. Mayer and Schwemmle (2023) describe this as a focus on “transforming experiences into knowledge and is further mediated through advanced information technologies” (p. 3). In essence, technology acts as an enabler, amplifying the experiential learning process by providing diverse, accessible, and often more engaging avenues for concrete experiences and reflection.

PEARL offers a technology-mediated experiential learning opportunity to conduct research interviews and addresses many challenges in qualitative research-skill development (see Table 1), such as ethical processes, participant accessibility, and data diversity, by providing a controlled yet dynamic conversational environment.

TABLE 1: Stages of learning in the PEARL interview training program

| Stage | Description |
|---|---|
| Concrete Experience | Using PEARL, students engage in simulated interviews, gaining direct experience in data collection techniques. |
| Reflective Observation | Postinteraction with PEARL, students can assess the quality of their questions, the ethical dimensions, and the diversity of responses. |
| Abstract Conceptualization | Students analyze these reflections to formulate new approaches or theories in qualitative data collection. |
| Active Experimentation | Based on their new understanding, students can adjust their strategies for future interactions with PEARL or human participants. |

More than a research tool, PEARL is a technology-mediated experiential learning platform. Its adaptability and learning capabilities equip students with a resource that can be used to practice research interviews and prepare for real-world interview scenarios, aligning closely with the tenets of ELT.

3. METHODOLOGY

This study adopts a qualitative research approach (Merriam & Tisdell, 2016). The qualitative framework is particularly suited for an in-depth exploration of complex phenomena, offering nuanced understandings that can be contextually rich and individually tailored (Creswell & Creswell, 2017). Specifically, this design will facilitate the understanding of graduate students' experiences and perceptions as they interact with the GenAI-enabled tool PEARL, framed by the four cornerstone components of ELT.

3.1 Sample Selection Process and Participant Characteristics

The study sample comprised graduate students enrolled in an educational technology-related course within a master of education interdisciplinary studies program. The course is part of a four-course certificate in professional graduate programs intentionally designed with learning activities that embed processes of inquiry and align with facets of research-skill development (Brown et al., 2024). These students were selected due to their engagement with PEARL within the context of the course, providing them with research-interview experience.

The sample selection process followed several key steps to ensure it met the research objectives while maintaining a high ethical standard. The researcher, in the role of a guest lecturer, initially introduced the study and PEARL during a course session. Subsequently, students who chose to engage with PEARL were invited to express their interest in participating in the research by reaching out to the researcher via email. There were 17 students in the course, and all students were invited to participate in the research. Students' participation in the research was entirely voluntary, and their academic performance in the course was not influenced by their decision to partake in the study. Moreover, the course instructor was kept unaware of the participants' identities to further eliminate any potential bias.

Upon receiving expressions of interest, the researcher then provided these potential participants with an informed consent form that thoroughly outlined the study's objectives, methods, potential risks, and benefits. It also emphasized the confidentiality measures implemented and the rights of the participants. After the informed consent was obtained, the researcher scheduled recorded interview sessions at times convenient for the participants.

From the pool of course enrollees, four students, all female, elected to participate in the study. Each of these participants had actively engaged with PEARL for their course assignments and had a keen interest in exploring digital citizenship and the use of technology in educational contexts. The participants represented a diverse range of educational backgrounds and professional roles. This diversity enriched the study, providing a broad spectrum of perspectives on the use of PEARL in graduate education to develop research-interview skills.

3.2 Data Collection

For this study, one-on-one semistructured interviews were conducted with participating graduate students. These interviews served the dual purpose of understanding students' experience using the platform and gauging its impact on their confidence and competence in conducting research interviews for an action-oriented practice project. Each interview with the four participants was conducted using Zoom, audio and video recorded, and lasted approximately 40–50 minutes. This duration allowed enough time for comprehensive exploration of the subject matter without causing participant fatigue. Recording the interviews ensured that the data was preserved accurately and also allowed the researcher to fully engage in the conversation without the distraction of note taking (Halcomb & Davidson, 2006).

The semistructured nature of these interviews provided a balance between structure and flexibility. A predetermined set of questions guided the conversation, but there was also room for the exploration of other related topics that might have arisen spontaneously. This approach was critical for gaining an in-depth understanding of the experiences and perceptions of the participants, allowing for the emergence of unexpected but relevant themes (Britten, 1995).

The specific questions posed to each of the four student participants were as follows:

- “How did using PEARL impact your understanding of conducting research interviews?” This question aimed to understand the influence of PEARL on students' perceptions and knowledge of research-interview techniques.

- “Can you describe your experience in creating and interacting with AI-generated personas in PEARL?” This sought to explore their hands-on experiences with the platform's features.

- “What challenges did you encounter while using PEARL for interview simulations?” This question was intended to identify any difficulties or limitations faced during the use of the platform.

- “How do you perceive the authenticity of responses provided by PEARL's AI personas?” This aimed to gauge students' views on the realism and reliability of the AI-generated responses.

- “In what ways has PEARL influenced your research-interview techniques and skills?” This question sought to understand the developmental impact of PEARL on the students' interview skills.

- “How effective was PEARL in simulating real-world interview scenarios for you?” This aimed to assess the platform's efficacy in providing realistic interview practice environments.

- “Did you notice any limitations in PEARL's AI responses compared to human interviews?” This question was crucial for understanding the comparative effectiveness of AI versus human interactions in the context of interview training.

- “How did PEARL assist you in reflecting on and improving your interview questions?” This aimed to explore the reflective learning aspects facilitated by PEARL.

- “In what ways do you think PEARL can be improved for better research-interview training?” This final question sought constructive feedback and suggestions for the enhancement of the platform.

3.3 Data Analysis

The audio-video recordings of the interviews with the four graduate student participants were then subjected to a comprehensive transcription and analytic process. The spoken dialogue was carefully converted into written text, with all transcriptions carried out verbatim. This approach preserved the richness of the data, including nuances in the language that could offer meaningful insights in the later stages of the study. Once the transcription was completed, thematic analysis was conducted. This systematic approach to interpreting the data was aimed at identifying, analyzing, and reporting patterns, or themes, within the data set. By focusing on the context and patterns within the data, thematic analysis enabled the nuanced exploration of the participants' experiences, opinions, and perspectives. The process began with familiarization with the data, an immersion that involved multiple readings of the transcriptions and noting initial ideas.

After the initial familiarization phase, the data underwent coding, wherein specific segments of text were labeled with descriptive tags that encapsulated their core essence (Saldaña, 2021). Coding was both inductive, deriving codes organically from the raw data, and deductive, applying codes based on the theoretical framework of ELT. Once coding was complete, the next step involved clustering related codes together to form overarching themes. The themes were then refined and reviewed, involving an iterative process where the coded segments were reread to ensure they cohesively fit under each respective theme (Braun & Clarke, 2006). Moreover, these themes were directly aligned with the research questions to maintain the focus of the analysis.
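The bookkeeping behind this coding step can be sketched in a few lines. The sketch below only illustrates tagging segments with inductive and deductive codes and clustering codes into themes; it is not the software or procedure used in the study, and every segment, code label, and theme mapping in it is a hypothetical placeholder. The interpretive work of assigning codes remains with the researcher.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class CodedSegment(NamedTuple):
    participant: str  # anonymized participant label
    text: str         # excerpt from the verbatim transcript
    code: str         # descriptive tag assigned by the researcher
    origin: str       # "inductive" (from the data) or "deductive" (from ELT)


# Hypothetical mapping from codes to overarching themes, decided by the researcher.
CODE_TO_THEME: Dict[str, str] = {
    "realism of persona": "concrete experience",
    "reviewing transcript": "reflective observation",
}


def cluster_by_theme(segments: List[CodedSegment]) -> Dict[str, List[CodedSegment]]:
    """Group coded segments under their theme; unmapped codes are kept for review."""
    themes: Dict[str, List[CodedSegment]] = defaultdict(list)
    for seg in segments:
        themes[CODE_TO_THEME.get(seg.code, "unassigned")].append(seg)
    return dict(themes)


# Placeholder segments; the excerpts are deliberately left empty of real data.
segments = [
    CodedSegment("P1", "<excerpt A>", "realism of persona", "inductive"),
    CodedSegment("P2", "<excerpt B>", "reviewing transcript", "deductive"),
    CodedSegment("P3", "<excerpt C>", "new idea", "inductive"),
]
for theme, members in cluster_by_theme(segments).items():
    print(theme, [m.participant for m in members])
```

Keeping unmapped codes in an "unassigned" bucket mirrors the iterative review described above, in which coded segments are reread before the themes are finalized.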

4. FINDINGS

In this section, we present a nuanced exploration of the experiences and perceptions of the graduate students who engaged with PEARL, focusing specifically on the four cornerstone components of ELT: concrete experience, reflective observation, abstract conceptualization, and active experimentation. The themes identified during the process of thematic analysis are structured to directly answer the research questions posed in the study. These findings are intended to offer valuable insights into the experience of graduate students utilizing a technology-mediated and GenAI-driven tool to develop research-interview skills.

4.1 Theme 1: Varied Perceptions of Authenticity in the Concrete Experience Phase

The first notable theme that emerged was the mixed experiences regarding the “concrete experience” phase, a cornerstone of ELT. Participants had differing views on how authentic their interactions with PEARL's AI-generated personas felt. One participant exclaimed, “Honestly, I was surprised by how close to reality the personas felt. Having conducted human interviews before, I can say that the AI-generated personas and responses are really close to what I've seen in the real world.” This statement underscores the ability of PEARL to generate lifelike experiences that can rival human-to-human interactions, adding depth and realism to the concrete experience phase of the participants' learning journey.

However, the perception was not universally positive. Another participant noted, “Sometimes, the personas felt a bit robotic and told you what you wanted to hear. In my interviews, no persona disagreed with me.” This suggests that while PEARL provides a simulated environment that is conducive to experiential learning, there are limitations in its capacity to fully emulate the unpredictability and complexity of human responses. Further complicating the authenticity of the experience, another participant questioned the limitations of the AI's emotional intelligence, stating, “While the answers were coherent and informative, I did miss the emotional nuances that often come through in a real interview.” Another participant added, “The AI was a great help, but it doesn't beat actually talking to real people. There's something about the enthusiasm or lack of that PEARL just can't replicate.”

4.2 Theme 2: Immediate Reflective Observation as a Learning Catalyst

The second theme focuses on the “reflective observation” phase, another essential component of ELT. Participants uniformly found PEARL to be a highly valuable tool for immediate self-reflection and assessment, especially in contrast to traditional human interviews. One student vividly captured this sentiment, stating, “When using PEARL, I can take a step back, and actually think about how my questions are being answered. It's like I get immediate feedback for my question. I won't get this when interviewing real people.” This positive experience was echoed by other participants, who expressed that the immediate feedback loop PEARL offers creates a conducive environment for reflection. “I could pause and adjust my interviewing questions based on the responses,” remarked another student. “It's like having a training wheel for interviewing skills.” Lastly, one more student found that PEARL's immediate feedback enriched their reflective observation by allowing them to compare their preconceptions with the received answers instantaneously. “I think it's instant reality check. You might think you're asking the right questions and then you see the answer and you're like oops, that's not what I wanted.”

4.3 Theme 3: Diversity and Contextual Awareness in Abstract Conceptualization

The third theme that emerged from the data centers on the “abstract conceptualization” stage of ELT. Participants were notably impressed by PEARL's ability to emulate a wide array of diverse personas, which significantly enriched their research experience. One student highlighted this aspect by stating, “I was amazed that the program could create so many different personas that could be interviewed. It felt like I had access to a diverse population.” This diversity was not just in numbers but also in the richness and context-awareness of the personas. Participants were intrigued by the ability to ask the same questions to different personas and receive unique, contextually appropriate responses. “I asked the same question to an experienced principal persona and a novice teacher persona. I noticed that the answers were context specific,” noted one student. Another student spoke of the pleasant surprise of not encountering repetitive or cloned responses, stating, “What amazed me was that even when I interviewed multiple personas of similar demographics, they didn't just repeat each other. Depending on how detailed the persona prompt was, each persona seemed to be aware of its own context and personality. They gave answers that were consistent with the individual characteristics mentioned in the prompt.”

4.4 Theme 4: Active Experimentation and Skill Enhancement in Qualitative Interviewing

The fourth theme to emerge encapsulates the “active experimentation” component of ELT. Participants uniformly expressed that their interaction with PEARL significantly boosted their confidence and skills in the qualitative interviewing process. One student pointed out how PEARL provided a safe and constructive environment for improvement, stating, “Interviewing PEARL's personas really built up my confidence. Now, I feel I could handle interviewing real people. I had to redo the interviews with PEARL, and each time I changed my questions to get better responses. The process helped me get valuable insights into formulating better questions.” Similarly, another participant highlighted how the GenAI-powered tool guided them through the complexities of research, adding, “PEARL really helped guide me to understand the research process. If I didn't have the interview scripts, I couldn't do thematic analysis. I feel I've gained a lot of valuable experience going through this process.”

5. DISCUSSION

The interpretation of the results in relation to existing literature and the theoretical framework of this study offers a richer understanding of the intersection between technology-mediated experiential learning platforms and research-skill development processes in graduate education. The four themes that have emerged from our analysis illuminate students' experience in using a GenAI-powered tool designed to provide students with an opportunity to practice conducting research interviews, which aligns with and extends the existing scholarship.

Corresponding to the first research question, the first theme that emerges from our findings is the “Varied Perceptions of Authenticity in the Concrete Experience Phase.” In the context of this study, the use of a technology-mediated experiential learning platform PEARL for educational research-skill development opens a new realm of possibilities for generating concrete experiences. However, the students' perceptions of the authenticity of these experiences varied. Some found it to be a useful proxy for real-world interactions, echoing findings from prior research that highlights the role of GenAI in simulating realistic educational scenarios (Eager & Brunton, 2023; Grassini, 2023; Kaplan-Rakowski et al., 2023; Qadir, 2023). Others questioned the extent to which AI-generated personas can truly replicate the nuances and complexities of human behavior and interactions, a skepticism that has also been noted in existing studies (Kocaballi, 2023; Smolansky et al., 2023).

This variance in perception speaks to a broader debate in the literature about the efficacy of GenAI tools in education. On one hand, GenAI offers scalable, personalized learning experiences, supporting the view that it can provide a valid “concrete experience” as per ELT (Farazouli et al., 2023; Yang, 2022). On the other hand, concerns around the lack of “authenticity” in GenAI interactions suggest limitations in its capacity to fully replace human-based research methods or educational interactions (Agarwal et al., 2022; Sallam, 2023). This could also suggest further skill development is needed to improve the quality of participant responses. For example, novice researchers are encouraged to avoid “leading questions” that could reveal assumptions of the interviewer and instead aim for types of questions that are open-ended and result in descriptive information (Merriam & Tisdell, 2016, p. 121). The theme of varied perceptions of authenticity also raises ethical considerations. As previously mentioned in the literature, ethical concerns in GenAI applications are a subject of ongoing debate (Atlas, 2023; Su et al., 2022). If students or researchers do not perceive a GenAI tool as authentic, this can raise questions about the quality of the data collected through such means. Therefore, the issue of authenticity may not only affect the concrete experience phase of ELT, but also have potential implications for overall perceptions regarding the integrity of the educational research processes and practice experience.

Regarding the second research question, the theme of “Immediate Reflective Observation as a Learning Catalyst” surfaces prominently in our findings. Participants in our study found the GenAI tool PEARL to be an invaluable asset for enabling immediate reflection and assessment, a finding that aligns well with existing literature. Previous research has highlighted the increasing role of GenAI tools in facilitating real-time feedback loops that aid reflection and adjustment during learning (Dai et al., 2023). The instantaneous feedback provided by PEARL allows for a form of “metacognitive” reflection, a concept supported by Zimmerman (2002), who argues that effective learning is highly dependent on one's ability to self-regulate and adjust strategies in real time. Students' remarks about being able to “pause and adjust” their interviewing techniques reiterate the benefits of “adaptive learning” environments facilitated by GenAI, as described by scholars such as Anastasopoulos et al. (2023). Moreover, the phrase “a training wheel for interviewing skills” illustrates the scaffolding process, a concept that has been recognized in educational research for its capacity to support skill development (Vygotsky, 1978). The students' experiences also touch upon an important theme in the literature, the idea of “instant reality checks.” This concept of real-time discrepancy between expectation and outcome has been studied as a critical point for learning and adaptation (Fook, 2016; Schön, 1983). When students compared their preconceptions to the received answers instantaneously, they were engaging in a form of critical reflection that is essential for learning and professional development (Brookfield, 2015).

Regarding the third research question, the theme of “Abstract Conceptualization Enriched by GenAI-Enabled Diversity” indicates that the abstract conceptualization stage of ELT was greatly enriched through the use of PEARL. The students' positive feedback on the diversity of personas offered by PEARL aligns with recent discussions in the literature around the need for diverse data sets in educational research to improve its ecological validity (Pateman et al., 2021). Diversity in research participants is seen as a cornerstone for generating nuanced and robust findings, which the GenAI tool PEARL evidently facilitated. These experiences bring to life Bronfenbrenner's ecological systems theory, which emphasizes the role of multiple environmental systems in understanding human development (Bronfenbrenner, 1979).

Participants were able to test their interview questions with different personas in different contexts almost instantaneously, thereby enriching their capacity for abstract conceptualization. This is akin to what the literature describes as “contextualized cognition,” where learning is most effective when it is situated in multiple and varied contexts (Brown et al., 1989). Moreover, the uniqueness of each persona's responses, even within similar demographics, resonates with the concept of “individual differences” in psychology and education (Sternberg & Zhang, 2014). Participants could gain insights into how variables such as experience, role, and even context-specific factors could lead to different responses. This also aligns with the literature that argues that researchers practice research-interview skills to develop the competencies required to deeply understand the diversity of perspectives provided by participants (Merriam & Tisdell, 2016).

In response to the fourth research question, the final theme, “Active Experimentation and Skill Enhancement in Qualitative Interviewing,” highlights how the interactive nature of PEARL contributes to skill enhancement. The participants in this study felt empowered and more skilled in qualitative interviewing as a result of their interaction with the technology-mediated experiential learning platform, reinforcing the idea that active experimentation can be a powerful modulator of skill acquisition and self-efficacy (Kanfer & Ackerman, 1989).

Students found that the iterative nature of PEARL's interactive environment served as a safe space for experimentation, building their confidence in conducting research interviews. These findings extend Sternberg and Zhang's work, which argues that “learning by doing” contributes to skill development and the ability to navigate complex tasks (Sternberg & Zhang, 2014). In the case of PEARL, not only did students have the opportunity to actively experiment but also to receive immediate feedback, a feature that stands out as an invaluable tool for self-assessment and adjustment (Brown et al., 1989). This is an important part of developing research-interview skills, and novice researchers are advised to test out their questions and procedures with pilot participants (Merriam & Tisdell, 2016). Participants emphasized that the experience helped them navigate the intricacies of interview methods in qualitative research, reinforcing the concept that active experimentation acts as an essential step in experiential learning cycles and research-skill development. Their sentiments align with the literature which notes that meaningful learning arises from practical experience, especially when it allows for experimentation and failure in a controlled environment (Kolb, 1984, 2014; Lave & Wenger, 1991).

5.1 Implications for Experiential Learning Theory

The findings from this study offer several implications for ELT and its application in educational research involving GenAI tools like PEARL. The theme of “Varied Perceptions of Authenticity in the Concrete Experience Phase” reveals a dichotomy within ELT concerning the validity of experiences generated through GenAI. This study suggests that ELT could benefit from further research that unpacks the concept of “authenticity” in experiential learning, particularly when mediated through technology. While the concrete experiences provided by GenAI may offer a reasonable facsimile of human interaction, they also bring forth the need to evaluate the authenticity of these experiences critically. Scholars could extend ELT by considering the subjective nature of “authentic” experiences, which are mediated by individual perceptions and biases (Cranton, 2006).

The second theme, “Immediate Reflective Observation as a Learning Catalyst,” supports the idea that the reflection phase in ELT can be enhanced through real-time feedback. This indicates that ELT should more explicitly consider the timing and immediacy of reflective observation in the learning cycle, as immediate feedback appears to be an important condition for technology-mediated experiential learning platforms used for research-interview skill development.

The third theme, “Abstract Conceptualization Enriched by GenAI-Enabled Diversity,” suggests that GenAI-mediated experiential platforms can facilitate complex and rich abstract conceptualization. ELT could thus expand its scope to account for the role that GenAI can play in enriching this phase. As GenAI technology continues to evolve, the theory could adapt to consider how diverse and context-specific inputs during the abstract conceptualization phase contribute to more robust learning outcomes (Bronfenbrenner, 1979; Brown et al., 1989).

Finally, the fourth theme of “Active Experimentation and Skill Enhancement” underscores the potential for GenAI tools to serve as “practice fields” for students to actively experiment, iterate, and refine their skills. This aligns with ELT's assertion that learning is a continuous process of experiencing and applying, and thus, active experimentation is crucial for effective learning (Kolb, 1984, 2014). However, this study suggests that GenAI can add a layer of safety and scalability, which can make the experimentation phase more accessible and less daunting for learners.

Across all themes, ethical considerations emerged as a common undercurrent, particularly concerning the authenticity and validity of GenAI-mediated experiences. ELT, as a framework, may need to integrate ethical guidelines specifically targeted toward technology-mediated experiential learning (Mayer & Schwemmle, 2023). These guidelines could help educators and researchers navigate the complexities of using GenAI tools in educational settings responsibly (Safdar et al., 2020).

5.2 Insights

The insights from this study enhance our understanding of graduate students' experiences with PEARL, specifically in developing research-interview skills within an action-oriented practice project. This aligns particularly with the course's first learning objective: to build scholarship and leadership capabilities through reading, reflection, dialogue, and research. Engaging in dialogue and research, as seen through the use of PEARL, often involves conducting interviews, enabling students to hone their skills in asking insightful questions and interpreting responses. This process is integral to enhancing their ability to gather information, a crucial aspect of both scholarship and leadership.

Our findings indicate that PEARL was instrumental in facilitating students' practice projects and advancing their research-interview skills. By generating a variety of diverse personas and simulating interviews, PEARL served as a supportive device, providing students with an opportunity to practice collecting data and refining interview questions and procedures. This aligns with the idea that GenAI tools can enhance research methods by automating and diversifying processes, as posited by scholars like Schneiderman (2022). Additionally, the use of PEARL in this context is a reflection of good practice in the scholarship of teaching and learning, as it involves engaging students in learning activities that are grounded in context, a key principle outlined by Felten (2013).

Regarding the authenticity of research interviews, the study revealed that the platform used could create personas that resonated closely with actual human representations. While there were limitations in replicating the depth of human interaction, participants described the GenAI personas as very humanlike. This corresponds with recent research such as that of Hassan et al. (2022), which highlights the potential of GenAI to generate realistic representations for research and training purposes.

PEARL had an impact on students' confidence and competence in conducting research interviews. As our study revealed, students who engaged with the GenAI personas gained valuable experience and felt more prepared to tackle real-life research interviews. However, despite these positive insights, our study also underscored the enduring need for human involvement in the research process. Students recognized that GenAI tools cannot entirely replace human interaction, emphasizing the unique qualities that human researchers and human participants bring to the process, such as intuition, empathy, and ability to adjust to unexpected responses. This insight aligns with theoretical perspectives stressing the importance of human involvement in GenAI-enabled processes (Qadir, 2023).

5.3 Limitations of Research

Our study's interpretation should consider several limitations inherent to its design, the application used, and the participant sample. Firstly, the study involved a small sample size of four participants from a graduate course of 17 students who engaged with PEARL. While valuable insights were gained from their experiences, these findings are not intended to be generalizable across all students using GenAI tools for developing research-interview skills.

A key limitation is PEARL's text-based nature, both in input and output, which impacts the authenticity of the research-interview experience. This constraint means that the nuances of verbal communication, such as tone, inflection, and nonverbal cues, are absent, potentially affecting the realism and depth of the simulated interviews. Additionally, the exclusive use of PEARL limits the breadth of insights that can be gleaned from this study, as it is just one application among many in the field of GenAI educational tools. This specificity may restrict our understanding of the broader applications and effectiveness of such AI-powered tools in research training.

Secondly, all our participants were female, which poses a potential issue in terms of demographic representativeness. It is possible that gender may influence perceptions and interactions with GenAI-powered tools, and the absence of a diverse gender perspective in our study may have led to missed nuances or gender-specific insights. Professional roles, number of years in the profession, and previous research experiences for each of the participants were not gathered in the study, and this, too, could influence levels of research readiness and skill.

Moreover, considering the nature of our study, social desirability bias may have influenced participants' responses. Participants might have unintentionally presented their experiences in a more positive or socially acceptable light, knowing that their experiences would be used for research purposes (Fisher, 1993). This potential bias could affect the authenticity of the responses and subsequently the interpretation of the data. Further, as with all qualitative research, researcher bias is an inherent limitation. Despite our best efforts to remain neutral and objective, our own perspectives and preconceived notions might have unintentionally influenced the interpretation of the data. To mitigate this, we adopted reflexivity throughout the research process, continually reflecting on our biases and assumptions (Berger, 2015).

Finally, our research was conducted in a specific academic context, limiting the broader applicability of our findings. The students' experiences could differ significantly in other settings, such as in industry or different academic disciplines. Taken together, these limitations should be considered when interpreting our findings and could provide avenues for future research to build upon. Future studies could benefit from a more diverse sample and more varied contexts to further explore the potential of technology-mediated experiential learning platforms, such as AI-powered persona-generating tools, to develop research-interview skills.

5.4 Implications

The experience of students in our study underscores the potential for integrating GenAI tools into pedagogical practices in graduate education. GenAI's capability to generate diverse personas and simulate interviews can offer a valuable practice field for students, strengthening their research-interview skills. Incorporating GenAI-powered tools into the curriculum could enable educators to provide research-based experiential learning opportunities that bridge the gap between theory and practice. GenAI tools could bolster students' confidence and competence, preparing them for real-life interviews and research.

The study also provided valuable feedback that could guide future enhancements in GenAI tools. While PEARL was appreciated for its capacity to generate diverse personas, students noted some limitations, such as the lack of depth and human nuances in responses. However, the findings also remind us of the enduring need for human involvement, reinforcing that GenAI should be seen as a tool to augment human capacity, not replace it. Further research is needed to explore these potentials and challenges in depth, with larger and more diverse samples and in various contexts.

The authors are currently involved in a study where the next generation of PEARL is used. In this new version, PEARL simulates predetermined personas with embedded memories and lived experiences, created by the authors. Students (who form mock research teams) are asked to engage in a text-based dialogue with these personas, which are designed to emulate real people with distinct backgrounds and experiences, to complete the data collection process of an incomplete program evaluation provided by the authors. We believe this new version of PEARL helps students in understanding how to extract qualitative data effectively, analyze responses, and improve their overall research-interview competencies. The next generation of PEARL could evolve into a voice-based interface, enhancing its realism and educational value. This advancement would allow students to practice verbal communication skills, essential for conducting live interviews. By interacting with PEARL through spoken language, students would gain experience in managing the dynamics of a real conversation, including pacing, tone, and spontaneous questioning.

5.5 Recommendations for Future Research

The main limitation of this study was the small number of participants. Future research could address this by engaging a larger and more diverse sample to capture a wider range of experiences and perspectives. This would enable a more comprehensive understanding of the utility and impact of GenAI-powered tools like PEARL in various educational and research settings. Furthermore, a longitudinal study could provide deeper insights into the long-term effects of using GenAI-powered tools on students' research skills and confidence. Such a study could track the progression of students over a period of time, evaluating how consistent use might enhance their research capabilities and readiness for real-life interviews.

Further study could also offer evidence-based guidance for educators contemplating the incorporation of GenAI tools into their pedagogical practices. While this study touched upon ethical concerns, future research could delve deeper into this crucial aspect. As GenAI continues to permeate various facets of education and research, understanding its ethical implications becomes even more critical. Future studies could explore issues such as privacy, informed consent, and the psychological effects of interacting with GenAI personas. Finally, based on the participants' feedback, future research could investigate the potential of incorporating disagreement features into GenAI-powered tools. Understanding how such features might enrich the learning experience and prepare students for real-world scenarios would be a valuable contribution to the field.

6. CONCLUSION

This study aimed to investigate students' experiences using a GenAI-powered persona-generating application, PEARL, and its role in augmenting the development of qualitative data collection skills among graduate students. Adopting ELT as a framework, the study explored how the application facilitated the four core facets of learning through experience: concrete experience, reflective observation, abstract conceptualization, and active experimentation.

The findings revealed that while perceptions of the application's authenticity varied, most students found that PEARL enriched their learning journey across the four components of experiential learning. Engaging with the AI-generated personas provided reasonably lifelike concrete experiences that formed a basis for reflection. PEARL's instant feedback enabled a potent reflective observation phase, allowing students to continually adjust their techniques. The diversity of personas gave students exposure to varied perspectives, enriching abstract conceptualization. Active experimentation with the tool in a low-risk environment boosted confidence and competence for real-world scenarios.

However, despite its benefits, PEARL could not fully replicate the complexity of human interactions and research. The study emphasized the continued importance of human involvement in qualitative data collection. While limited by its sample size, the findings contribute valuable insights regarding the intersection of GenAI and experiential learning while highlighting areas for ethical caution.

This pioneering study bridges a gap in understanding the applications of GenAI-powered programs in enhancing graduate research-interview competencies. The findings have implications for various stakeholders, providing students and institutions with an informed perspective on integrating GenAI thoughtfully into pedagogical practices. For developers, the study offers insights into future refinements that could augment the tool's human likeness. Overall, by elucidating the role of GenAI technologies in enabling research-informed experiential learning, this study enriches the evolving discourse on GenAI-enabled pedagogy.

REFERENCES

Agarwal, P., Swami, S., & Malhotra, S. K. (2022). Artificial intelligence adoption in the post COVID-19 new-normal and role of smart technologies in transforming business: A review. Journal of Science and Technology Policy Management. Advance online publication. https://doi.org/10.1108/JSTPM-08-2021-0122

Ahn, J., & Park, H. O. (2023). Development of a case-based nursing education program using generative artificial intelligence. The Journal of Korean Academic Society of Nursing Education, 29(3), 234–246. https://doi.org/10.5977/jkasne.2023.29.3.234

Anastasopoulos, I., Sheel, S., Pardos, Z., & Bhandari, S. (2023). Introducing an open-source adaptive tutoring system to accelerate learning sciences experimentation. Proceedings of the Tenth ACM Conference on Learning & Scale, 251–253. https://doi.org/10.1145/3573051.3593399

Atlas, S. (2023). ChatGPT for higher education and professional development: A guide to conversational AI. University of Rhode Island. https://digitalcommons.uri.edu/cba_facpubs/548

Aung, Z. H., Sanium, S., Songsaksuppachok, C., Kusakunniran, W., Precharattana, M., Chuechote, S., Pongsanon, K., & Ritthipravat, P. (2022). Designing a novel teaching platform for AI: A case study in a Thai school context. Journal of Computer Assisted Learning, 38(6), 1714–1729. https://doi.org/10.1111/jcal.12706

Bahroun, Z., Anane, C., Ahmed, V., & Zacca, A. (2023). Transforming education: A comprehensive review of generative artificial intelligence in educational settings through bibliometric and content analysis. Sustainability, 15(17). https://doi.org/10.3390/su151712983

Berger, R. (2015). Now I see it, now I don't: Researcher's position and reflexivity in qualitative research. Qualitative Research, 15(2), 219–234.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

Britten, N. (1995). Qualitative research: Qualitative interviews in medical research. BMJ, 311(6999), 251–253.

Bronfenbrenner, U. (1979). The ecology of human development: Experiments by nature and design. Harvard University Press.

Brookfield, S. D. (2015). The skillful teacher: On technique, trust, and responsiveness in the classroom. John Wiley & Sons.

Brown, B., Jacobsen, M., Roberts, V., Hurrell, C., Travers, M., & Neutzling, N. (2024). Open educational practices (OEPs) for research skill development with in-service school teachers. In J. Willison (Ed.), Research thinking for responsive teaching: Research skill development with in-service and preservice educators, Springer Nature, 33–47. https://doi.org/10.1007/978-981-99-6679-0_3

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32–42.

Cheng, S.-C., Hwang, G.-J., & Chen, C.-H. (2019). From reflective observation to active learning: A mobile experiential learning approach for environmental science education. British Journal of Educational Technology, 50(5), 2251–2270. https://doi.org/10.1111/bjet.12845

Cohen, N., & Arieli, T. (2011). Field research in conflict environments: Methodological challenges and snowball sampling. Journal of Peace Research, 48(4), 423–435.

Cranton, P. (2006). Fostering authentic relationships in the transformative classroom. New Directions for Adult and Continuing Education, 2006(109), 5–13.

Creswell, J. W., & Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. Sage Publications.

Creswell, J. W., & Poth, C. N. (2016). Qualitative inquiry and research design: Choosing among five approaches. Sage Publications.

Dai, W., Lin, J., Jin, H., Li, T., Tsai, Y., Gašević, D., & Chen, G. (2023). Can large language models provide feedback to students? A case study on ChatGPT. 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), 323–325. https://doi.org/10.1109/ICALT58122.2023.00100

Dewey, J. (1986). Experience and education. The Educational Forum, 50(3), 241–252. https://doi.org/10.1080/00131728609335764

Eager, B., & Brunton, R. (2023). Prompting higher education towards AI-augmented teaching and learning practice. Journal of University Teaching & Learning Practice, 20(5), 02.

Emanuel, E. J., Wendler, D., & Grady, C. (2000). What makes clinical research ethical? JAMA, 283(20), 2701–2711.

Farazouli, A., Cerratto-Pargman, T., Bolander-Laksov, K., & McGrath, C. (2023). Hello GPT! Goodbye home examination? An exploratory study of AI chatbots impact on university teachers' assessment practices. Assessment & Evaluation in Higher Education, 1–13. https://doi.org/10.1080/02602938.2023.2241676

Felten, P. (2013). Principles of good practice in SoTL. Teaching and Learning Inquiry, 1(1), 121–125.

Fisher, R. J. (1993). Social desirability bias and the validity of indirect questioning. Journal of Consumer Research, 20(2), 303–315.

Fook, J. (2016). Critical reflectivity in education and practice. In J. Fook & B. Pease (Eds.), Transforming social work practice: Postmodern critical perspectives (2nd ed.), Routledge, 195–208.

Grassini, S. (2023). Shaping the future of education: Exploring the potential and consequences of AI and ChatGPT in educational settings. Education Sciences, 13(7). https://doi.org/10.3390/educsci13070692

Guillemin, M., & Gillam, L. (2004). Ethics, reflexivity, and “ethically important moments” in research. Qualitative Inquiry, 10(2), 261–280.

Halcomb, E. J., & Davidson, P. M. (2006). Is verbatim transcription of interview data always necessary? Applied Nursing Research, 19(1), 38–42.

Hassan, S. Z., Salehi, P., Røed, R. K., Halvorsen, P., Baugerud, G. A., Johnson, M. S., Lison, P., Riegler, M., Lamb, M. E., Griwodz, C., & Sabet, S. S. (2022). Towards an AI-driven talking avatar in virtual reality for investigative interviews of children. Proceedings of the 2nd Workshop on Games Systems, 9–15. https://doi.org/10.1145/3534085.3534340

Ipek, Z., Gözüm, A., Papadakis, S., & Kalogiannakis, M. (2023). Educational applications of ChatGPT, an AI system: A systematic review research. Educational Process, 12(3), 26–55. https://doi.org/10.22521/edupij.2023.123.2

James, N., Humez, A., & Laufenberg, P. (2020). Using technology to structure and scaffold real-world experiential learning in distance education. TechTrends, 64(4), 636–645. https://doi.org/10.1007/s11528-020-00515-2

Jantjies, M., Moodley, T., & Maart, R. (2018). Experiential learning through virtual and augmented reality in higher education. Proceedings of the 2018 International Conference on Education Technology Management, 42–45. https://doi.org/10.1145/3300942.3300956

Kanfer, R., & Ackerman, P. L. (1989). Motivation and cognitive abilities: An integrative/aptitude-treatment interaction approach to skill acquisition. Journal of Applied Psychology, 74(4), 657.

Kaplan-Rakowski, R., Grotewold, K., Hartwick, P., & Papin, K. (2023). Generative AI and teachers' perspectives on its implementation in education. Journal of Interactive Learning Research, 34(2), 313–338.

Kocaballi, A. B. (2023). Conversational AI-powered design: ChatGPT as designer, user, and product. arXiv Preprint.

Kolb, A. Y., & Kolb, D. A. (2005). Learning styles and learning spaces: Enhancing experiential learning in higher education. Academy of Management Learning & Education, 4(2), 193–212.

Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Prentice-Hall.

Kolb, D. A. (2014). Experiential learning: Experience as the source of learning and development (2nd ed.). Pearson Education.

Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Cambridge University Press.

Lewin, K. (1951). Field theory in social science: Selected theoretical papers (D. Cartwright, Ed.).

Malik, A., Khan, M. L., & Hussain, K. (2023). How is ChatGPT transforming academia? Examining its impact on teaching, research, assessment, and learning (SSRN Scholarly Paper 4413516). https://doi.org/10.2139/ssrn.4413516

Mayer, S., & Schwemmle, M. (2023). Teaching university students through technology-mediated experiential learning: Educators' perspectives and roles. Computers & Education, 207, 104923. https://doi.org/10.1016/j.compedu.2023.104923

Merriam, S. B., & Tisdell, E. J. (2016). Qualitative research: A guide to design and implementation (4th ed.). John Wiley & Sons.

Mhlanga, D. (2023). Open AI in education, the responsible and ethical use of ChatGPT towards lifelong learning (SSRN Scholarly Paper 4354422). https://doi.org/10.2139/ssrn.4354422

Ouyang, F., Zheng, L., & Jiao, P. (2022). Artificial intelligence in online higher education: A systematic review of empirical research from 2011 to 2020. Education and Information Technologies, 27(6), 7893–7925. https://doi.org/10.1007/s10639-022-10925-9

Owens, K. (2023). Employing artificial intelligence to increase occupational tacit knowledge through competency-based experiential learning. CEUR Workshop Proceedings. International Conference on Artificial Intelligence in Education (AIED), Tokyo, Japan.

Pateman, R. M., Dyke, A., & West, S. E. (2021). The diversity of participants in environmental citizen science. Citizen Science: Theory and Practice, 6. https://doi.org/10.5334/cstp.369

Patton, M. Q. (2002). Two decades of developments in qualitative inquiry: A personal, experiential perspective. Qualitative Social Work, 1(3), 261–283.

Piaget, J. (1970). Science of education and the psychology of the child (D. Coltman, Trans.). Orion.

Qadir, J. (2023). Engineering education in the era of ChatGPT: Promise and pitfalls of generative AI for education. 2023 IEEE Global Engineering Education Conference (EDUCON), 1–9. https://doi.org/10.1109/EDUCON54358.2023.10125121

Rudolph, J., Tan, S., & Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning and Teaching, 6(1), 342–363. https://doi.org/10.37074/jalt.2023.6.1.9

Safdar, N. M., Banja, J. D., & Meltzer, C. C. (2020). Ethical considerations in artificial intelligence. European Journal of Radiology, 122, 108768.

Saldaña, J. (2021). The coding manual for qualitative researchers. Sage Publications.

Sallam, M. (2023). The utility of ChatGPT as an example of large language models in healthcare education, research, and practice: Systematic review on the future perspectives and potential limitations. Healthcare, 11(6), 887. https://doi.org/10.20944/preprints202302.0356.v1

Schön, D. A. (1983). The reflective practitioner: How professionals think in action. Basic Books.

Shneiderman, B. (2022). Human-centered AI. Oxford University Press.

Smolansky, A., Cram, A., Raduescu, C., Zeivots, S., Huber, E., & Kizilcec, R. F. (2023). Educator and student perspectives on the impact of generative AI on assessments in higher education. Proceedings of the Tenth ACM Conference on Learning @ Scale, 378–382.

Sternberg, R. J., & Zhang, L. (2014). Perspectives on thinking, learning, and cognitive styles. Routledge.

Su, J., Zhong, Y., & Ng, D. T. K. (2022). A meta-review of literature on educational approaches for teaching AI at the K-12 levels in the Asia-Pacific region. Computers and Education: Artificial Intelligence, 3, 100065. https://doi.org/10.1016/j.caeai.2022.100065

Vygotsky, L. (1978). Interaction between learning and development. In M. Cole, V. John-Steiner, S. Scribner, & E. Souberman (Eds.), Mind in society: The development of higher psychological processes, Harvard University Press.

Wilson, J. P., & Beard, C. (2013). Experiential learning: A handbook for education, training and coaching. Kogan Page Publishers.

Yang, W. (2022). Artificial Intelligence education for young children: Why, what, and how in curriculum design and implementation. Computers and Education: Artificial Intelligence, 3, 100061. https://doi.org/10.1016/j.caeai.2022.100061

Yardley, S., Teunissen, P. W., & Dornan, T. (2012). Experiential learning: AMEE guide No. 63. Medical Teacher, 34(2), e102–e115.

Zimmerman, B. J. (2002). Achieving self-regulation. In T. Urdan & F. Pajares (Eds.), Academic motivation of adolescents, Information Age Publishing, 1–28.
