Michael Vallance, EdD, MSc, BSc (Hons), PGCE, is a professor in the Department of Media Architecture, Future University Hakodate, Japan. He has been involved in educational technology design, implementation, research, and consultancy for over 20 years, and has worked closely with higher education institutions, schools, and media companies in the UK, Singapore, Malaysia, and Japan. Vallance was awarded second place in the Distributed Learning category of the US Department of Defense (DoD) Federal Virtual Worlds Challenge for his research in virtual collaboration. His website is http://www.mvallance.net.

Abstract

The paper summarizes an educational researcher’s approach to determining the impact of active learning in disaster-themed 3D virtual worlds. The goal of the project is to advance students’ declarative, procedural, and meta-cognitive knowledge by implementing measurable robot-mediated interaction activities in 3D virtual worlds. Through the design and iterative development of unique ‘active learning’ activities for authentic international collaboration, the participants are able to synergize engineering and science academic content with their learning processes. In addition, as students actively participate in international 3D virtual tele-collaboration challenges, which include controlling basic robots within a simulated disaster zone, quantitative metrics of their programming skills and psychometric assessments of their declarative, procedural, and meta-cognitive knowledge can be collected. This will enable educators to quantify the impact of active learning.

KEY WORDS: virtual worlds; knowledge; education

1. INTRODUCTION

Anderson et al. (2001), developing the work of Bloom (1956), proposed a hierarchy of knowledge consisting of factual knowledge (relating to a specific discipline), declarative knowledge (the relationships between concepts that enable constituent parts to function as a whole), procedural knowledge (techniques and procedures, and when to use them), and meta-cognitive knowledge (knowledge of demands, strategies, and one’s own limitations). Learning science researchers have suggested, however, that the assessment-focused culture still prevalent in twenty-first-century education hinders learners’ progression from static declarative knowledge to active procedural knowledge and, subsequently, to meta-cognitive knowledge (Bachnik, 2003; Towndrow and Vallance, 2013). Moreover, Hase (2011, p. 2) states, “The acquisition of knowledge and skills does not necessarily constitute learning. The latter occurs when the learner connects the knowledge or skill to previous experience, integrates it fully in terms of value, and is able to actively use it in meaningful and even novel ways.”

One possible solution worth researching is to engage high school and undergraduate students by having them actively participate in international collaborative tasks that integrate the design, construction, and programming of robots in both the real world and a novel virtual world simulation. The theoretical foundations of collaboration lie in social constructivism, where personal meaning is constructed with others in a social space. Collaboration can promote creativity, critical thinking, dialogue, and initiative, can support deeper levels of knowledge generation, and, when conducted internationally, can address issues of culture (Vallance and Naamani, 2013). One outcome is the development of an essential twenty-first-century skill that Jukes et al. (2010, p. 66) term collaboration fluency: “Collaboration fluency is teamworking proficiency that has reached the unconscious ability to work cooperatively with virtual and real partners in an online environment to create original digital products.”

It is speculated that, through collaborative efforts, students will be incentivized to use knowledge gained in theoretical technology courses to solve challenges of increasing task complexity pragmatically (Barker and Ansorge, 2007; Vallance and Martin, 2012; Vallance et al., 2013). Confirming this requires researchers to measure specifically the students’ development of declarative (recall), procedural (apply and analyze), and meta-cognitive (understand) knowledge (Anderson et al., 2001; Schank, 2013). This can be undertaken by triangulating data on task complexity, pre- and post-task surveys, and analysis of digitally captured communication (Vallance et al., 2015).

2. JUSTIFICATION OF 3D VIRTUAL WORLD SIMULATIONS AND ROBOT-MEDIATED INTERACTIONS

Learning is considered to be a process whereby knowledge is created through the transformation of experience (Kolb, 1984). As reported in earlier iterations of this research (Vallance and Martin, 2012), deFreitas and Neumann (2009) suggest that the appeal, immersivity, and immediacy of virtual worlds can support this experiential learning, but that education requires a reconsideration of how, what, when, and where we learn. deFreitas and Neumann use Dewey’s concept of inquiry to posit that learners’ virtual experiences, their use of multiple media, the transactions and activities between peers, and the facilitation of learner control between them will lead to transactional learning, which “aims to support deeper reflection upon the practices of learning and teaching” and arguably leads to “wider opportunities for experiential learning” (deFreitas and Neumann, 2009, p. 346).

What is required, then, is an approach that encourages computational thinking (Wing, 2006): exploration; development of procedural knowledge; iterative, recursive, and logical thinking; structured task breakdown; and dealing with abstraction. An instructivist pedagogy does not support such an approach, so an alternative has to be sought. Prior successful projects have involved simulation and robotics. For instance, beginner programming concepts can be introduced and experienced using the graphical LEGO Mindstorms software, whose semiotic drag-and-drop graphical user interface has been shown to support programming (Lui et al., 2010).

Previous research has also determined that closed, highly defined tasks provide the comparability and empirical data necessary to determine the success of task completion (Vallance and Martin, 2012). To satisfy these criteria, the programming of a robot to navigate mazes of measurable complexity can be adopted (Olsen and Goodrich, 2003). Research can be designed to observe students communicating in a 3D virtual world while they program a robot to meet distinct challenges that, in turn, result in tangible and quantifiably measured outcomes.

Consequently, LEGO Mindstorms robot hardware and software are used in this research as tools to mediate the students’ communication. The research has been designed to collate data on students collaborating in 3D virtual worlds to program LEGO robots to navigate mazes successfully from start to finish, both in the physical world and simultaneously within the 3D virtual spaces. This is implemented online by students remotely located in Japan and the UK who (i) design circuits that necessitate the use of robot maneuvers and sensors and (ii) collaborate in a virtual world to solve predetermined tasks. The task solutions necessitate programming skills, collaboration, communication, and higher-level cognitive processes. It is posited that these experiences lead to personal strategies for teamwork, planning, organizing, applying, analyzing, creating, and reflecting.

LEGO EV3 robot programming components can be used to quantify task complexity and thus to iteratively increase the challenges given to high school and undergraduate university students. A 3D virtual simulation, in turn, provides interesting, engaging, and realistic yet safe contexts of the kind in which robots are ordinarily deployed, e.g., disaster recovery situations. Virtual simulations allow remotely located students to enter as avatars to communicate and collaborate with other students, and in-world data can be captured for analysis. Through active participation in online, international, collaborative challenges, it is anticipated that students will develop programming, design, and communication skills.

This section concludes with our research hypothesis: international collaboration in robot-mediated active learning interactions will significantly increase participants’ declarative, procedural, and meta-cognitive knowledge.

3. METHODOLOGY

A quasi-experimental, mixed-method design is being implemented. A triangulated data set (problem and solution measures, cognitive processes, and specific knowledge) is captured during task implementation so that the researchers can determine the factors that affect the development of declarative, procedural, and meta-cognitive knowledge. The advantage of this method is that researchers can quantify students’ experiences and correlate them with their task achievements. The methodology is summarized in Table 1.

TABLE 1: Methodology summary

| Instrument | Metric |
|---|---|
| 1. Task complexity (i.e., task problem and solution measures). Two dependent measures (task challenge and student skill) will be analyzed using a type-2, six-way mixed design ANOVA with the following independent variables: | Task fidelity, TF = RTC/CTC: the ratio of robot task complexity (RTC) to circuit task complexity (CTC), as defined below the table. |
| 2. Discourse analysis of screen captures of all simulated tasks in the 3D virtual space and video captures of all real-world tasks, to identify evidence of cognition associated with remember, understand, apply, analyze, evaluate, and create. | Cognitive descriptors of remember, understand, apply, analyze, evaluate, and create, as detailed in Anderson et al., 2001; Knipe and Lee, 2002; Martin and Vallance, 2008; and Vallance and Martin, 2012. |
| 3. Analysis of participants’ experiences using pre- and post-task surveys. | Knowledge descriptors of declarative (recall), procedural (apply), and meta-cognitive (understand) knowledge, as detailed in Anderson et al., 2001. |

Task fidelity equals robot task complexity divided by circuit task complexity:

$$TF = \frac{RTC}{CTC}$$

Circuit task complexity (adapted from Olsen and Goodrich, 2003):

$$CTC = \sum \left( N_d + N_m + N_o + N_s \right)$$

where $N_d$ = number of directions, $N_m$ = number of maneuvers, $N_o$ = number of obstacles, and $N_s$ = number of sensors.

Robot task complexity (adapted from Vallance et al., 2013):

$$RTC = \sum \left( M v_1 + S v_2 + SW + L v_3 \right)$$

where $M$ = number of moves (direction and turn), $S$ = number of sensors, $SW$ = number of switches, $L$ = number of loops, and $v$ = number of decisions required by the user for each programmable block, with $v_1 = 6$, $v_2 = 5$, and $v_3 = 2$.

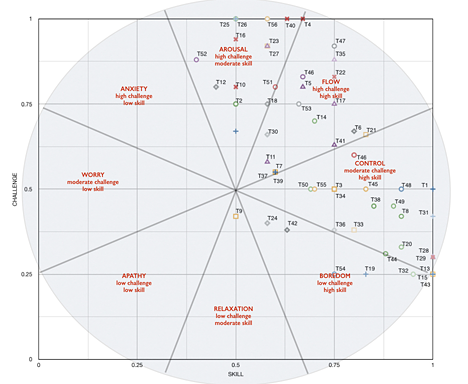
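The task fidelity definitions in Table 1 can be turned into a small calculation. The Python sketch below is illustrative only: it assumes that each summation runs over hypothetical circuit segments and program sections, and the class and function names (`CircuitSegment`, `ProgramSection`, `task_fidelity`, and so on) are invented for this example rather than taken from the project’s own tools. The weights v1 = 6, v2 = 5, and v3 = 2 follow Table 1.

```python
from dataclasses import dataclass
from typing import List

# Decision weights per programmable block, as given in Table 1.
V1 = 6  # applied to moves (direction and turn)
V2 = 5  # applied to sensors
V3 = 2  # applied to loops


@dataclass
class CircuitSegment:
    """Counts for one (hypothetical) section of the physical circuit."""
    directions: int  # N_d
    maneuvers: int   # N_m
    obstacles: int   # N_o
    sensors: int     # N_s


@dataclass
class ProgramSection:
    """Block counts for one (hypothetical) section of the robot program."""
    moves: int     # M
    sensors: int   # S
    switches: int  # SW
    loops: int     # L


def circuit_task_complexity(segments: List[CircuitSegment]) -> int:
    """CTC = sum over segments of (N_d + N_m + N_o + N_s)."""
    return sum(s.directions + s.maneuvers + s.obstacles + s.sensors
               for s in segments)


def robot_task_complexity(sections: List[ProgramSection]) -> int:
    """RTC = sum over sections of (M*v1 + S*v2 + SW + L*v3)."""
    return sum(p.moves * V1 + p.sensors * V2 + p.switches + p.loops * V3
               for p in sections)


def task_fidelity(segments: List[CircuitSegment],
                  sections: List[ProgramSection]) -> float:
    """TF = RTC / CTC; undefined when the circuit has zero complexity."""
    ctc = circuit_task_complexity(segments)
    if ctc == 0:
        raise ValueError("circuit task complexity is zero")
    return robot_task_complexity(sections) / ctc


if __name__ == "__main__":
    circuit = [CircuitSegment(directions=3, maneuvers=4, obstacles=2, sensors=1)]
    program = [ProgramSection(moves=4, sensors=1, switches=1, loops=1)]
    print(task_fidelity(circuit, program))
```

For a hypothetical circuit with 3 directions, 4 maneuvers, 2 obstacles, and 1 sensor (CTC = 10), and a program with 4 moves, 1 sensor, 1 switch, and 1 loop (RTC = 24 + 5 + 1 + 2 = 32), the sketch gives TF = 3.2. The weighting means that adding a sensor block raises RTC far more than adding a switch, reflecting the larger number of user decisions each sensor block requires.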

The research to date has developed a metric termed task fidelity (Vallance et al., 2015), defined as an indicator of the complexity of the circuit compared with the complexity of the program that completes the circuit. Although the development of task fidelity is beyond the scope of this paper (cf. Vallance et al., 2015), it has been determined that task fidelity is a useful indicator of the complexity of a task, and that a cognitive determiner of task fidelity is immersion (or flow). To calculate immersion/flow, the flow criteria of task challenge and skill from Pearce et al. (2005) have been used: “Amongst the various studies researching flow, an on-going issue has been to find a method for measuring flow independently from the positive states of consciousness (such as enjoyment, concentration, control, lack of self-consciousness, lack of distraction). One solution has been to use a measure of the balance between the challenge of an activity and the participant’s perception of their skill to carry out that activity” (Pearce et al., 2005, p. 250). This has been further developed in this research using the eight-channel model of flow by Carli et al. (1998). A summary of the flow data captured to date is presented in Section 5.

4. IMPLEMENTATION

4.1 Fukushima Disaster-Themed 3D Virtual Space

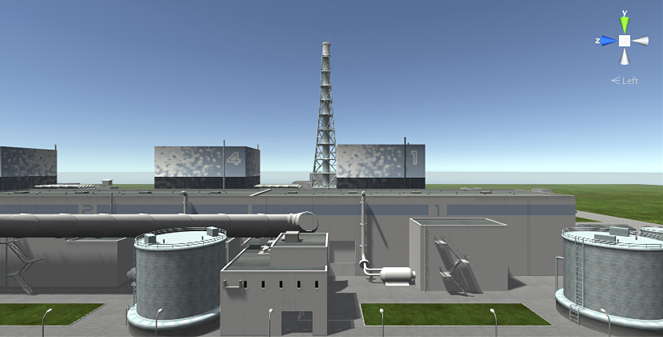

Metaverse designers have created a virtual Fukushima nuclear plant using the Unity 3D application (see Figure 1). The 3D space replicates the real-world Fukushima reactors with cooling towers as they were prior to the disaster of March 2011. One of the technical challenges was to program a virtual robot to move within the Fukushima space and have its maneuvers replicated in the real-world lab by a LEGO Mindstorms robot. This has been achieved within an OpenSim virtual world (Arnold, 2012) but remains a challenge within Unity 3D at the time of writing. As the project matures, it is anticipated that students and adults will gain hands-on experience moving around the simulated virtual Fukushima space while observing a physical robot simultaneously moving at a remote location (e.g., in a scaled mock-up of a hazardous building such as the reactor). It is further anticipated that such user-accessible simulations, with citizens controlling the virtual robot, will build awareness and understanding of disaster recovery, so that the public need not rely solely on retrospective information from unprepared experts (Guizzo, 2011). Consequently, as well as capturing data for analysis of cognitive processes, an affective aim is to familiarize students and the public with the complexities of nuclear power, given the considerable confusion about the situation in Japan at present (Vallance et al., 2013).

FIG. 1: Virtual Fukushima nuclear power plant

4.2 Training Area in OpenSim

Before entering the virtual Fukushima, students undertake tasks in the training area developed using OpenSim (see Figure 2). The learner-centered design approach (cf. Vallance, 2012) enabled a number of innovative tools to be created and customized by the students in OpenSim, for instance, the ability to move a graphical representation of the LEGO robot and leave a trace of the circuit in-world. In-world media objects can also display live-streamed video from the lab in Japan (using the online Bambuser service) and from an iPhone attached to the front of the LEGO robot (using the Bambuser iPhone app). Virtual notice boards can be updated with text and images to aid communication. Additional interactivity has been added in the form of debris that explodes when touched by the virtual robot. Although programming is mostly undertaken using the Mindstorms EV3 software, a LabVIEW VI program has been developed to enable direct communication between an in-world prim and the physical LEGO robot (see Figure 3).
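The in-world trace of a robot’s circuit can be illustrated with simple dead reckoning, which replays distance and turn commands as a sequence of 2D waypoints. This Python sketch is an assumption about how such a trace could be computed, not a description of the OpenSim or LabVIEW implementation; the command format `("fwd", distance)` / `("turn", degrees)` and the function `trace_path` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Command = Tuple[str, float]  # hypothetical format: ("fwd", distance) or ("turn", degrees)


def trace_path(commands: Sequence[Command],
               start: Tuple[float, float] = (0.0, 0.0),
               heading_deg: float = 0.0) -> List[Tuple[float, float]]:
    """Replay move/turn commands by dead reckoning and return the waypoints.

    Positive turn angles are counterclockwise (left), negative are clockwise
    (right). Headings are measured from the positive x-axis.
    """
    x, y = start
    heading = math.radians(heading_deg)
    points = [(x, y)]
    for kind, value in commands:
        if kind == "fwd":
            # Advance along the current heading and record a waypoint.
            x += value * math.cos(heading)
            y += value * math.sin(heading)
            points.append((x, y))
        elif kind == "turn":
            # Turning in place changes the heading but not the position.
            heading += math.radians(value)
        else:
            raise ValueError(f"unknown command: {kind!r}")
    return points


if __name__ == "__main__":
    square = [("fwd", 1.0), ("turn", 90.0)] * 4
    waypoints = trace_path(square)
    # A closed square should end (up to rounding error) where it started.
    print(len(waypoints), math.dist(waypoints[0], waypoints[-1]) < 1e-9)
```

Four repetitions of a 1-unit forward move followed by a 90-degree left turn trace a unit square and return to the starting point. A physical robot would deviate from these ideal waypoints because of wheel slip and turning error, so the in-world trace and the real-world path need not match exactly.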

FIG. 2: OpenSim training area

FIG. 3: Students remotely control a LEGO EV3 robot to navigate a circuit

To date, some tasks have involved Japanese students collaborating with other remotely located Japanese students, and others have involved Japanese students collaborating with UK students. Tasks have included maneuvering around obstacles using distance and turn commands, using touch sensors to navigate around obstacles, constructing a bridge and using touch sensors to move over obstacles, using light sensors to avoid obstacles, using sensors to locate items, and manipulating the tele-robotic controls to virtually maneuver our LEGO robot within the virtual space as part of “search and rescue” simulations. Communication between students has required the use of virtual world tools such as text panes, voice, live video streaming of the respective real-world labs, and 3D presentation boards on which images of Mindstorms programs were deposited. In-world avatars enabled students to maneuver a real-world robot remotely, and a Leap Motion controller was also set up for hands-free remote maneuvering of the EV3 robot. As previously reported by Vallance and Naamani (2013), “The developments have enabled students to actively engage in international collaboration, problem solving, construction of solutions, develop increasingly sophisticated communication and effective team working” (p. 63).

4.3 Robot-Mediated Interactions Using the Oculus Rift

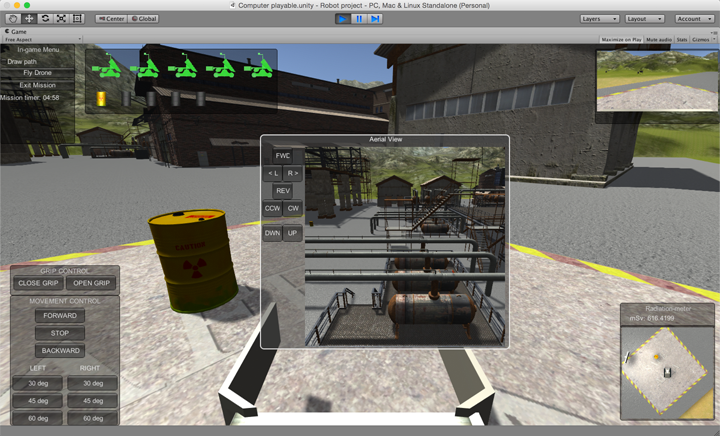

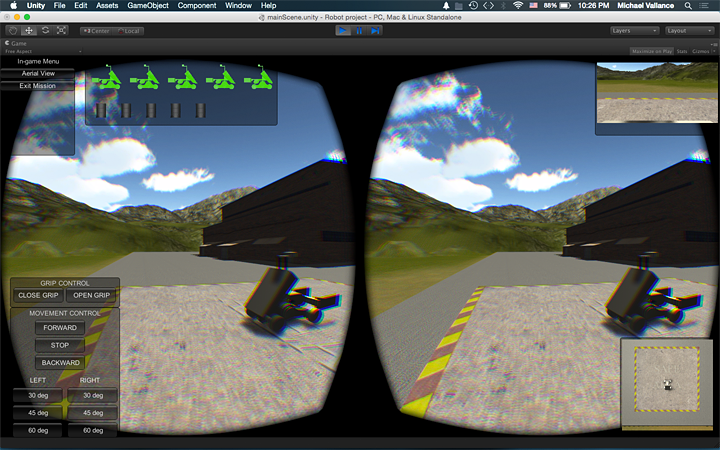

To engage the learners further in the active design and construction of their learning environment, both in the 3D space and in the real-world lab, a consultant Unity programmer was commissioned to initiate the design of an abandoned factory as the virtual space (see Figure 4). The rationale is to combine a 3D simulation space with real-world collaboration: in this case, teaching the programming of robots in the context of a simulated robot navigating within a restricted area. Student participants are presented with the following scenario:

- Four children have been admitted to hospital with apparent radiation sickness. They were playing in the old abandoned factory complex belonging to NEPCo (Nuclear Energy Production Company). Upon inspection, the factory area recorded a radiation value of 4.00 Sv/h. This is the same value as that recorded at Fukushima Reactor 1. Note that a dose of 0.75 Sv can be enough to induce radiation sickness. It therefore seems too dangerous to enter the factory complex. It is estimated that there are 5 radioactive bins within the complex. We are not sure where the radioactive bins are located and do not yet know why they have been dumped in the old factory complex. Your mission is to maneuver the robot and drone, locate the 5 radioactive bins, and return them to the designated safe area. Be careful! One wrong move can cause an explosion … and disaster!
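A rough worked estimate shows why entering the complex is ruled out. Assuming the 4.00 Sv/h reading is a constant dose rate $\dot{D}$, and reading the 0.75 figure as an accumulated dose $D$ in sieverts (a simplification that ignores shielding, distance from the source, and decay), the time needed to reach that dose is:

$$t = \frac{D}{\dot{D}} = \frac{0.75\ \text{Sv}}{4.00\ \text{Sv/h}} = 0.1875\ \text{h} \approx 11\ \text{min}$$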

FIG. 4: Abandoned factory scenario

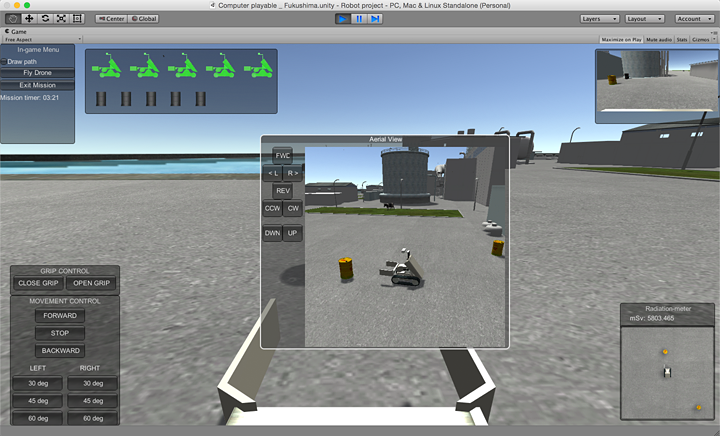

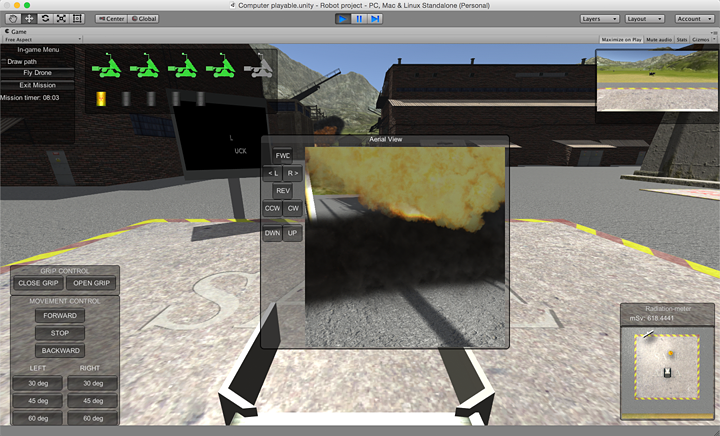

Students maneuver the virtual robot using the built-in controller to pick up radioactive bins (see Figure 5). A radiation meter in the bottom right corner indicates radioactivity levels, which give a clue as to how near the radioactive bins are. A rearview mirror for the robot controller is shown in the top right corner. A bird’s-eye view is offered via a virtual drone that can be maneuvered over the abandoned factory to seek out the radioactive bins. If the robot crashes, the radioactive bins explode (see Figure 6). On successful completion of the abandoned factory scenario, students are teleported to the Fukushima nuclear power plant scenario.
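How the in-game radiation meter is computed is not described here. One plausible approach, sketched below in Python purely as an assumption, sums an inverse-square contribution from each remaining bin, so the reading rises sharply as the robot approaches a bin. The function name `meter_reading`, its parameters, and the minimum-distance clamp are all hypothetical.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]


def meter_reading(robot: Point, bins: Iterable[Point],
                  source_strength: float = 1.0,
                  background: float = 0.0,
                  min_distance: float = 0.5) -> float:
    """Hypothetical meter value: background plus inverse-square terms.

    Each remaining bin contributes source_strength / d**2, where d is the
    straight-line distance from the robot. Distances are clamped to
    min_distance so the reading stays finite when the robot is on a bin.
    """
    total = background
    for bx, by in bins:
        d = max(math.hypot(bx - robot[0], by - robot[1]), min_distance)
        total += source_strength / d ** 2
    return total


if __name__ == "__main__":
    bins = [(10.0, 0.0), (0.0, 12.0)]
    for x in (0.0, 4.0, 8.0):
        print(x, round(meter_reading((x, 0.0), bins), 4))
```

Because intensity in this model falls with the square of the distance, halving the distance to a single bin quadruples its contribution, which is what makes such a meter a usable proximity clue alongside the drone’s overhead view.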

FIG. 5: Fukushima scenario

FIG. 6: Crashing the robot results in a disastrous explosion and the simulation is terminated

The project has also been developed to be viewable via the Oculus Rift 3D head-mounted display (see Figure 7) and uses the hands-free Leap Motion controller. This research anticipates commercial developments in virtual reality technologies that will enable users to log in to online 3D virtual spaces to engage and collaborate with other, remotely located participants. Such technologies include not only head-mounted displays, exemplified in this research by the Oculus Rift, but also immersive virtual reality environments known as CAVEs (cave automatic virtual environments). The maturation of immersive technologies has profound implications for the design and implementation of online educational environments, and for the pedagogies and active learning that follow from them.

FIG. 7: The scenario is also viewed via the Oculus Rift 3D head-mounted display

Data is currently being collected as students in Japan and the UK participate in tasks requiring collaboration and communication. A comparison between the Fukushima scenario and the abandoned factory scenario will also be investigated.

5. DISCUSSION

To capture data immediately after the completion of a task, while the students were still in communication with their collaborators in the virtual world, the students reported on the task’s challenge and on their skill in attempting the task. For “challenge,” they reported whether they considered the task difficult, demanding, manageable, or easy. For “skill,” they reported whether they considered their ability to undertake the task hopeless, reasonable, competent, or masterful (Pearce et al., 2005). Once the task had been completed, students logged out of the virtual world, and a general discussion of the task process and its outcome was held locally with the researchers. Immersion was then graphically illustrated using the eight-channel model of flow of Carli et al. (1998): arousal, flow, control, boredom, relaxation, apathy, worry, and anxiety.
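Assigning a task to one of the eight channels can be sketched as follows. This Python sketch assumes an ordinal coding of the self-report labels (easy < manageable < demanding < difficult; hopeless < reasonable < competent < masterful), centers each task’s ratings on the participant’s own mean, and assigns a channel by the angle of the resulting point in 45-degree sectors, following the usual layout of the Carli et al. (1998) model (skill on the horizontal axis, challenge on the vertical). Carli et al. worked with standardized scores; this simplified version uses raw deviations and has no dead zone around the mean, so it is an illustration rather than the analysis used in this research. All names are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Assumed ordinal coding of the self-report labels (1 = lowest, 4 = highest).
CHALLENGE = {"easy": 1, "manageable": 2, "demanding": 3, "difficult": 4}
SKILL = {"hopeless": 1, "reasonable": 2, "competent": 3, "masterful": 4}

# Eight 45-degree sectors, counterclockwise from the positive skill axis.
CHANNELS = ["control", "flow", "arousal", "anxiety",
            "worry", "apathy", "boredom", "relaxation"]


def flow_channel(challenge: float, skill: float,
                 mean_challenge: float, mean_skill: float) -> Optional[str]:
    """Map one task's ratings to a channel relative to the participant mean."""
    dx = skill - mean_skill          # horizontal axis: skill
    dy = challenge - mean_challenge  # vertical axis: challenge
    if dx == 0 and dy == 0:
        return None  # exactly at the mean: no channel in this simplified sketch
    angle = math.degrees(math.atan2(dy, dx)) % 360
    # Shift by half a sector so each channel is centered on its axis direction.
    return CHANNELS[int(((angle + 22.5) % 360) // 45)]


def classify_tasks(reports: List[Tuple[str, str, str]]) -> Dict[str, Optional[str]]:
    """reports: (task_id, challenge_label, skill_label) for one participant."""
    challenges = [CHALLENGE[c] for _, c, _ in reports]
    skills = [SKILL[s] for _, _, s in reports]
    mc, ms = mean(challenges), mean(skills)
    return {task: flow_channel(c, s, mc, ms)
            for (task, _, _), c, s in zip(reports, challenges, skills)}


if __name__ == "__main__":
    demo = [("T1", "difficult", "masterful"),
            ("T2", "easy", "hopeless"),
            ("T3", "manageable", "reasonable")]
    print(classify_tasks(demo))
```

Centering on each participant’s own mean means that “flow” is relative: a task lands in the flow channel when both its challenge and the participant’s skill rating are above that participant’s typical levels, not when they exceed some fixed threshold.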

Vallance et al. (2015) reported earlier iterations of the research. The participants were undergraduate students studying media architecture at a Japanese university focused on systems information science (n = 6) and A-level students in the UK studying science-based subjects (n = 10). Of the 16 participating students, two were female and 14 were male; all were between 17 and 19 years of age, and none had prior experience working with the project’s technologies. A total of 56 robot tasks were undertaken and analyzed.

Students’ immersion in each task was calculated and graphically represented on the eight-channel model of flow of Carli et al. (1998) (see Figure 8). Tasks in which participants were considered to be immersed (in a state of flow) were T5, T6, T7, T11, T14, T17, T18, T21, T22, T30, T35, T39, and, latterly, T47, T46, T41, and T53. These selected tasks of optimal flow are summarized in Table 2. Detailed task designs and learning analysis are beyond the scope of this paper but are explained in Vallance et al. (2015).

FIG. 8: Data of flow in tasks 1–56

TABLE 2: Selected tasks of optimal flow

| Task | Summary |
|---|---|
| T5 | Program the LEGO robot to navigate a specified circuit around obstacles. JPN teaching JPN. |
| T6 | Program the LEGO robot to navigate a specified circuit around obstacles. JPN teaching JPN. |
| T7 | Program the LEGO robot to navigate a specified circuit around obstacles using touch sensors. Locate and press OFF switch. JPN teaching JPN. |
| T11 | Program the LEGO robot to maneuver a robotic arm. JPN preparation. |
| T14 | Programming LabVIEW for remote control of LEGO robot via OpenSim virtual robot. |
| T17 | Suika robot. Rotate robot + follow line + use sensors to slice an object. JPN preparation. |
| T21 | Suika robot. Rotate robot + follow line + use sensors to slice an object. JPN teaching UK. |
| T22 | Programming LabVIEW for remote control of LEGO robot via OpenSim virtual robot for search and rescue. |
| T30 | Program the LEGO robot to move forward (fwd) + sound sensor + stop + turn 90 deg + move fwd. JPN teaching JPN. |
| T35 | Program the LEGO robot to move fwd + turn left + move fwd + turn left + move fwd + turn left + move fwd + turn right + move fwd + turn right + move fwd + stop. Turns are not 90 degrees (i.e., Pathfinder circuit). |
| T37 | Program the LEGO robot to move fwd + turn left + move fwd + turn left + move fwd + turn left + move fwd + turn right + move fwd + turn right + move fwd + stop (Pathfinder circuit). Use of color sensor. |
| T39 | Design and assemble a unique LEGO robot. Program the LEGO robot to move from start point + a sensor + a touch sensor + a move to an exact goal. |
| T41 | Design and assemble a unique LEGO robot. Program the LEGO robot to move two green objects from danger area to safe area. Use of sensors. |
| T46 | Design and assemble a unique LEGO robot. Program the LEGO robot to move two objects from danger area to safe area. Use of sensors. Fastest time. JPN teaching UK. |
| T47 | Design and assemble a unique LEGO robot. Program the LEGO robot to lift one object from danger area to safe area. Use of sensors (including gyro). |
| T53 | Design and assemble a unique LEGO robot. Program the LEGO robot to move two green objects from danger area to safe area. Do not move the two red objects. Use of sensors. |

The task summaries show that a variety of sensors were used in these tasks. As the tasks became more complex, according to our robot task complexity criteria (cf. Vallance et al., 2015), the students indicated that, even though they considered the tasks demanding, they deemed their skills to be competent, suggesting some degree of development. However, in later tasks, the students revealed that as the level of challenge increased (from manageable to difficult), their skill level in seeking successful outcomes decreased (from competent to reasonable). The task communication transcripts and screen captures suggest that the students had to draw on different procedural knowledge, for instance, programming a touch sensor to coordinate with a motor action. These later tasks required students to analyze and create unique solutions based on their prior task experiences and were thus deemed the most challenging. The increased task complexity necessitated a higher level of programming skill incorporating sensor variables and loops. Although students’ post-task reflection data revealed that they found sensor-related tasks difficult, being immersed in a task appeared to lead to more active learning and, in turn, to greater student success.

To conclude this section, it is acknowledged that research into the efficacy of online 3D virtual collaborations for effective learning must be continued in order to determine its value to educators and learners: “Even though video captures of robot movements and the online collaboration, transcriptions of in-world communication, and post-task reflections are collated as data for this research, at present the data only superficially demonstrates a development of procedural knowledge and collaborative fluency” (Vallance and Naamani, 2013, p. 72).

6. CONCLUSION

In conclusion, this research is an attempt to quantify the impact of active learning. As students actively participate in online, international, 3D virtual tele-collaboration challenges, which include controlling basic robots within a simulated disaster zone, the research aims to capture quantitative metrics of their programming skills and psychometric assessments of their declarative, procedural, and meta-cognitive knowledge. This is being undertaken through the design and iterative development of unique 3D virtual world active learning activities for authentic, online, international collaboration, in which participants are able to synergize engineering and science academic content with their learning processes. Much more work needs to be done, and the evidence for the research hypothesis remains inconclusive. However, for 3D virtual worlds to have an impact on education, quantifiable metrics of learning are required. This research project is an attempt to deliver those metrics.

ACKNOWLEDGMENTS

This material is based on work supported by JAIST “kakenhi” Grant No. 15K01080 and Future University Hakodate “tokubetsu kenkyuhi” Grant No. 2014B02. Many thanks to students at Future University Hakodate, Japan, and to physics teacher Malcolm Thomas and pupils at Mountain Ash Comprehensive School, South Wales, UK. Credit is given to the metaverse designers at Reaction Grid. Gratitude to Glenn Mallon and Gabriel Campitelli for their programming expertise.

REFERENCES

Anderson, L.W., Krathwohl, D.R., Airasian, P.W., Cruikshank, K.A., Mayer, R.E., Pintrich, P.R., Raths, J., and Wittrock, M.C., A Taxonomy for Learning, Teaching and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives, Longman, New York, 2001.

Arnold, L., Controlling Robots 6,000 Miles Away? No Problem! National Instruments Developer Zone, 2012, Accessed March 24, 2015; https://decibel.ni.com/content/groups/sweet-apps/blog/2012/03/15/controlling-robots-6000-miles-away-no-problem.

Barker, S.B. and Ansorge, J., Robotics as Means to Increase Achievement Scores in an Informal Learning Environment, J. Res. Technol. Educ., vol. 39, no. 3, pp. 229–243, 2007.

Battro, A.M., Fischer, K.W., and Lena, P.J., The Educated Brain: Essays in Neuroscience, Cambridge University Press, London, 2011.

Bloom, B.S. (ed.), Taxonomy of Educational Objectives, the Classification of Educational Goals, Handbook 1: Cognitive Domain, McKay, New York, 1956.

Carli, M., Delle Fave, A., and Massimini, F., The Quality of Experience in the Flow Channels, Csikszentmihalyi, M. and Csikszentmihalyi, I.S. (eds.), Optimal Experience: Psychological Studies of Flow in Consciousness, Cambridge University Press, New York, 1998.

deFreitas, S. and Neumann, T., The Use of ‘Exploratory Learning’ for Supporting Immersive Learning in Virtual Environments, Comput. Educ., vol. 52, pp. 343–352, 2009.

Guizzo, E., Fukushima Robot Operator Writes Tell-All Blog, IEEE Spectrum, August 23, 2011, Accessed August 20, 2012; http://spectrum.ieee.org/automaton/robotics/industrial-robots/fukushima-robot-operator-diaries.

Hase, S., Learner Defined Curriculum: Heutagogy and Action Learning in Vocational Training, SIT J. Appl. Res. (Special Edition), pp. 1–10, 2011; Accessed May 16, 2016; http://sitjar.sit.ac.nz/SITJAR/Special/.

Jukes, I., McCain, T., and Crockett, L., Understanding the Digital Generation, Sage, Thousand Oaks, CA, 2010.

Knipe, D. and Lee, M., The Quality of Teaching and Learning via Videoconferencing, Br. J. Educ. Technol., vol. 33, no. 3, pp. 301–311, 2002.

Kolb, D.A., Experiential Learning: Experience as the Source of Learning and Development, Prentice-Hall, Englewood Cliffs, NJ, 1984.

Lui, A.K., Ng, S.C., Cheung, H.Y., and Gurung, P., Facilitating Independent Learning with LEGO Mindstorms Robots, ACM Inroads, vol. 1, no. 4, pp. 49–53, 2010.

Martin, S. and Vallance, M., The Impact of Synchronous Inter-Networked Teacher Training in Information and Communication Technology Integration, Comput. Educ., vol. 51, pp. 34–53, 2008.

Olsen, D.R. and Goodrich, M.A., Metrics for Evaluating Human-Robot Interactions, 2003, Accessed March 14, 2009; http://icie.cs.byu.edu/Papers/RAD.pdf.

Pearce, M., Ainley, M., and Howard, S., The Ebb and Flow of Online Learning, Comput. Hum. Behav., vol. 21, pp. 745–771, 2005.

Schank, R., Teaching Minds: How Cognitive Science Can Save Our Schools, Teachers College Press, New York, 2013.

Towndrow, P.A. and Vallance, M., Making the Right Decisions: Leadership in 1-to-1 Computing in Education, Int. J. Educ. Man., vol. 27, no. 3, pp. 260–272, 2013.

Vallance, M., Design and Robots for Learning in Virtual Worlds, Khan, B. (ed.), User Interface Design for Virtual Environments: Challenges and Advances, McWeadon Education, Hershey, PA, pp. 268–284, 2012.

Vallance, M. and Martin, S., Assessment and Learning in the Virtual World: Tasks, Taxonomies and Teaching for Real, J. Virtual Worlds Res., vol. 5, no. 2, pp. 1–13, 2012.

Vallance, M. and Naamani, C., Experiential Learning, Virtual Collaboration and Robots, Jerry, P., Tavares-Jones, N., and Gregory, S. (eds.), Riding the Hype Cycle: The Resurgence of Virtual Worlds, Inter-Disciplinary Press, Oxford, UK, 2013.

Vallance, M., Naamani, C., Thomas, M., and Thomas, J., Applied Information Science Research in a Virtual World Simulation to Support Robot Mediated Interaction Following the Fukushima Nuclear Disaster, Commun. Info. Sci. Man. Eng., vol. 3, no. 5, pp. 222–232, 2013.

Vallance, M., Martin, S., and Naamani, C., A Situation that We Had Never Imagined: Post-Fukushima Virtual Collaborations for Determining Robot Task Metrics, Int. J. Learning Technol., vol. 10, no. 1, pp. 30–49, 2015.

Wing, J.M., Computational Thinking, Commun. ACM, vol. 49, no. 3, pp. 33–35, 2006.
