SEVEN YEARS OF ONLINE PROJECT-BASED LEARNING AT SCALE

Georgia Institute of Technology, Atlanta, Georgia 30332-0383, USA

*Address all correspondence to: Chaohua Ou, Georgia Institute of Technology, Atlanta, GA 30332-0383, USA, E-mail: cou@gatech.edu

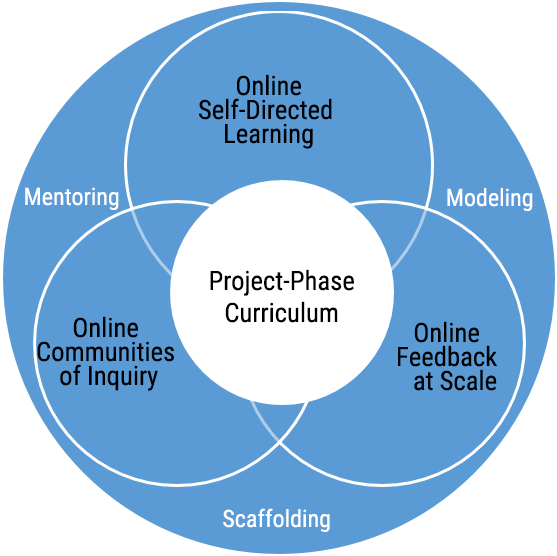

A substantial body of literature has documented the effectiveness and benefits of well-implemented project-based learning (PjBL), yet its success can vary significantly with contextual factors and approaches. Thus, design guidelines generated from research need to be adapted and evaluated in various learning environments. Prevailing guidelines predominantly cater to PjBL in traditional residential education, and research into online PjBL remains limited, often involving one-off, noniterative experiments in small classes. This study addresses these gaps by implementing a comprehensive PjBL model in a large online graduate course in computer science. The model integrates a project-phase curriculum, online self-directed learning, online communities of inquiry, and online feedback at scale, supported by scaffolding, modeling, and mentoring. Its effectiveness was assessed through student surveys spanning seven years. Survey results (n = 2179) revealed consistently positive perceptions of the course's efficacy. Notably, older students and female students, as well as those who identified their project topics earlier in the course, rated course effectiveness more highly. This paper shares the detailed implementation of the model, the survey findings, and student perspectives on how to succeed in online PjBL. The implications of the study for future research and practice are also discussed.

KEY WORDS: project-based learning (PjBL), online learning at scale, learning communities, pedagogical issues, teaching and learning strategies

1. INTRODUCTION

Project-based learning (PjBL) is “a systematic teaching method that engages students in learning knowledge and skills through an extended inquiry process structured around complex, authentic questions and carefully designed projects and tasks” (Markham et al., 2003, p. 4). Although other definitions exist, they all emphasize two essential elements: (1) a driving question or problem and (2) the production of one or more artifacts that address the question or problem (Blumenfeld et al., 1991; Morgan, 1984). The second element is regarded as what distinguishes project-based learning from problem-based learning, as the latter does not necessarily require constructing a concrete learning artifact (Helle et al., 2006). PjBL also differs from simply “doing projects,” which has long been included as a learning activity in many classes. In PjBL, projects are “central, not peripheral to the curriculum” (Thomas, 2000, p. 3), guiding the instruction of an entire course instead of a single course unit.

PjBL has been widely used across disciplines in K-12 and postsecondary settings for several decades. As a result, there is a large body of research literature on its design principles, implementation, challenges, practices, and efficacy. A substantial body of literature indicates that well-implemented PjBL has positive effects on students' motivation, engagement, self-regulated learning, collaborative learning, problem-solving skills, academic achievement, and satisfaction (Chen & Yang, 2019; Condliffe et al., 2017; Helle et al., 2006; Thomas, 2000). Nevertheless, planning and enacting PjBL is challenging. This learner-centered, inquiry-based approach to education demands a drastic shift in the teaching and learning paradigm (Ravitz, 2010). It requires a dramatic departure from traditional teacher-led knowledge transmission toward student-driven knowledge construction through the investigation of authentic problems (Condliffe et al., 2017; Helle et al., 2006; Thomas, 2000). Hence, the effectiveness of PjBL often hinges on the depth and quality of implementation. Because PjBL implementation can vary significantly across contexts and approaches, design principles generated from research need to be adapted and evaluated in different learning environments (Condliffe et al., 2017; Thomas, 2000).

The COVID-19 pandemic forced educational institutions worldwide to switch quickly from in-person instruction to online teaching at an unprecedented scale. As people return to offices, schools, and classrooms and adjust to the widespread impacts of the pandemic, it is clear that many have quickly adapted to and accepted online learning. A survey of 1286 faculty and administrators from higher education institutions and 1469 students across forty-seven US states revealed changing attitudes toward online learning postpandemic: 58% of faculty expressed increased optimism, and 73% of students preferred some fully online courses (Seaman, 2021). Now more than ever, we need to reimagine PjBL, as educators and curriculum developers face immense challenges in designing or redesigning PjBL for online or hybrid learning environments. However, currently established guidelines are primarily oriented toward PjBL in K-12 and postsecondary residential education, where students meet regularly with their teachers and peers in physical locations and work closely on their projects (Condliffe et al., 2017; Larmer, 2020; Larmer & Mergendoller, 2010; Larmer et al., 2015; Thomas, 2000). Evidence is sparse on how PjBL could be implemented, and perhaps even scaled up, in online higher education, despite the rapid growth and expansion of online learning during the past decade.

We seek to address this gap and improve our understanding of PjBL in online education by leveraging technologies to implement PjBL in a large online graduate class. The implementation was accomplished through the rigorous application of a model composed of four key components: project-phase curriculum, self-directed online learning, online communities of inquiry, and online feedback at scale. These components are integrated with three instructional strategies: scaffolding, modeling, and mentoring. We evaluated the effectiveness of this model through surveys among graduate students for twenty-one consecutive semesters from 2015 to 2022. This paper shares the detailed implementation of PjBL based on the model, the survey findings, and students' perspectives on how to succeed in online PjBL.

2. LITERATURE REVIEW

Several reviews have been conducted during the past two decades to synthesize and summarize the underpinnings, development, and future directions of research on PjBL (Chen & Yang, 2019; Condliffe et al., 2017; Guo et al., 2020; Helle et al., 2006; Thomas, 2000).

Thomas (2000) reviewed studies in K-12 settings published before 2000. He noted that it was difficult to generalize the effectiveness of PjBL from the existing research because there was no uniform vision of what constitutes PjBL. As such, Thomas tried to distinguish PjBL from other instructional methods by establishing several criteria. He called for a theory providing guiding principles for PjBL, built on further research yielding more evidence of its effectiveness. He also recommended that more attention be paid to whether PjBL is more or less effective for specific student subgroups that differ in “age, sex, demographic characteristics, ability, and a host of dispositional and motivational variables” (p. 20).

After reviewing studies published between 2000 and 2017 on PjBL in K-12 settings, Condliffe et al. (2017) indicated that much had changed in PjBL practice and research since the publication of Thomas' (2000) review. New approaches to support PjBL implementation had emerged, and a significant body of research on the implementation and effectiveness of PjBL had accumulated. Nevertheless, the researchers noted that these studies did not share common design principles, making it difficult to conclude which principles work well and which do not. Therefore, they considered it a top priority for the PjBL community to develop and refine design principles that can be evaluated in different education settings. The review also reiterated the importance of investigating the effects of PjBL on specific student subgroups because related research evidence is “too thin to support any conclusions” (p. 49). The authors specifically called for future studies focused on underserved student populations, as PjBL is theorized to be effective for these students, who typically have had fewer opportunities in traditional educational settings. Interestingly, a recent study revealed that women in engineering reported more positive benefits from PjBL than men (Vaz et al., 2023), which is perhaps unsurprising, as PjBL possesses the characteristics of gender-inclusive curriculum and pedagogy (Brotman & Moore, 2008). Further studies are needed to examine how and why a specific PjBL approach would benefit certain student subgroups.

Helle et al. (2006) systematically reviewed twenty-eight studies published from 1966 to 2001 on PjBL in postsecondary education. They found that most of the studies were mainly course descriptions of PjBL implementation and did not reference a pedagogical or psychological framework as a rigorous research basis. Where such a framework was provided, it was mostly “poorly articulated or conceptualized” (p. 300). Therefore, the authors called for more theoretically grounded research on the topic. Another issue they noted was the assessment of PjBL. Assessing PjBL became complicated because the goals of the courses in the studies tended to be manifold and the course objectives were broad. The researchers recommended that goals and objectives be clearly defined and aligned with learning activities. While the accuracy of assessment is undoubtedly important, the researchers stressed that it was of utmost importance that students have multiple opportunities for formative assessment and revision.

Chen and Yang (2019) revisited the effectiveness of PjBL in both K-12 and postsecondary contexts in a meta-analysis of thirty studies published between 1998 and 2017. The analysis concluded that PjBL was much more effective than traditional instruction in enhancing students' academic achievement. Several factors were found to affect the effectiveness of PjBL, including subject area, school location, instruction hours, and use of technology. No significant differences in the effects of PjBL were found across educational settings or student group sizes. The authors acknowledged that the success of PjBL might depend on other factors, such as students' age and ability, as well as project scaffolds and structure, and they called for further analysis to evaluate the effects of these factors.

Guo et al. (2020) reviewed seventy-six empirical studies published before September 2019, focusing on student outcomes and measures. Four types of outcomes, namely cognitive, affective, and behavioral outcomes and artifact performance, were often measured by five categories of instruments: questionnaires, rubrics and taxonomies, interviews, tests, and self-reflection journals. The researchers concluded the review with three recommendations: (1) more studies should be conducted to evaluate students' learning processes and learning artifacts; (2) the quality of measurement instruments should be reported; and (3) more experimental research should be conducted to determine the effects of PjBL on student learning.

None of the above reviews distinguished among the learning environments (i.e., in-person, hybrid, or online) of the PjBL studies they examined. We searched for empirical studies on online PjBL in higher education published between 2000 and 2022 and found twelve relevant studies. To be classified as empirical, a study had to collect and report some form of data (e.g., pretests, post-tests, surveys, interviews, learning artifacts). A summary of the twelve studies can be found in Appendix A. The review reveals the current status of online PjBL research as follows:

- Online PjBL occurred in both online and in-person classes: Eight of the fourteen courses that participated in these studies were offered online. Five courses were offered in person with online PjBL activities, and one did not specify the course delivery mode.

- Classes using online PjBL were small: The enrollment ranged from eleven to eighty-two, and the median class size was thirty-four.

- Duration of the studies varied but was often short: The studies ranged from four weeks to a semester and were conducted in a one-off and noniterative manner.

- Most of the courses involved were in the education discipline: Eight of the twelve studies involved education courses, specifically educational technology, instructional design, or educational media.

- Online discussion was the main PjBL activity: Online discussion was most often used for collaboration within a student group or between groups. Therefore, online discussion postings became the main source of data for assessing online PjBL.

Thus, three major research gaps can be identified:

- Longitudinal studies on PjBL with large online classes are scarce, especially in disciplines beyond education.

- A comprehensive pedagogical framework for systematically designing and implementing online PjBL is conspicuously lacking.

- The effects of PjBL on subgroups of online students remain significantly understudied.

We addressed these gaps by exploring how to implement and scale up PjBL in a large online graduate course through a rigorous application of an online PjBL model. The model integrates established learning theories, instructional strategies, learning technologies, and project management principles. We examined the effectiveness of this model through student surveys. The study was designed to address the following three research questions:

- How do students perceive the course effectiveness, and how do their perceptions vary based on age, gender, and educational level?

- To what extent does students' online program experience influence their project decision, and how does this subsequently affect their perceived course effectiveness?

- What strategies do students believe contribute to success in online project-based learning?

3. AN ONLINE PROJECT-BASED LEARNING MODEL

3.1 About the Online Course

The course in this study is offered three semesters a year as part of an online graduate program in computer science at a major public research university in the United States. The spring and fall semesters are 17 weeks long, and the summer semester is 12 weeks. The course was first offered in fall 2015, and a total of 3340 students had completed it by fall 2022. Enrollment ranged from 58 to 262 students per semester, with a median of 171. The teaching team consists of an instructor and teaching assistants (TAs), with a TA-to-student ratio of approximately 1:20. Although the course subject, educational technology, is similar to those of the courses in previous studies on online PjBL, the demographics of its students were quite different. All students majored in computer science, not education, and most were full-time working professionals located throughout and outside the United States. Detailed demographics of the students are provided in the Materials and Methods section.
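The staffing implied by these figures can be estimated with simple arithmetic. The sketch below is a back-of-envelope illustration only: it assumes each mentor's caseload is capped at twenty students (the approximate 1:20 ratio) and borrows the average of 30 minutes per student per week reported in Section 3.2. The function names are hypothetical, and the actual number of TAs in each semester is not reported here.

```python
import math

# Assumed planning figures taken from the course description; real staffing
# may not follow the 1:20 ratio exactly in every semester.
STUDENTS_PER_MENTOR = 20  # approximate TA-to-student ratio of 1:20
MINUTES_PER_STUDENT = 30  # average weekly mentor time per student


def mentors_needed(enrollment: int, students_per_mentor: int = STUDENTS_PER_MENTOR) -> int:
    """Smallest number of mentors that keeps every caseload at or below the ratio."""
    return math.ceil(enrollment / students_per_mentor)


def weekly_mentor_hours(caseload: int, minutes_per_student: int = MINUTES_PER_STUDENT) -> float:
    """Weekly hours one mentor spends on a caseload of the given size."""
    return caseload * minutes_per_student / 60


if __name__ == "__main__":
    # Smallest, median, and largest enrollments reported for the course
    for label, enrollment in [("smallest", 58), ("median", 171), ("largest", 262)]:
        print(f"{label} section ({enrollment} students): {mentors_needed(enrollment)} mentors")
    print(f"hours per mentor per week at 20 students: {weekly_mentor_hours(20)}")
```

Under these assumptions, the median enrollment of 171 calls for nine mentors, each spending roughly ten hours a week on feedback, while the range of 58 to 262 students spans three to fourteen mentors.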

This asynchronous online course introduces students to a variety of topics on educational technology. It is built around a semester-long project defined by students, with the goal that they be able to make a real contribution to the field of educational technology through a project they develop. Students choose one of three project tracks (i.e., research, development, or education) to work on individually or collaboratively. At the end of the semester, students deliver a project, a paper, and a presentation within the project track they chose.

3.2 Four Core Components

The course instructor developed and employed an online PjBL model that integrates curriculum, instruction, and assessment to facilitate and support the PjBL in this online course. The model consists of four core components (see Fig. 1):

FIG. 1: Online project-based learning model

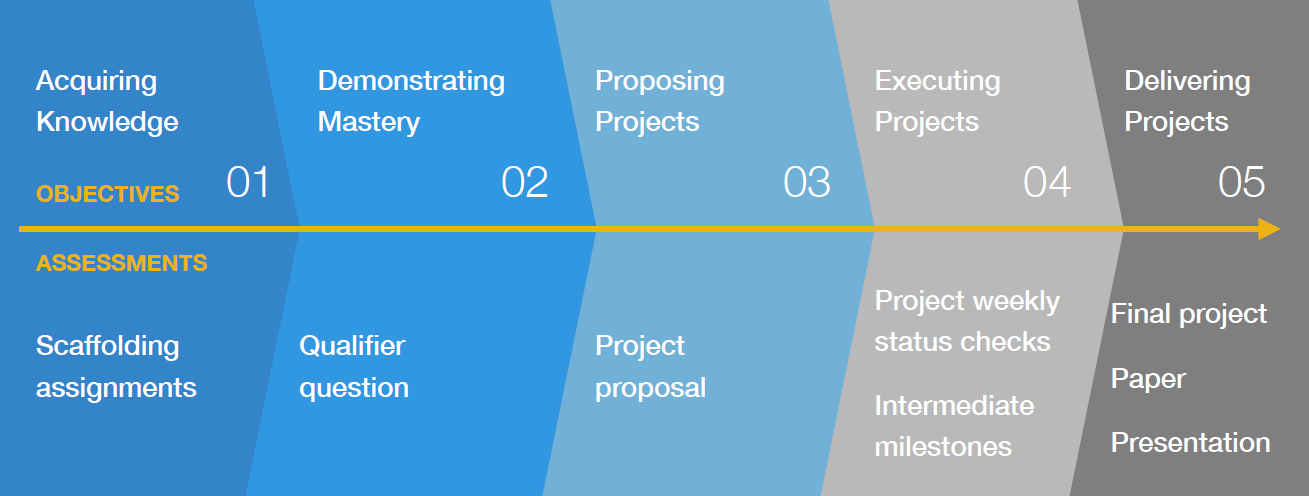

- Project-Phase Curriculum: PjBL provides students with opportunities and freedom to choose and decide what to work on, how to work, and what products to deliver (Blumenfeld et al., 1991). Consequently, students must take responsibility for their projects while the teacher takes on the roles of project advisor and product evaluator (Helle et al., 2006). These new roles pose challenges to both students and teachers who may not have the necessary training in project management. Project management is a systematic approach to executing a project through the phases of initiation, planning, design, execution, commissioning, and closing. Breaking down the execution of a complex project into multiple phases could help achieve project success within the constraints of time, cost, and quality (Packendorff, 1995; Turner & Müller, 2003). The course employs a five-phase structure (see Fig. 2) that aligns learning objectives with assessments to guide students' learning process, ensuring that they can achieve the learning outcomes and deliver their projects by the end of the course.

FIG. 2: Project-phase curriculum: objectives and assessments

- Online Self-Directed Learning (SDL): SDL is “a process in which individuals take the initiative, with or without the help of others, in diagnosing their learning needs, formulating learning goals, identifying human and material resources for learning, choosing and implementing appropriate learning strategies, and evaluating learning outcomes” (Knowles, 1975, p. 18). It is an essential characteristic of PjBL and other forms of inquiry-based learning. Students' success depends on it, and developing SDL skills is one of the learning outcomes of PjBL (De Corte, 1996; Garrison, 1997; Knowles, 1975). Nevertheless, SDL can be challenging for students, especially novices, because the self-directed nature of PjBL involves initiating an inquiry, directing the investigation, managing time, and using technologies productively (Grant, 2011; Krauss & Boss, 2013). In contrast to most other online courses, which typically deliver a series of video lectures on predetermined topics, this course provided students with a library of curated resources categorized by topic in the field of educational technology, including:

- Instructor-recorded videos introducing course topics

- Interview videos of experts from the field of educational technology

- Scholarly readings categorized by course topics

- Research how-to guides

Students are prescribed a weekly agenda for using this course library as a starting point for their exploration and investigation. Their interests and the project track (i.e., research, development, or education) they choose to pursue drive their exploration of the course content. This SDL is guided by the following three assignments during the first four weeks of the semester. These assignments serve as scaffolds that help students identify a driving question in an area of interest and carry them to the point of proposing a project.

- Assignment 1: Complete a logic chart for each of five papers, mapping out how each paper addresses its research objectives through its methodology, audience, and results. This activity helps students structure the eventual proposal of their work by reviewing the structure of others' work.

- Assignment 2: Complete a literature-backed analysis of a problem statement, integrating sources into the writing with proper in-line citations.

- Assignment 3: Write a problem statement and a set of research questions. The problem statement covers projects that are more development- or design-oriented, while the research questions cover projects that are more research-oriented.

- Online Communities of Inquiry: PjBL highlights the importance of engaging students by placing their learning in social and physical contexts that represent real-life situations in which social interactions and collaborations are essential to problem-solving (Lee et al., 2015; Lin & Tsai, 2016; Swift, 2014). This class built online communities of inquiry by incentivizing a comprehensive set of participatory activities (Garrison et al., 1999; Garrison, 2009). Students could earn participation credit in four ways:

- Participating in peer review: Each week, students were asked to review four classmates' assignments and give feedback. They could also request to review additional classmates' work for extra points. Peer reviews were graded on how substantive they were.

- Contributing to the online course forum: Different topics were set for discussion five days a week. Students were encouraged to contribute high-quality posts to the course forum on these topics or any other topics that came to mind.

- Participating in classmates' projects: Many projects in the class involved evaluating prototypes, sending surveys, or conducting interviews, and credit was given for acting as participants in classmates' projects.

- Completing course surveys: Student feedback on the course's pace, rigor, and structure was systematically gathered through surveys distributed at key points during the semester: at the start, a quarter of the way through, at midterm, and at the end. Completing these surveys also earned participation points.

- Online Feedback at Scale: As noted earlier, the median enrollment of the class was 171. Given the size of the class, it was not realistic for the instructor to review and evaluate every student's assignment submissions and offer individual guidance as students formulated their ideas and developed their projects. This challenge was addressed through feedback from a designated mentor and from peers. Each student was paired with one of the course's mentors. Institutionally, mentors are classified as TAs, but most of them had real-world experience with educational technology. Each mentor advised approximately twenty students, with an average time commitment of 30 minutes per student per week. The mentor's key responsibility was to review, evaluate, and regulate students' progress and performance by providing weekly feedback. In addition, students could review the work of up to eight classmates per week, with additional credit for completing reviews early in the week to encourage rapid feedback. Feedback from mentors and peers provided students with critique, correction, and guidance on their work, but ownership and accountability lay with the students. If an assignment received a grade lower than an A, the student was allowed to revise it based on the feedback received and resubmit it by a set deadline; the grade was adjusted accordingly if the revision met the criteria for an A. This practice provided students with multiple opportunities for formative assessment and revision, which are critical in PjBL (Helle et al., 2006). It should be noted that implementing peer feedback requires training and practice (Topping, 1998, 2009). Students in the class were given instructions on providing constructive feedback, along with examples of good-quality feedback. Rubrics were also used to guide and scaffold the feedback process by helping students focus on formulating and delivering feedback on target areas.
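The course's home-grown peer review platform is not described in enough detail to reproduce, but the weekly quota of four reviews per student raises a concrete design question: how to distribute reviews so that every submission is reviewed equally often and nobody reviews their own work. One common approach, offered here only as a hypothetical sketch and not as the platform's actual algorithm, is a shuffled round-robin in which each student reviews the next k classmates around a randomly ordered circle. The function `assign_peer_reviews` and its parameters are illustrative assumptions.

```python
import random


def assign_peer_reviews(student_ids, reviews_per_student=4, seed=None):
    """Hypothetical round-robin assignment of weekly peer reviews.

    Assumes student_ids are unique. Returns a dict mapping each reviewer to
    the classmates whose work they review: every student reviews
    `reviews_per_student` classmates, every submission receives exactly that
    many reviews, and nobody reviews their own work.
    """
    n = len(student_ids)
    if n <= reviews_per_student:
        raise ValueError("need more students than reviews per student")
    order = list(student_ids)
    random.Random(seed).shuffle(order)  # randomize who is paired with whom
    assignments = {}
    for i, reviewer in enumerate(order):
        # Offsets 1..k around the shuffled circle are distinct and never 0,
        # so each reviewer gets k different authors, none of them themselves.
        assignments[reviewer] = [
            order[(i + offset) % n] for offset in range(1, reviews_per_student + 1)
        ]
    return assignments


if __name__ == "__main__":
    roster = [f"student{i:03d}" for i in range(171)]  # median enrollment
    plan = assign_peer_reviews(roster, reviews_per_student=4, seed=2015)
    print(len(plan), len(plan["student000"]))
```

Because each author sits k positions ahead of exactly k reviewers on the circle, the load is balanced by construction. Optional extra reviews beyond the quota (up to eight per week in this course) would need a separate pass that this sketch does not model.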

3.3 Three Instructional Strategies

Three instructional strategies were used to support the PjBL implementation in this course: scaffolding, modeling, and mentoring, which play essential roles in inquiry-based learning environments (Jonassen, 1999).

- Scaffolding: A learning scaffold can be any resource that supports student learning and performance beyond the learner's capabilities (MacLeod & van der Veen, 2020; van Rooij, 2009; Saye & Brush, 2002). Teachers, peers, learning materials, and technology can all serve as scaffolds (Blumenfeld et al., 1991; 2000). At the course level, the project-phase course structure (see Fig. 2) was used as a major scaffold, breaking down a semester-long project into smaller and more manageable tasks for all students. At the project-phase level, the assignments served as scaffolds that ensure students can propose and craft a project plan. Finally, the online learning technologies used in this class provided scaffolds for enabling and supporting online communication and collaboration among the students.

- Modeling: Modeling provides learners with an example of the desired performance and includes behavior modeling and cognitive modeling (Jonassen, 1999). Students could access examples of projects developed by past students who agreed to share them. These examples served as cognitive models that helped students identify what to do in their projects, and how, to meet the expected performance. The presence of these exemplary projects helped create long-term communities of inquiry (Garrison et al., 1999; Garrison, 2009) around the course, and current students commonly wrote to past students for insights into their work.

- Mentoring: In higher education, mentoring has been broadly defined as a process whereby knowledgeable and experienced persons instruct, counsel, guide, and facilitate the intellectual and/or career development of persons identified as protégés (Blackwell, 1989). There are four major domains of mentoring: (1) psychological and emotional support; (2) support for setting goals and choosing a career path; (3) academic subject knowledge support; and (4) specification of a role model (Crisp & Cruz, 2009). Studies on mentoring in education have demonstrated its positive impact on student retention, graduation rates, and overall satisfaction with the educational environment (Crisp & Cruz, 2009). It should be noted that mentors are not exclusively faculty members; staff, senior students, peers, or even friends can also fulfill this role (Kram & Isabella, 1985). In this large online class, mentoring was provided by a team of TAs, each assigned to mentor about twenty students. As described in the Online Feedback at Scale section, each student in the class was paired with a mentor who reviewed, evaluated, and provided feedback on their work weekly. All questions and feedback were exchanged in a private thread on the course discussion forum, forming a semester-long conversation between the student and mentor. These threads were visible to all mentors in the class, allowing the mentors to learn peripherally from, and collaborate based on, one another's activity. In addition to the discussion thread, students were encouraged to communicate with their mentor via email and video conferencing for in-depth discussions of their projects. This consistent partnership throughout the semester allowed the mentors to tailor their communication to each student's unique history and progress. While the focus was on academic support, mentors also engaged in social-emotional interactions, offering encouragement and support throughout the learning process. Additionally, the mentors acted as role models: by sharing their own personal and professional experiences, they provided students with real-world perspectives and strategies for navigating their projects.

3.4 Technologies for Online Project-Based Learning

Several online learning technologies were leveraged to support and scale up PjBL in this class:

- EdTech Course Library: The course library was developed on GitHub from resources initially curated by the course instructor and expanded over the years by students who took the class. GitHub was chosen primarily for its version control and collaboration features. Its version control system allows efficient tracking of changes and contributions over time, ensuring that the library's evolution is well documented and easily reversible if necessary. Its collaboration features enable seamless contributions from multiple students, supporting a dynamic and cumulative development process.

- Peer Feedback: A home-grown peer review platform was used for students to provide feedback on each other's homework and projects. This system was integrated with the university's learning management system. Rubrics were created and used as criteria for numeric evaluation, and written feedback was entered in a free-response box.

- Peer Survey: Students from the class developed this platform for students to participate in their peers' projects by taking surveys, evaluating prototypes, participating in interviews, and so on.

- Forum: An online Q&A forum (originally Piazza, more recently EdStem) was used for course communication and interaction among all course participants. The online forum became the classroom, where students deeply engaged in conversations and daily discussions on different topics.

3.5 Summary of the Online PjBL Pedagogy

Table 1 summarizes the pedagogical rationale of the four core components of the online PjBL model, the challenges they address, and corresponding implementation strategies.

TABLE 1: Online project-based learning: pedagogy, challenges, and strategies

| Component | Pedagogical Rationale | Challenges Addressed | Implementation Strategies |
| --- | --- | --- | --- |
| Project-Phase Curriculum | Breaking down a complex project into multiple phases could help achieve project success within the constraints of time, cost, and quality. | Learners and teachers may not have the necessary training in project management to effectively execute a complex project. | A project-phase curriculum clearly defines learning objectives and aligns them with assessments to guide learners' learning process, ensuring that they have multiple opportunities for formative assessment and achieve the learning outcomes. |
| Online Self-Directed Learning (SDL) | Developing SDL skills is one of the learning outcomes of PjBL, and learners' success depends on these skills. | Novice learners may struggle to identify an area of interest and initiate a project plan. | Scaffolding: Scaffolding assignments support learners and ensure they can propose a project. Modeling: Exemplary projects from previous semesters serve as sources of guidance and inspiration. |
| Online Communities of Inquiry | Social interactions and collaborations are essential in real-world problem-solving. | Online learners are not collocated, and communities of inquiry may not be automatically formed. | A participation policy incentivizes a comprehensive set of participatory activities. |
| Online Feedback at Scale | It is critical that students have multiple opportunities for formative assessment and revision. | Instructors do not have the time or capacity to provide all students with prompt and quality feedback. | Mentoring: Each learner receives feedback regularly from a dedicated teaching assistant who serves as a mentor throughout the semester. Peer Feedback: Learners provide feedback to each other and earn participation credit by doing so. |

4. MATERIALS AND METHODS

Survey research was the primary method for collecting and analyzing data to measure the effectiveness of the online PjBL model. Students enrolled in the course were invited to take a course survey administered through the learning management system used at the university. Although students were required to log in with their university user account and password, the survey was anonymous. Participation was voluntary, and no incentives were offered for participating in the study. Approval was obtained from the university's Institutional Review Board (IRB), ensuring adherence to ethical standards and guidelines for research involving human subjects.

4.1 Participants

A total of 3340 students were enrolled in the course, and 2179 of them completed the survey. The response rate was 65%. Table 2 provides detailed demographics of the participants.

TABLE 2: Demographics of students who participated in the survey from fall 2015 to fall 2022

| Demographic | Category | N | % |
|---|---|---|---|
| Age | 18–24 | 238 | 10.9 |
| | 25–34 | 1218 | 56.0 |
| | 35 and above | 719 | 33.1 |
| | Total | 2175 | 100.0 |
| Gender | Female | 450 | 20.9 |
| | Male | 1706 | 79.1 |
| | Total | 2156 | 100.0 |
| Highest Prior Education | Bachelor's degree | 1663 | 76.7 |
| | Master's/Doctoral/Professional degree | 506 | 23.3 |
| | Total | 2169 | 100.0 |
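The response rate in Section 4.1 and the percentages in Table 2 follow from simple ratios. The minimal sketch below recomputes them; the constant and function names are illustrative and do not describe the authors' SPSS workflow. Each demographic question is assumed to have its own valid N (presumably because some respondents skipped a question), which would explain why the totals of 2175, 2156, and 2169 fall below the 2179 respondents.

```python
# Figures reported in Section 4.1 and Table 2.
ENROLLED = 3340
RESPONDENTS = 2179

# Counts per category; each question's valid N is the sum of its counts.
TABLE_2 = {
    "Age": {"18–24": 238, "25–34": 1218, "35 and above": 719},
    "Gender": {"Female": 450, "Male": 1706},
    "Highest Prior Education": {
        "Bachelor's degree": 1663,
        "Master's/Doctoral/Professional degree": 506,
    },
}


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


def summarize(table: dict) -> list:
    """Build (question, category, N, %) rows, using each question's valid N."""
    rows = []
    for question, counts in table.items():
        valid_n = sum(counts.values())
        for category, n in counts.items():
            rows.append((question, category, n, percent(n, valid_n)))
        rows.append((question, "Total", valid_n, 100.0))
    return rows


if __name__ == "__main__":
    print(f"Response rate: {percent(RESPONDENTS, ENROLLED)}%")
    for row in summarize(TABLE_2):
        print(" | ".join(str(cell) for cell in row))
```

The unrounded response rate is 65.2%, which the text reports as 65%.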

4.2 The Survey Instrument

The survey included questions for feedback on various course elements, but only the following seven questions are reported in this study to address the three research questions:

- Demographics: Three questions asked about students' age, gender, and highest prior education (see Table 2).

- Course effectiveness: Students rated the effectiveness of the course on a seven-point Likert scale (1 = Very Poor, 7 = Excellent).

- Online program experience: Students reported the number of courses they had taken in the online program.

- Project decision timing: Students indicated when they decided on their project for the course.

- Advice for future students: An open-ended question asked students, based on their experiences with the course, to advise future students on how to succeed in online PjBL.

5. RESULTS

5.1 Research Question 1

To address the first research question, which concerns students' perceptions of course effectiveness and how these perceptions vary across demographic groups, we asked participants to rate the course on a seven-point Likert scale, where 1 indicated “Very Poor” and 7 indicated “Excellent.” The ratings were analyzed using descriptive statistics in SPSS. The results revealed an overall positive evaluation of the course (M = 5.79, SD = 1.11).

A one-way ANOVA was conducted to examine whether perceived course effectiveness differed by age. A statistically significant difference was found among the three age groups (18–24, 25–34, and 35 and above), F (2, 2168) = 21.098, p < 0.001, η² = 0.019. Games-Howell post hoc tests showed that participants aged 35 and above rated course effectiveness significantly higher (M = 6.01, SD = 1.01) than those aged 18–24 (M = 5.65, SD = 1.15), p < 0.001, and those aged 25–34 (M = 5.69, SD = 1.14), p < 0.001.
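The F ratio and η² above can be approximately recovered from group summary statistics. The sketch below implements the classic equal-variance one-way ANOVA; the `Group` and `one_way_anova` names are hypothetical. Two caveats apply: it assumes the age-group sizes in Table 2, whereas the reported df of 2168 implies 2171 rated responses, and it uses the rounded means and SDs, so its F only approximates the reported 21.098. It does not implement the Games-Howell post hoc test, which compares each pair of groups using separate (Welch-type) standard errors and the studentized range distribution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Group:
    n: int       # number of respondents in the group
    mean: float  # mean course-effectiveness rating
    sd: float    # sample standard deviation (n - 1 denominator)


def one_way_anova(groups):
    """Equal-variance one-way ANOVA computed from group summary statistics.

    Returns (F, df_between, df_within, eta_squared).
    """
    total_n = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares recovered from each group's variance.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


if __name__ == "__main__":
    # Age-group means and SDs from the text; group sizes assumed from Table 2.
    age_groups = [
        Group(n=238, mean=5.65, sd=1.15),   # 18-24
        Group(n=1218, mean=5.69, sd=1.14),  # 25-34
        Group(n=719, mean=6.01, sd=1.01),   # 35 and above
    ]
    f_stat, df_b, df_w, eta_sq = one_way_anova(age_groups)
    print(f"F({df_b}, {df_w}) = {f_stat:.2f}, eta squared = {eta_sq:.3f}")
```

The size-weighted grand mean of these three groups is about 5.79, matching the overall mean rating reported in Section 5.1.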

Independent-samples t-tests were performed to compare course ratings by gender and by highest prior degree. Female students (M = 5.97, SD = 1.03) rated the course significantly higher than male students (M = 5.75, SD = 1.13), t (2150) = 3.73, p = 0.002, indicating that female students tended to perceive the course as more effective. However, no significant difference was found between participants holding a bachelor's degree (M = 5.81, SD = 1.12) and those with an advanced (master's, doctoral, or professional) degree (M = 5.74, SD = 1.07), t (2163) = 1.18, p = 0.860. This suggests that students' prior educational level did not significantly influence their perceptions of course effectiveness.
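As an arithmetic check, the t statistics above can be approximated from the reported means and standard deviations. The sketch assumes Student's pooled-variance t-test (the text does not say whether the equal-variance or the Welch result was reported) and uses the Table 2 group sizes. As a result, its degrees of freedom (2154 and 2167) differ slightly from the reported 2150 and 2163, presumably because some respondents did not rate the course. The function name is hypothetical. P-values are omitted because they require the CDF of the t distribution, which the Python standard library does not provide.

```python
import math


def pooled_t_test(n1, mean1, sd1, n2, mean2, sd2):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df). Assumes equal population variances; a Welch test would
    instead use sd1**2 / n1 + sd2**2 / n2 as the squared standard error,
    with Welch-Satterthwaite degrees of freedom.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


if __name__ == "__main__":
    # Female vs. male ratings; group sizes assumed from Table 2.
    t_gender, df_gender = pooled_t_test(450, 5.97, 1.03, 1706, 5.75, 1.13)
    # Bachelor's vs. advanced degree ratings; group sizes assumed from Table 2.
    t_degree, df_degree = pooled_t_test(1663, 5.81, 1.12, 506, 5.74, 1.07)
    print(f"gender: t({df_gender}) = {t_gender:.2f}")
    print(f"degree: t({df_degree}) = {t_degree:.2f}")
```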

5.2 Research Question 2

This question investigates whether students' experience in the online program influenced the timing of their project decisions and, in turn, whether that timing affected their perceived course effectiveness. Because the course had no prerequisites, students frequently grappled with questions such as: Should I take the course upon entering the online program? Would it be wiser to wait until I have completed several courses and am better prepared? Can I take the course without a clear project idea in mind? We hypothesized that advanced students (those who entered the program earlier and had completed more courses) would reach project decisions sooner than novice students.

One-way ANOVAs (Table 3) were conducted to examine differences in the number of completed courses and in perceived course effectiveness among participants who made their project decisions at different points. No statistically significant difference was found in the number of courses students had completed, F (3, 2119) = 0.165, p = 0.920; therefore, the hypothesis that more advanced students make earlier project decisions was not supported. However, project decision time had a significant effect on course ratings, F (3, 2119) = 5.01, p = 0.002, η² = 0.007. Tukey's HSD test for multiple comparisons showed that participants who decided on their projects during the proposal writing process, the last stage of project decision-making, rated course effectiveness significantly lower than both those who decided during the first weeks of the class and those who decided during the miniproposal assignment. In other words, participants who deferred their project choice until late in the course tended to perceive the course as less effective than those who decided earlier.
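The reported effect size can be checked against the F ratio. For a one-way ANOVA, η² = F·df_between / (F·df_between + df_within). The minimal sketch below applies that identity to the reported values; the function name is illustrative. An η² of about 0.007 means that project decision time accounts for less than 1% of the variance in course ratings, so the effect is statistically significant but modest in size.

```python
def eta_squared_from_f(f_stat, df_between, df_within):
    """Recover eta squared for a one-way ANOVA from its F ratio and dfs.

    F = (SS_b / df_b) / (SS_w / df_w) implies SS_b / SS_w = F * df_b / df_w,
    so eta^2 = SS_b / (SS_b + SS_w) = F * df_b / (F * df_b + df_w).
    """
    weighted_f = f_stat * df_between
    return weighted_f / (weighted_f + df_within)


if __name__ == "__main__":
    print(round(eta_squared_from_f(5.01, 3, 2119), 3))    # decision timing: 0.007
    print(round(eta_squared_from_f(21.098, 2, 2168), 3))  # age groups: 0.019
```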

TABLE 3: Means, standard deviations, and one-way analyses of variance in the number of courses and course effectiveness rating by project decision time

| Measure | Prior to starting the class, M (SD) | During the first couple of weeks of the class, M (SD) | During the miniproposal assignment, M (SD) | During the proposal writing process, M (SD) | F |
|---|---|---|---|---|---|
| Number of courses completed | 4.63 (2.69) | 4.55 (2.80) | 4.61 (2.65) | 4.66 (2.77) | 0.78 |
| Course effectiveness rating | 5.82 (1.12) | 5.82 (1.08) | 5.87 (1.06) | 5.61 (1.23) | 3.01† |

† p < 0.05

5.3 Research Question 3

We asked students to advise future students on how to succeed in this course, and 1677 students responded. Their responses were analyzed using all-mpnet-base-v2 (https://huggingface.co/sentence-transformers/all-mpnet-base-v2), a pretrained sentence-transformers model for natural language processing tasks. The model converts sentences or paragraphs into fixed-length numerical representations (embeddings) that capture the semantic content of the text. These embeddings were then used for text clustering, which automatically groups texts into clusters according to their semantic similarity. We employed a five-step pipeline previously used and tested for analyzing online course reviews (Xiao et al., 2022). Text clustering yielded ten suggestions for future students on how to succeed in the course, focused on mentoring, collaborative learning, SDL, and project and time management (see Appendix B).
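The five-step pipeline of Xiao et al. (2022) is not reproduced here. As a rough illustration of the general embed-then-cluster idea only, the sketch below groups precomputed embedding vectors by cosine similarity with a simple spherical k-means. The choice of k-means, the deterministic farthest-point initialization, the value of k, and all function names are assumptions, not the authors' settings. In practice, the vectors would come from the model through the `sentence-transformers` package, as shown in the comment.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def spherical_kmeans(vectors, k, iters=50):
    """Assign each embedding to one of k clusters by cosine similarity.

    Deterministic farthest-point initialization: start from the first vector,
    then repeatedly add the vector least similar to all chosen centroids.
    """
    points = [normalize(v) for v in vectors]
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = min(points, key=lambda p: max(dot(p, c) for c in centroids))
        centroids.append(farthest)

    labels = [-1] * len(points)
    for _ in range(iters):
        new_labels = [max(range(k), key=lambda j: dot(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # assignments are stable
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties out
                centroids[j] = normalize([sum(col) for col in zip(*members)])
    return labels


if __name__ == "__main__":
    # With real data (not run here), embeddings could come from, e.g.:
    #   from sentence_transformers import SentenceTransformer
    #   model = SentenceTransformer("sentence-transformers/all-mpnet-base-v2")
    #   vectors = model.encode(responses).tolist()
    toy_vectors = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.0], [0.0, 0.1, 1.0], [0.1, 0.0, 0.9]]
    print(spherical_kmeans(toy_vectors, k=2))
```

The toy vectors are hypothetical stand-ins for the 768-dimensional embeddings that all-mpnet-base-v2 produces. Turning clusters into named strategies, such as those in Table B1, also requires interpreting each cluster, which this sketch does not attempt.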

6. DISCUSSION

This study examines students' perceptions of the effectiveness of online PjBL, grounded in a pedagogical model with four core components: project-phased curriculum, online SDL, online communities of inquiry, and online feedback at scale. These components are supported by three instructional strategies: scaffolding, modeling, and mentoring. Survey data collected over seven years consistently show positive student evaluations of the course's efficacy. In exploring demographic variations in these perceptions, we found that older and female students gave higher effectiveness ratings than their younger and male counterparts. This finding suggests that although PjBL has been employed in diverse educational contexts, it may be a particularly effective instructional method for older adults and female learners. Previous reviews noted that age and gender effects have been understudied in PjBL (Chen & Yang, 2019; Condliffe et al., 2017; Thomas, 2000). Notably, this finding aligns with existing literature indicating that older and female students tend to adapt to and succeed in online learning because of stronger self-regulation and time-management skills (McSporran & Young, 2011; Xu & Jaggars, 2013). Because these competencies are essential for succeeding in PjBL, they may help explain why the approach particularly appeals to these student subgroups.

The effects of age and gender on perceived course effectiveness have important implications for workforce development, online education, and STEM education. In the evolving postpandemic work landscape, 44% of workers' skills are expected to be disrupted within the next five years, and six in ten workers will require training by 2027 (World Economic Forum, 2023). Older learners, who often face constraints on geographic mobility for continuing education, can therefore be expected to enroll in online education in growing numbers.

Given these changes, curriculum designers should consider integrating online PjBL into courses to meet the needs of these learners. On another front, the gender differences found in this study have implications for mitigating the long-standing gender disparity in STEM education, reflected in STEM course enrollments in which male students outnumber female students. Existing literature has indicated that a masculine learning environment can induce feelings of technological inadequacy and reduced motivation among women (Yates & Plagnol, 2022). Initiatives aimed at closing this gender gap and enhancing gender parity in STEM classrooms often advocate for a gender-inclusive curriculum and pedagogy that “incorporates long-term, self-directed projects; includes open-ended assessments that take on diverse forms; emphasizes collaboration and communication; provides a supportive environment; uses real-life contexts” (Brotman & Moore, 2008, p. 983). PjBL, which possesses all of these characteristics, thus emerges as a promising gender-inclusive pedagogical strategy. This view is supported by a study of the long-term impacts of PjBL, in which women in engineering reported more positive benefits from PjBL than men in 36 of 39 impact areas (Vaz et al., 2013). The researchers attributed this difference to motivation: female students were more motivated than male students by opportunities for social context and collaboration, which led to greater engagement in PjBL. This interpretation is consistent with the strategies suggested by students in the current study, particularly mentoring and collaborative learning, which foster a supportive community of inquiry for all students in this large online class.
The gender effect uncovered in this study further underscores PjBL's potential as a strategy for enhancing the retention and success of female students in STEM education.

The significant effect of project decision timing found in this study underscores the critical role of project management skills in online PjBL. Although students' online program experience, measured by the number of courses completed in the program, was not associated with the timing of their project decisions, the timing itself significantly affected their perceived course efficacy. This finding resonates with the project management strategies suggested by students, such as identifying a project idea early, picking a passion project, starting a project early, and staying on task. For students who are still developing these skills, it is pivotal that course instructors and mentors support them through the instructional strategies of scaffolding, mentoring, and modeling. By providing the requisite resources and support, these strategies can help students cultivate these critical skills and ultimately succeed in PjBL.

7. CONCLUSIONS

This seven-year study provides evidence that the online PjBL model employed in the online graduate course made online project-based learning possible, successful, scalable, and sustainable, as reflected in students' consistently positive perceptions of its effectiveness. The findings highlight the intricate interplay between students' individual attributes and their perceptions of the effectiveness of online PjBL, an insight with important implications for addressing challenges in workforce development, online education, and STEM education. As education evolves to serve an increasingly diverse student population, these findings hold promise for fostering more effective and inclusive pedagogical practices. Although the model was designed for online PjBL, educators and practitioners can integrate its principles into their teaching according to their own contexts and available resources, acknowledging the inherent complexities of PjBL implementation.

Because this study gauged students' perceptions of the model's effectiveness, further research is needed to examine its effects on students' learning performance. Additionally, this study focused on graduate students in computer science; the model's applicability across disciplines and academic levels remains to be explored.

ACKNOWLEDGMENTS

We are grateful to Chenghao Xiao at Durham University for his assistance and advice on using large language models for data analysis. We also thank Rochan Madhusudhana at the Georgia Institute of Technology and Wesley Morris at Vanderbilt University for their help with experimenting with the language models.

REFERENCES

Beneroso, D. & Robinson, J. (2022). Online project-based learning in engineering design: Supporting the acquisition of design skills. Education for Chemical Engineers, 38, 38–47. https://doi.org/10.1016/j.ece.2021.09.002

Blackwell, J. E. (1989). Mentoring: An action strategy for increasing minority faculty. Academe, 75(5), 8–14. https://doi.org/10.2307/40249734

Blumenfeld, P., Fishman, B. J., Krajcik, J., Marx, R. W., & Soloway, E. (2000). Creating usable innovations in systemic reform: Scaling up technology-embedded project-based science in urban schools. Educational Psychologist, 35(3), 149–164. https://doi.org/10.1207/s15326985ep3503_2

Blumenfeld, P. C., Soloway, E., Marx, R. W., Krajcik, J. S., Guzdial, M., & Palincsar, A. (1991). Motivating project-based learning: Sustaining the doing, supporting the learning. Educational Psychologist, 26(3-4), 369–398. https://doi.org/10.1080/00461520.1991.9653139

Brotman, J. S. & Moore, F. M. (2008). Girls and science: A review of four themes in the science education literature. Journal of Research in Science Teaching, 45(9), 971–1002. https://doi.org/10.1002/tea.20241

Çakiroğlu, Ü. & Erdemir, T. (2019). Online project based learning via cloud computing: Exploring roles of instructor and students. Interactive Learning Environments, 27(4), 547–566. https://doi.org/10.1080/10494820.2018.1489855

Chanpet, P., Chomsuwan, K., & Murphy, E. (2020). Online project-based learning and formative assessment. Technology, Knowledge and Learning, 25, 685–705. https://doi.org/10.1007/s10758-018-9363-2

Chen, C. & Yang, Y. (2019). Revisiting the effects of project-based learning on students' academic achievement: A meta-analysis investigating moderators. Educational Research Review, 26, 71–81. https://doi.org/10.1016/j.edurev.2018.11.001

Ching, Y. H. & Hsu, Y. C. (2013). Peer feedback to facilitate project-based learning in an online environment. International Review of Research in Open and Distributed Learning, 14(5), 258–276. https://doi.org/10.19173/irrodl.v14i5.1524

Condliffe, B., Quint, J., Visher, M. G., Bangser, M. R., Drohojowska, S., Saco, L., & Nelson, E. (2017). Project-based learning: A literature review. New York, NY: Manpower Demonstration Research Corporation (MDRC). Retrieved from https://www.mdrc.org/sites/default/files/Project-Based_Learning-LitRev_Final.pdf

Crisp, G. & Cruz, I. (2009). Mentoring college students: A critical review of the literature between 1990 and 2007. Research in Higher Education, 50(6), 525–545. https://doi.org/10.1007/s11162-009-9130-2

De Corte, E. (1996). Learning theory and instructional science. In P. Reimann & H. Spada (Eds.), Learning in Humans and Machines (pp. 97–108). Pergamon, NY.

García, C. (2016). Project-based learning in virtual groups-collaboration and learning outcomes in a virtual training course for teachers. Procedia-Social and Behavioral Sciences, 228, 100–105. https://doi.org/10.1016/j.sbspro.2016.07.015

Garrison, D. R. (1997). Self-directed learning: toward a comprehensive model. Adult Education Quarterly, 48(1), 18–33. https://doi.org/10.1177/074171369704800103

Garrison, D. R. (2009). Communities of inquiry in online learning. In Encyclopedia of distance learning, 2nd ed. (pp. 352–355). IGI Global. https://doi.org/10.4018/978-1-60566-198-8.ch052

Garrison, D. R., Anderson, T., & Archer, W. (1999). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87–105. https://doi.org/10.1016/s1096-7516(00)00016-6

Grant, M. M. & Branch, R. B. (2005). Project-based learning in a middle school: Tracing abilities through the artifacts of learning. Journal of Research on Technology in Education, 38(1), 65–98. https://doi.org/10.1080/15391523.2005.10782450

Guo, P., Saab, N., Post, L. S., & Admiraal, W. (2020). A review of project-based learning in higher education: Student outcomes and measures. International Journal of Educational Research, 102, 101586. https://doi.org/10.1016/j.ijer.2020.101586

Helle, L., Tynjälä, P., & Olkinuora, E. (2006). Project-based learning in post-secondary education – Theory, practice and rubber sling shots. Higher Education, 51(2), 287–314. https://doi.org/10.1007/s10734-004-6386-5

Heo, H., Lim, K. Y., & Kim, Y. (2010). Exploratory study on the patterns of online interaction and knowledge co-construction in project-based learning. Computers & Education, 55(3), 1383–1392. https://doi.org/10.1016/j.compedu.2010.06.012

Jonassen, D. H. (1999). Designing constructivist learning environments. In C. M. Reigeluth (Ed.), Instructional design theories and models: A new paradigm of instructional theory (Vol. II, pp. 215–239). Mahwah, NJ: Lawrence Erlbaum.

Knowles, M. S. (1975). Self-directed learning: A guide for learners and teachers. New York, NY: Association Press. https://doi.org/10.1177/105960117700200220

Koh, J. H. L., Herring, S. C., & Hew, K. F. (2010). Project-based learning and student knowledge construction during asynchronous online discussion. The Internet and Higher Education, 13(4), 284–291. https://doi.org/10.1016/j.iheduc.2010.09.003

Kram, K. E. & Isabella, L. A. (1985). Mentoring alternatives: The role of peer relationships in career development. Academy of Management Journal, 28(1), 110–132.

Krauss, J. & Boss, S. (2013). Thinking through project-based learning: Guiding deeper inquiry (1st ed.). Thousand Oaks, CA: Corwin.

Larmer, J. & Mergendoller, J. R. (2010). Seven essentials for project-based learning. Educational Leadership, 68(1), 34–37.

Larmer, J. (2020). Gold standard PjBL: Essential project design elements. Buck Institute for Education. Retrieved from https://www.pblworks.org/blog/gold-standard-pbl-essential-project-design-elements

Larmer, J., Mergendoller, J. R., & Boss, S. (2015). Setting the standard for project-based learning: A proven approach to rigorous classroom instruction. Alexandria, VA: ASCD.

Lee, D., Huh, Y., & Reigeluth, C. M. (2015). Collaboration, intragroup conflict, and social skills in project-based learning. Instructional Science, 43(5), 561–590. https://doi.org/10.1007/s11251-015-9348-7

Lin, J. W. & Tsai, C. W. (2016). The impact of an online project-based learning environment with group awareness support on students with different self-regulation levels: An extended-period experiment. Computers & Education, 99, 28–38. https://doi.org/10.1016/j.compedu.2016.04.005

Lou, Y. (2004). Learning to solve complex problems through between-group collaboration in project-based online courses. Distance Education, 25(1), 49–66. https://doi.org/10.1080/0158791042000212459

Lou, Y. & MacGregor, S. K. (2004). Enhancing project-based learning through online between-group collaboration. Educational Research and Evaluation, 10(4-6), 419–440. https://doi.org/10.1080/13803610512331383509

MacLeod, M. & van der Veen, J. T. (2020). Scaffolding interdisciplinary project-based learning: a case study. European Journal of Engineering Education, 45(3), 363–377. https://doi.org/10.1080/03043797.2019.1646210

Markham, T., Larmer, J., & Ravitz, J. (2003). Project-Based Learning Handbook: A Guide to Standards Focused Project-Based Learning for Middle and High School Teachers. Novato, CA: Buck Institute for Education.

McSporran, M. & Young, S. (2011). Does gender matter in online learning? Research in Learning Technology, 9(2). https://doi.org/10.3402/rlt.v9i2.12024

Morgan, A. (1984). Overview: project-based learning. In Henderson, E. S. & Natheson, M. B. (Eds.), Independent learning in higher education (pp. 221–237). Milton Keynes, England: The Open University.

Packendorff, J. (1995). Inquiring into the temporary organization: New directions for project management research. Scandinavian Journal of Management, 11(4), 319–333. https://doi.org/10.1016/0956-5221(95)00018-q

Papanikolaou, K. & Boubouka, M. (2010). Promoting collaboration in a project-based e-learning context. Journal of Research on Technology in Education, 43(2), 135–155. https://doi.org/10.1080/15391523.2010.10782566

Ravitz, J. (2010). Beyond changing culture in small high schools: Reform models and changing instruction with project-based learning. Peabody Journal of Education, 85(3), 290–312. https://doi.org/10.1080/0161956x.2010.491432

Saye, J. W. & Brush, T. (2002). Scaffolding critical reasoning about history and social issues in multimedia-supported learning environments. Educational Technology Research and Development, 50(3), 77–96. https://doi.org/10.1007/bf02505026

Seaman, J. (2021). Digital learning pulse survey. Retrieved from https://www.bayviewanalytics.com/reports/pulse/slides_spring2021.pdf

Shih, W. L. & Tsai, C. Y. (2017). Students' perception of a flipped classroom approach to facilitating online project-based learning in marketing research courses. Australasian Journal of Educational Technology, 33(5), 32–49. https://doi.org/10.14742/ajet.2884

Swift, L. (2014). Online communities of practice and their role in educational development: A systematic appraisal. Community Practitioner, 87(4), 28–32.

Thomas, J. W. (2000). A review of research on project-based learning. San Rafael, CA: The Autodesk Foundation. Retrieved from https://my.pblworks.org/resource/document/a_review_of_research_on_project_based_learning

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249–276. https://doi.org/10.3102/00346543068003249

Topping, K. J. (2009). Peer assessment. Theory into Practice, 48(1), 20–27. https://doi.org/10.1080/00405840802577569

Turner, R. J. & Müller, R. (2003). On the nature of the project as a temporary organization. International Journal of Project Management, 21(1), 1–8. https://doi.org/10.1016/s0263-7863(02)00020-0

van Rooij, S. W. (2009). Scaffolding project-based learning with the project management body of knowledge (PMBOK®). Computers & Education, 52(1), 210–219. https://doi.org/10.1016/j.compedu.2008.07.012

Vaz, R. F., Quinn, P., Heinricher, A. C., & Rissmiller, K. J. (2013, June). Gender differences in the long-term impacts of project-based learning. In 2013 ASEE Annual Conference & Exposition (pp. 23–634).

World Economic Forum (May 2023). The future of jobs report 2023. Retrieved from https://www3.weforum.org/docs/WEF_Future_of_Jobs_2023.pdf

Xiao, C., Shi, L., Cristea, A., Li, Z., & Pan, Z. (2022, July). Fine-grained main ideas extraction and clustering of online course reviews. In Artificial Intelligence in Education: 23rd International Conference, AIED 2022, Durham, UK, July 27–31, 2022, Proceedings, Part I (pp. 294–306). https://doi.org/10.1007/978-3-031-11644-5_24

Xu, D. & Jaggars, S. (2013). Adaptability to online learning: Differences across types of students and academic subject areas. Journal of Higher Education, 85(5).

Xu, D. & Jaggars, S. (2013). Performance gaps between online and face-to-face courses: Differences across types of students and academic subject areas. Journal of Higher Education, 85(5). https://doi.org/10.1080/00221546.2014.11777343

Yates, J. & Plagnol, A. C. (2022). Female computer science students: A qualitative exploration of women's experiences studying computer science at university in the UK. Education and Information Technologies, 27, 3079–3105. https://doi.org/10.1007/s10639-021-10743-5

APPENDIX A.

TABLE A1: Empirical studies on online project-based learning in higher education (2000–22)

| Author(s) | Course Delivery Mode | Class Size/Course Level | Discipline/Subject Area | Online PjBL Activities | Duration of Study | Location of Study |
|---|---|---|---|---|---|---|
| Lou (2004) | Online | 11/Graduate | Education/Educational Technology | Within-group discussions; Between-group feedback | Semester | USA |
| Lou & MacGregor (2004) | Online | Class 1 – 18/Graduate | Education/Educational Research | Within-group discussions; Between-group feedback | Semester | USA |
| | In-person | Class 2 – 18/Undergraduate | Education/Educational Technology | | | |
| Heo et al. (2010) | In-person | 49/Undergraduate | Education/Educational Technology | Online group discussions | 3 weeks | Korea |
| Koh et al. (2010) | Online | 17/Graduate | Education/Instructional Design | Online discussions | 8 weeks | USA |
| Papanikolaou & Boubouka (2010) | Not specified | 82/Not specified | Computer Science/Computer Science Education | Scaffolding; Peer feedback; Group discussions | One month | Greece |
| Ching & Hsu (2013) | Online | 21/Graduate | Education/Instructional Design | Online discussion; Peer feedback | Semester | USA |
| García (2016) | Online | 40/Graduate | Education/Technology Integration into Education | Online discussions | Four weeks | Spain |
| Lin & Tsai (2016) | In-person | Class 1 – 41/Undergraduate | Business/Management Information System | Online discussion; Peer feedback | 14 weeks | Taiwan |
| | In-person | Class 2 – 43/Undergraduate | | | | |
| Shih & Tsai (2017) | In-person | 67/Undergraduate | Business/Marketing Research | Online group discussions | Semester | Taiwan |
| Çakiroğlu & Erdemir (2019) | Online | 13/Undergraduate | Education/Instructional Technology | Group projects | Semester | Turkey |
| Chanpet et al. (2020) | Online | 28/Undergraduate | Education/Media Creation | Online discussions; Instructor feedback | Semester | Thailand |
| Beneroso & Robinson (2022) | Online | 74/Undergraduate | Engineering | Online tutorials; Group/individual meetings; Mentoring | Semester | UK |

APPENDIX B.

TABLE B1: Top ten strategies suggested by students on how to succeed in the course

| Category | Strategies | Example of Responses |
|---|---|---|
| Mentoring | Work with the mentor early and closely | Interact with your mentors often and early, they are a wealth of knowledge and very helpful. |
| Collaborative Learning | Participate in peer feedback and surveys | Don't get behind in the participation, and always do the surveys and take extra peer reviews whenever you can! |
| | Join a group project if needed | Don't forget to look for a group early if you don't already have a project idea when you start the class!! |
| Self-Directed Learning | Learn from exploration | I had a hard time narrowing in on a topic, but I felt I got a lot more from the class since I explored many topics early on. You will get a lot from this class if you explore many topics early on, since you will learn more than just the project topic. |
| | Invest time in assignments | The class project seemed daunting at first, but the assignments were very helpful in preparing me to complete the project. If you have no idea what type of project you want to do, invest a lot of time in the initial assignments. It will help you develop ideas. |
| | Put in efforts and get the most out of the course | This class is different. It's a chance to really stretch yourself. “You get out of it what you put into it” is true of most courses, but it is particularly true for this class. Go for it! |
| Project and Time Management | Identify a project idea early | When you decide to register for this course, you should really start thinking about the type of project you want to do. Read ahead about the different types of projects you can do (research, content, or tool creation) and come in with an idea. This will put you ahead when you are going through the first few weeks of the course and set you up for success throughout the course. |
| | Pick a passion project | If possible, pick a project topic that you are extremely interested in or have a passion for... your commitment to the project will likely follow! |
| | Start a project early | Pick your topic early and start working on the project as soon as you can. Do not procrastinate. |
| | Stay on task | I think making sure you pace yourself and with the project, and work hard to stay on your task list's project timeline. |
