EVALUATING TECHNOLOGIES FOR COMMUNICATING MATHEMATICAL AND SCIENTIFIC REASONING IN FULLY ONLINE ENGINEERING COURSES: A TECHNOLOGY CHOICE FRAMEWORK


Richard McInnes, Joshua Cramp, & Claire Aitchison

Teaching Innovation Unit, University of South Australia, Adelaide SA 5000, Australia

*Address all correspondence to: Richard McInnes, Teaching Innovation Unit, The University of South Australia, Adelaide SA 5000, Australia, E-mail: richard.mcinnes@unisa.edu.au

Studying science, technology, engineering, and mathematics (STEM) degrees online brings unique challenges since students and academics are expected to digitally communicate complex mathematical and scientific formulas as well as draw graphs and diagrams in online environments that are ill-suited to the task. Current practices using linear syntax, graphical equation editors, and scanned handwriting all have limitations. Digital inking (that is, the use of digital stylus-based technologies and computer software to produce digitized handwriting) offers one solution. This practice-orientated case study aimed to identify and integrate a suitable technology that enabled students and academics to communicate STEM reasoning across a fully online engineering degree. The technology needed to be affordable and widely available, easy to install and use, have minimal academic integrity concerns, and be compatible with existing systems including the learning management system, virtual classroom software, and online exam invigilation software. Guided by learning design considerations and institutional, disciplinary, student, and teacher requirements, the case study demonstrates a process of decision making that enables the development of high-quality courses, positive student learning outcomes, and staff development opportunities. After reflecting upon and analyzing our experiences, we propose a concise technology choice framework to guide others through the process of technology investigation and adoption for online STEM courses.

KEY WORDS: science, technology, engineering, and mathematics (STEM), online learning and teaching, online assessment, digital inking, displaying scientific reasoning online, graphics tablet, engineering online, learning technologies

1. INTRODUCTION

Designing effective online science, technology, engineering, and mathematics (STEM) degrees brings with it unique challenges since academics and students are expected to communicate complex mathematical and scientific formulas, graphs, and diagrams in learning management systems (LMSs) that do not easily accommodate these functions (Loch & McDonald, 2007). Communicating mathematical and scientific reasoning online has long been a problem, which has been recently exacerbated by the explosion in demand for virtual learning associated with the COVID-19 global pandemic, as well as student and institutional demands for flexibility and equity and the urgent need for secure, accessible, and functional online technologies. Furthermore, the move toward remotely invigilated online exams adds additional complexities to disciplines where students are required to digitally show fully worked solutions to problems as well as draw graphs and diagrams. Consequently, institutions are urgently seeking affordable technologies appropriate for supporting student learning and assessments that require nonlinear, non-text–based computational and graphical representation online.

Implementing a technological solution across a whole degree requires the navigation of competing institutional, technological, and teaching and learning intricacies. Technologies must be compatible with STEM-specific pedagogies (Winberg et al., 2019), while not detracting from the robustness of existing assessment practices (Winger et al., 2019). Moreover, effective technological solutions must walk a tightrope, balancing successful online learning design with end-user perspectives—that is, those of the student and teacher—on perceived usefulness and ease of use (Davis, 1989). Teaching STEM online requires innovative thinking that harmonizes these often competing expectations through the incorporation of suitable educational technologies. This paper demonstrates one process for achieving this by examining learning design considerations and institutional, disciplinary, student, and teacher requirements. Using a case study methodology (Yin, 2017), we demonstrate a process of decision making that enables the development of high-quality courses, positive student learning outcomes, and staff development opportunities.

2. LITERATURE

Teaching and learning in STEM disciplines is characterized by unique signature pedagogies that have been developed to maximize learning outcomes while imparting the professional values, attitudes, and dispositions required to be successful within the field (Shulman, 2005). These pedagogies emphasize cognitive and procedural fluency (Lafreniere, 2016) in disciplinary knowledge as well as professional habits of mind, such as system thinking, problem solving, design (Lucas & Hanson, 2016), pattern finding, visualizing, conjecturing (Cuoco et al., 1996), scepticism, objectivity, and curiosity (Çalik & Coll, 2012). Transitioning STEM teaching and learning from the classroom to online courses challenges traditional pedagogies, but doing so enables academics to manipulate their practices in order to achieve objectives not possible in a face-to-face setting (Bourne et al., 2005). However, teachers can only facilitate positive learning outcomes if students are able to engage effectively with the content and demonstrate their learning through authentic assessment within the online context.

Online STEM course design faces complexity intrinsic to the text-based nature of current LMSs, which results in less than optimal learning environments for mathematics-based disciplines (Loch & McDonald, 2007). Students and teachers require an interface that enables them to communicate STEM reasoning through creating and manipulating complex mathematical and scientific formulas, graphs, and diagrams online, preferably without the constraints of using a mouse and keyboard to express symbolic subjects (Urban, 2017). In addition, the predilection for complex online exams in mathematics-based disciplines (Alksnis et al., 2020), academic integrity concerns (Trenholm, 2007), and professional accreditation requirements brings further challenges since technological solutions must also be compatible with remote invigilation systems, which already present intrinsic pedagogical challenges (Cramp et al., 2019). For students completing formative and summative assessments, access to affordable and user-friendly technological solutions can be limited, with new technologies often being challenging to learn and slow to use (Akelbek & Akelbek, 2009). Linear syntax, such as LaTeX, requires significant effort and time to learn, a challenge with already full curricula (Loch et al., 2015; Winger et al., 2019). Comparatively, graphical equation editors, which are more suitable for beginners, can be slow to use and cause students to compress calculation steps (Loch et al., 2015; Winger et al., 2019). Compressing calculation can disrupt feedback cycles by eliminating the ability for teachers to view, mark, and therefore give feedback on students' techniques, strategy, accuracy, and efficiency in the application of procedural concepts. Furthermore, studies have found that when presented with equation editors, students prefer using handwritten and scanned responses (Misfeldt & Sanne, 2012). 
A common consequence for formative and summative assessments is the use of multiple-choice–style questions (Draskovic et al., 2016; Soares & Lopes, 2018). However, while multiple-choice questions can form valid and reliable assessments (Parkes & Zimmaro, 2016), they cannot be considered a valid replacement for fully worked solutions, diagrams, and graphing in summative assessments. Additionally, because multiple-choice–style questions focus on conceptual understanding, they present a pedagogical concern: teaching staff are unable to close the feedback loop on students' procedural fluency. Furthermore, it can be challenging to display non-text–based teaching and learning materials in the LMS in ways that maximize accessibility for students (Armano et al., 2018).
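As an illustration of the linear syntax discussed above, the following sketch shows how the quadratic formula might be typed in LaTeX. The choice of formula is ours and is not drawn from the case study. It is intended only to suggest the contrast between a single handwritten line and the nested commands a student would need to learn and type.

```latex
% Illustrative only: one handwritten line becomes a sequence of nested commands.
\[
  x = \frac{-b \pm \sqrt{b^{2} - 4ac}}{2a}
\]
```

Each fraction, root, and superscript requires its own command and matched braces, which may help explain why learning such syntax competes for time in already full curricula.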

Digital inking technologies have recently shown promise in STEM teaching and learning as a way to easily and accurately represent mathematics and science online (Stephens, 2018), and the recent pivot to emergency remote teaching has triggered increased interest in their adoption (Dekkers & Hayes, 2020). In this paper, we refer to digital inking as the use of digital stylus-based technologies (for example, graphics or pen tablets, tablet PC and Mac devices, and smartpens) and computer software to produce digitized handwriting (Lafreniere, 2016). Prior contributions have highlighted increases in efficiency for instructors employing digital inking in the marking and production of multimedia (May, 2018), and students' preference for watching instructional videos utilizing digital inking (Maclaren, 2014). Furthermore, digital inking has advantages for online synchronous learning that enable instructors to facilitate interactions in real time (Iwundu, 2018), thus promoting collaborative problem solving. Digital inking technologies also exhibit high mathematical and cognitive fidelity; that is, the ability of the tool to accurately represent mathematical and scientific symbolic objects, expose students to procedural thinking, and as such allow teachers to evaluate and therefore give feedback on students' mathematical and scientific reasoning (Lafreniere, 2016).

Implementing a digital technology solution into course design and development is neither simple nor straightforward, with many competing institutional, technological, and teaching and learning factors. Employing an adaptable model such as analysis, design, development, implementation, and evaluation (ADDIE) (Molenda, 2003) can support the methodical and cyclical adoption of new educational technologies. Such systematic approaches promote consideration of end-user perspectives, including perceived usefulness and ease of use (Davis, 1989) and socio-economic barriers (Van Dijk, 2017). Likewise, the impacts of the technology on teaching, learning, and course design should be addressed to ensure that learning remains outcomes focused (Nuland et al., 2020) and equitable (Basham & Marino, 2013), and that the adoption of the technology for assessment does not affect valid, reliable assessment design (Brady et al., 2019).

3. METHOD

Cognizant of these complexities, we sought a viable solution for the efficient and accurate display and communication of non-text–based material in the LMS. In 2020, an Australian metropolitan university commenced the development of a new, fully online associate degree in engineering. Each online course (10-week unit of study) was developed in collaboration between academic subject matter experts and a team of academic developers and online educational designers over a 12-week development cycle. In total, 16 fully online courses were created over an 18-month period using an established quick course development model (McInnes et al., 2020).

A case study approach (Yin, 2017) was deemed the most appropriate way to investigate and articulate our method of technology appraisal and adoption for the particular teaching and learning challenges previously outlined. As indicated by Flyvbjerg (2006), in-depth case study research has particular resonance in scientific disciplines for the systematic development of exemplars otherwise rendered invisible through unrecorded practices. The aim of this case study was to interrogate practice and inform decisions in order to create a framework for future use. Guided by the ADDIE model, we analyzed our context and processes in light of the scholarly literature and were able to harness our cumulative experiences and knowledge as reflective practitioners, systematically and objectively building “the collective process of knowledge accumulation” (Flyvbjerg, 2006, p. 227). This case study illuminates key aspects of teaching and learning scholarship by making pedagogical decisions transparent, replicable, and subject to critical scholarly debate (Boyer, 1990).

4. TECHNOLOGY TESTING AND SELECTION

To identify suitable technologies that could be used across a fully online engineering degree, the team of academic developers and online educational designers, in consultation with academic subject matter experts, undertook the multi-step ADDIE process (Molenda, 2003). End-user analysis and an environmental scan were completed to establish the needs of the students and teaching staff and the scope of obtainable technology options, including those in use at the institution and documented in extant literature.

4.1 Student Technologies

For student use, the end-user analysis parameters required the technologies to be affordable, available domestically and internationally, intuitive to set up and use, useful across the student's whole degree, accessible, appropriate for formative and summative assessments, and compatible with university systems, including the LMS, virtual classroom, and online exam invigilation software. Several technology options were eliminated immediately, such as touch-screen and hybrid PCs, tablet devices, document cameras, and scanners, for reasons including expense, non-ubiquitous availability, complexity, and inability to work with university systems (predominantly the exam remote invigilation system). After this preliminary round of investigation, a refined list of technologies was developed. Two styles of graphical equation editors were tested: WIRIS MathType/ChemType and Microsoft Equation Editor. Several styles of market-leading pen tablets were tested, including the Wacom Intuos and Wacom One. The pen tablets were predominantly tested with Microsoft Inking across the Office 365 Suite (institutionally provided and supported software); however, a hybrid solution was also tried by using a pen tablet with the handwriting functionality in WIRIS. Finally, the LiveScribe Echo smartpen and Wacom Bamboo pen tablet were tested to digitize handwriting.

Utilizing the end-user analysis parameters, a set of technology testing criteria (Table 1) was created that the academic developers, online educational designers, and academic subject matter experts could use to evaluate each technology. Testing was conducted using sample assessment papers and solutions from previous iterations of the courses within the engineering degree in consultation with course teaching staff.

TABLE 1: Evaluation criteria for student technologies

| Criteria | Descriptor | Comparative Suitability: High | Comparative Suitability: Medium | Comparative Suitability: Low |
|---|---|---|---|---|
| Intuitiveness | How easy is the technology to set up and use out of the box? | | | |
| Learning curve | How long does it take to become proficient? | | | |
| Readability | How easy is it to read formulas, diagrams, and graphs (i.e., are they clear and well structured)? | | | |
| Speed | How long does it take to input solutions to a set of questions? | | | |
| Equity | Is it affordable and easy to obtain domestically, and internationally? | | | |
| Academic integrity | Are there any technology-specific academic integrity issues? | | | |
| Applications | Will it be useful across the degree and authentic to industry practice? | | | |
| Integration | How well does it integrate with the LMS and other university systems? | | | |
| Assessment | Does the technology enable or inhibit types of assessment? | | | |
| Exam suitability | Will the tool work with the remote invigilation system? | | | |
| Synchronous participation | Can students participate in constructing formulas in real-time? | | | |
| Collaboration | Can students and staff collaborate on documents simultaneously? | | | |
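One possible way to record evaluations against the Table 1 criteria is sketched below. This is an assumption on our part rather than the procedure used in the case study: the function names `tally` and `flagged`, the placeholder "Technology A", and its ratings are all hypothetical and do not represent study results. The sketch simply counts how many criteria fall at each suitability level and lists those rated Low for further discussion.

```python
from collections import Counter

# Criteria names taken from Table 1.
CRITERIA = [
    "Intuitiveness", "Learning curve", "Readability", "Speed", "Equity",
    "Academic integrity", "Applications", "Integration", "Assessment",
    "Exam suitability", "Synchronous participation", "Collaboration",
]
LEVELS = ("High", "Medium", "Low")


def tally(ratings):
    """Count how many criteria fall at each suitability level."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"unrated criteria: {missing}")
    bad = {c: r for c, r in ratings.items() if r not in LEVELS}
    if bad:
        raise ValueError(f"unknown ratings: {bad}")
    counts = Counter(ratings[c] for c in CRITERIA)
    return {level: counts.get(level, 0) for level in LEVELS}


def flagged(ratings):
    """Return the criteria rated Low, in Table 1 order."""
    return [c for c in CRITERIA if ratings[c] == "Low"]


# Placeholder ratings for a hypothetical "Technology A" (NOT study results).
technology_a = {c: "Medium" for c in CRITERIA}
technology_a["Readability"] = "High"
technology_a["Exam suitability"] = "Low"

print(tally(technology_a))
print(flagged(technology_a))
```

Keeping the ratings categorical, rather than converting them to a weighted numeric score, mirrors the comparative High/Medium/Low layout of the table and avoids implying a precision that the evaluation did not claim.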

The results of the testing showed that the WIRIS and Microsoft Equation Editor were very similar in relation to the criteria. The key differences were the cost to the institution of using WIRIS (an annual license fee and the ongoing maintenance associated with integration into the LMS) and the fact that the WIRIS editor could be directly integrated into the Moodle editor. Testers were impressed by both equation editors' ability to provide clear, well-formatted equations. However, their complexity would make real-time collaboration in synchronous classrooms difficult. Additionally, the equation editors limited assessment types, constraining the use of graphing and freehand diagrams. Critically, proficiency with the equation editors was slow to develop, requiring significant practice to memorize how symbols were organized in the editor. Once testers were proficient, the editors were reasonably fast; however, on average, entering solutions took more than twice as long as traditional handwriting. Furthermore, only one line at a time could be written in the editor, which slowed the entry of multi-stage calculations and complicated their formatting. These findings reaffirmed results previously reported in the literature (Akelbek & Akelbek, 2009; Loch et al., 2015; Winger et al., 2019).

The handwriting conversion functionality in WIRIS was more intuitive than that in the equation editor—being more similar to handwriting—thus, making this technique easier to learn such that proficiency can be quickly developed. However, the testers found that despite impressive character recognition, the system proved frustrating when characters and symbols were not recognized and noted that under the high-stakes pressure of an exam it could be easy to miss where handwriting was not rendered correctly—an important consideration since technologies used in online exams should not impose additional cognitive load (Cramp et al., 2019) or anxiety. As with the graphical equation editors, there were limitations on multi-line equation construction, echoing the findings from Winger et al. (2019).

The smartpen technologies were quickly discounted due to their inability to be easily used in online exams (where they contravened hardware and physical paper restrictions) and virtual classrooms (where synchronous use was not possible). Despite their similarity to handwriting and intuitiveness, the high initial and ongoing cost and the complexity of their setup meant that they were considered unsuitable.

Comparatively, the pen tablets were operationally similar, being relatively intuitive, similar to handwriting (therefore, allowing solutions to questions to be quickly written), and easy to set up with both PCs and Macs. In addition, the immediacy of their input enabled real-time participation in virtual classrooms. The disadvantages included the cost to students (approximately A$80–100) and the potential for low readability while students gained proficiency in writing with a digital stylus. Furthermore, some technical hurdles were encountered when integrating the software and uploading documents during online exams, which were identified and solved (Cramp et al., 2019). Concerns were raised about the amount of additional time that could be required in exams and the clarity of student work. Measures were taken to support students through orientation, scaffolded practice, and application via course design to mitigate these concerns. The testing noted that while one of the pen tablets had increased sensitivity and additional customizable buttons, which had the potential to improve clarity and speed up writing notation, this difference was not significant enough to favor one product over the other.

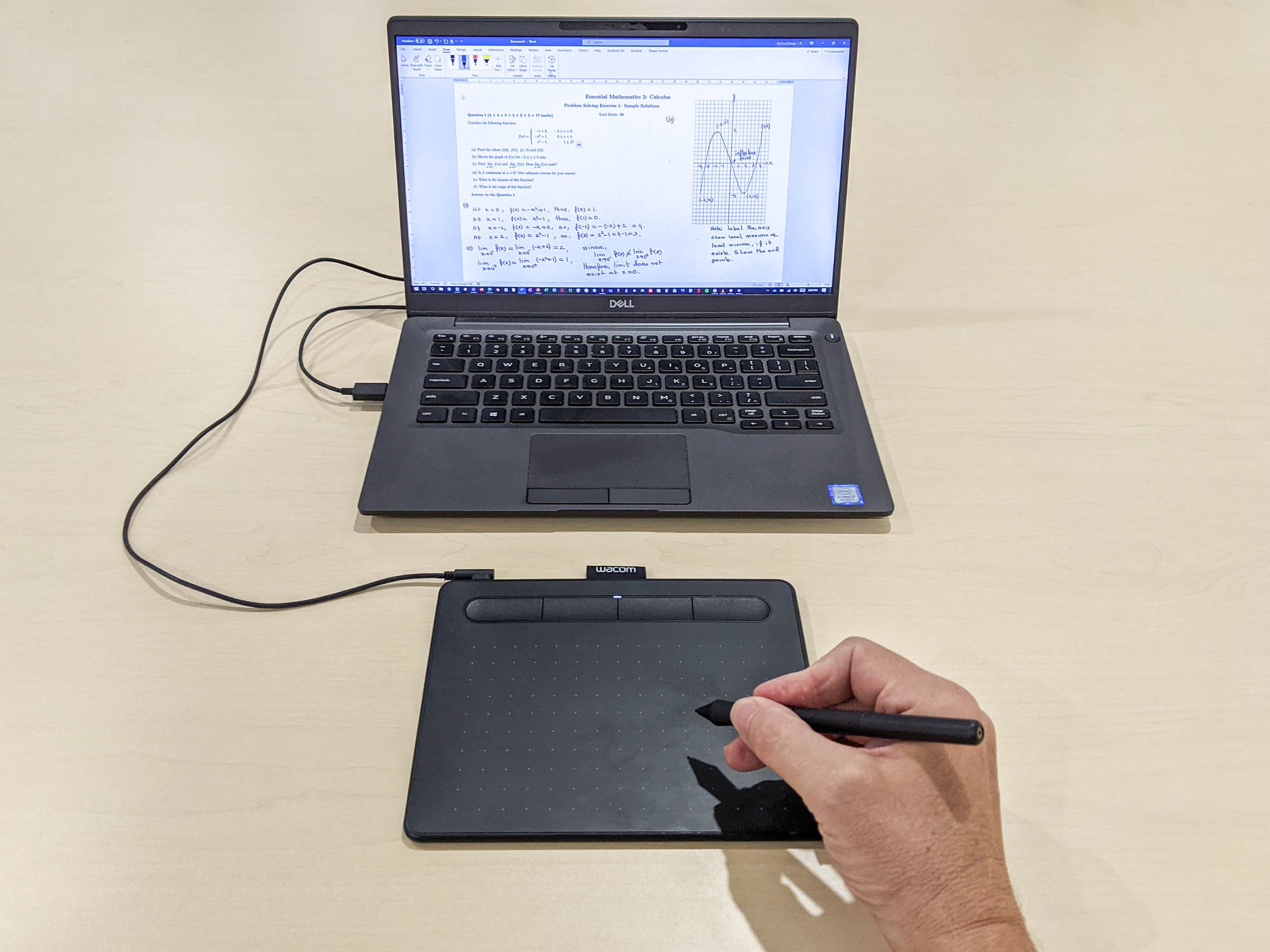

Based on the results of this extensive testing, it was determined that students would be required to obtain a wired Wacom pen tablet to be used with Microsoft Inking in the Microsoft Suite to digitally handwrite equations, diagrams, and graphs. Figure 1 illustrates a typical student technology setup and the resultant digital inking display. Wired technology was preferred due to academic integrity concerns associated with the use of wireless technology in online invigilated exams and the risk of the device running out of battery power part way through an assessment. These specific products, with minimum specifications and features, were recommended to students to mitigate concerns that variations in product functionality would influence or affect student performance (i.e., students who could afford better products would be unfairly advantaged). Support for mastering these technologies was scaffolded across the degree using videos and activities for familiarization such that students could gain proficiency before the pressure of a remotely invigilated online exam.

FIG. 1: Example of a typical student's Wacom pen tablet and laptop setup with a close-up view of the Microsoft Inking display in Microsoft Word

4.2 Teacher Technologies

For teachers, technologies are required to support the communication of mathematics and science online, including course design and development, the creation of multimedia, marking and feedback on student assessments, and the facilitation of synchronous classes. An ideal scenario is the adoption of a single technological solution that will address all of these applications. Technologies available in our case were glassboards, whiteboards, interactive whiteboards, equation editors, graphics tablets, tablet PCs, and document cameras. To determine the best fit of technologies for these applications, technology testing criteria (Table 2) were developed against which technologies could be evaluated.

TABLE 2: Evaluation criteria for teacher technologies

| Criteria | Descriptor | Comparative Suitability: High | Comparative Suitability: Medium | Comparative Suitability: Low |
|---|---|---|---|---|
| Intuitiveness | How easy is the technology to use with basic training? | | | |
| Learning curve | How long does it take to become proficient? | | | |
| Readability | How easy is it to read equations, diagrams, and graphs (i.e., are they clear and well structured)? | | | |
| Expense | What is the cost to the institution? | | | |
| Integration | How easy is it for staff to use with the LMS and other university systems? | | | |
| Synchronous participation | Can staff facilitate virtual classrooms, constructing formulas in real time? | | | |
| Collaboration | Can students and staff collaborate on documents simultaneously? | | | |
| Learning design | Does the technology enable or inhibit learning design or pedagogies? | | | |
| Feedback | Can staff use the technology to give feedback directly on student work? | | | |
| Accessibility | Does the technology present any accessibility concerns? | | | |
| Ongoing usage | Is the technology sustainable and scalable? | | | |

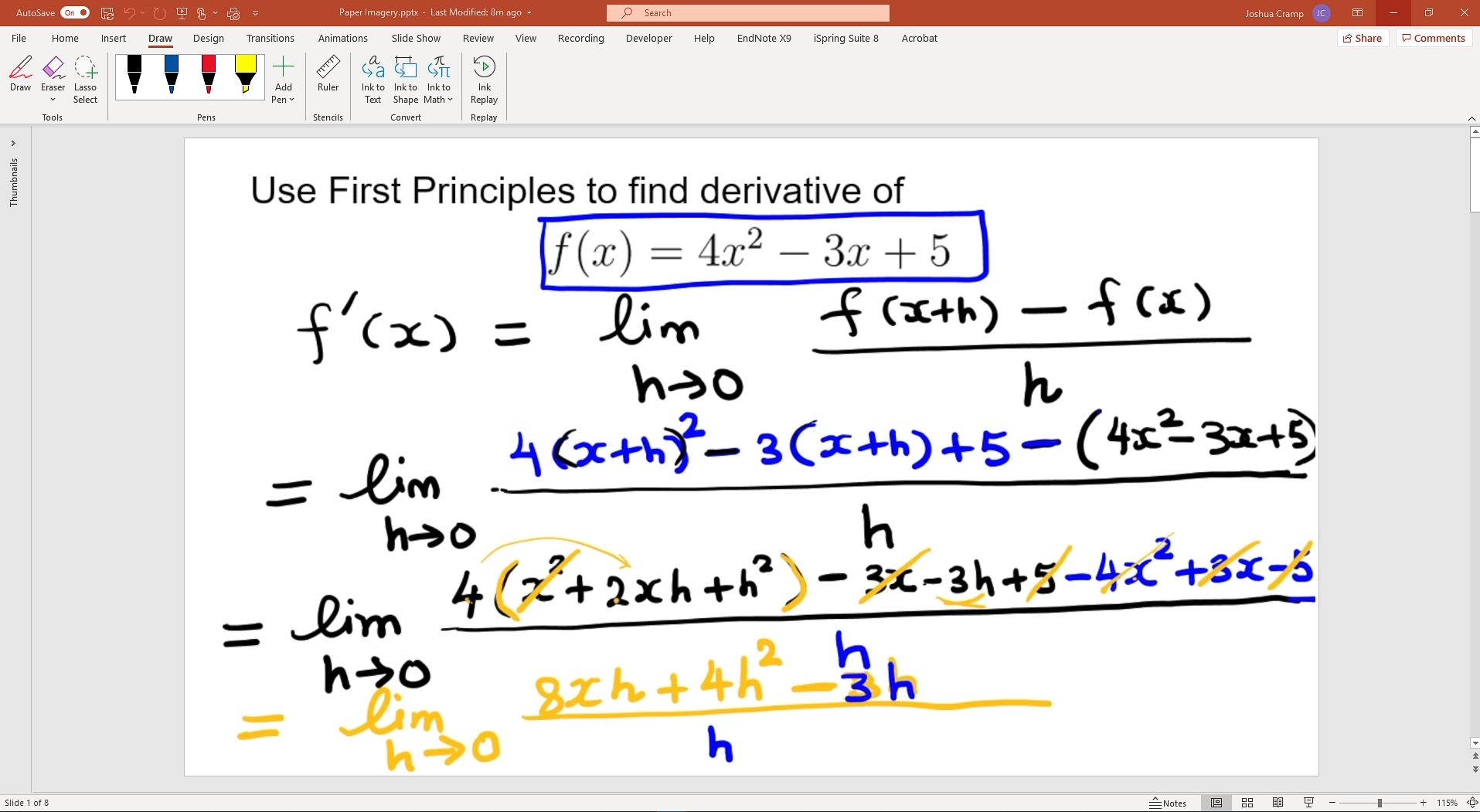

Based on the testing criteria and existing literature (Dekkers & Hayes, 2020; Iwundu, 2018; Maclaren et al., 2017; May, 2018; Urban, 2017), it was determined that academics should use tablet PCs and digital inking, in this case utilizing existing on-campus Wacom Cintiq devices with Microsoft Inking software. Digital inking using tablet PCs was selected, in part because it was a single technology that could be used for creating multimedia, marking, giving feedback on student assessments, and facilitating synchronous classes, therefore minimizing technological complexities. Critically, for our purposes, digital inking enables high mathematical and cognitive fidelity while providing practical improvements to teaching without substantially altering signature STEM pedagogies (Maclaren et al., 2017). Throughout the investigation and testing, digital inking technologies demonstrated the potential to enhance the learning design for multimedia and synchronous classes. In synchronous classes, digital inking demonstrated the capability of increasing teacher presence and enabling active learning strategies (Iwundu, 2018). For multimedia, digital inking enabled presenters to conform to the many research-based principles that guide practice (Mayer, 2017; Seethaler et al., 2020). Figure 2 illustrates an example of a PowerPoint-based multimedia presentation utilizing digital inking. This example demonstrates the use of pre-setup questions, simultaneous narration of the reasoning underpinning content while digitally inking step by step the procedural fluency necessary for its creation, and color to highlight elements and provide cues to learners. Figure 2 shows the presenter view—when published for student viewing, extraneous menu bars and the surrounding frame are cropped out.

FIG. 2: Example of a slide from a multimedia presentation using digital inking

Another critical advantage of the tablet PC combined with digital inking technologies was the low barrier to usage, short learning curve, and the ability to work with existing software with which academics were already familiar, e.g., PowerPoint, Zoom, and Panopto (the institutionally supported video recording and hosting software). Seamless integration of existing technologies and rapid skill acquisition meant academics were able to work on the development of content and facilitation of classes with minimal training and without dedicated technical support. Furthermore, the ease of using these devices to directly annotate and give feedback on student submissions provided an intuitive and effective means for marking. Such ease of use contributes to the sustainability and scalability of these technologies across institutions and has likely more broadly aided the increasing uptake of these devices by academics (Dekkers & Hayes, 2020; Maclaren, 2014; May, 2018).

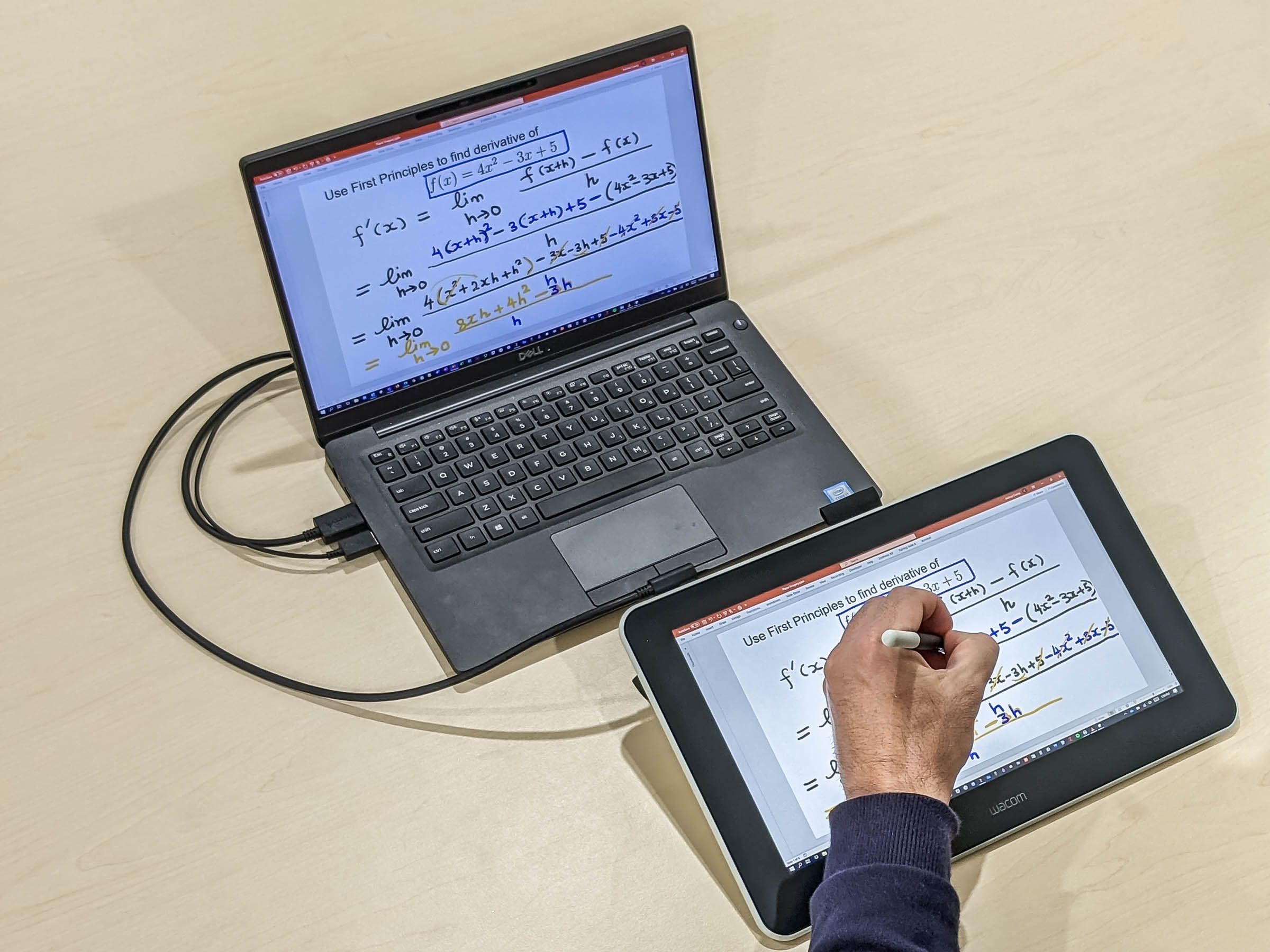

During the course development process, COVID-19 restricted access to campus facilities, requiring a rapid solution to be deployed in order to ensure consistency of multimedia quality and allow access to technology familiar to course writers. After a condensed environmental scan and using lessons learned from the previous technology testing, the Wacom One 13-inch tablet was provided to staff working off campus. This technology was recommended due to the cost, portability, intuitiveness, continuity of experience from on-campus devices, and ease of use with the academics' existing hardware, including potential limitations of home devices. Figure 3 illustrates a typical academic technology setup.

FIG. 3: Example of a typical academic's Wacom pen tablet and laptop setup

For course design and development, another challenge was finding technologies to support the display of mathematical and scientific content in the text-based LMS, while maintaining accessibility standards and not impeding learning or assessment design. Based on the existing literature (Armano et al., 2018), it was evident that the most effective and accessible format for online content was using LaTeX and the MathJax Moodle filter, which was also implemented for the H5P software program. Therefore, the primary challenge was ensuring that the content was in the correct LaTeX format. In our case, due to the collaborative course development process, course content was provided by subject matter experts in a variety of forms, including LaTeX, typed equations using Microsoft Equation Editor, images, as well as handwritten and scanned files. Where content was not supplied using LaTeX, educational developers were forced to investigate technologies that would support the translation of content from its native format into LaTeX. After scoping several options, the development team identified the need for a range of tools, including MyScript Math in conjunction with inking to convert pen tablet digital inking, and MathPix to convert image files. The resulting LaTeX outputs could then be input to Moodle and H5P. For those without expertise in LaTeX, the use of a pen tablet and software to convert digital writing to LaTeX and digitizing handwritten content into LaTeX resulted in significant time efficiencies while maintaining the effective and accessible display of content.
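The final step described above, placing converted LaTeX into LMS content, can be sketched as follows. This is a minimal illustration under stated assumptions, not the development team's workflow: the helper `to_mathjax_inline` is hypothetical, and it assumes that the site's MathJax filter is configured to recognize `\( ... \)` inline delimiters and that a conversion tool may wrap its output in dollar signs. Both assumptions should be checked against the local Moodle configuration and the tool's actual output.

```python
def to_mathjax_inline(latex: str) -> str:
    r"""Wrap a LaTeX fragment in \( ... \) inline delimiters.

    Hypothetical helper: any surrounding $...$ or $$...$$ emitted by a
    conversion tool is removed first so delimiters are not doubled.
    """
    body = latex.strip()
    if len(body) >= 4 and body.startswith("$$") and body.endswith("$$"):
        # Strip display-style dollar delimiters.
        body = body[2:-2].strip()
    elif len(body) >= 2 and body.startswith("$") and body.endswith("$"):
        # Strip inline-style dollar delimiters.
        body = body[1:-1].strip()
    return r"\(" + body + r"\)"


# Example fragment as it might arrive from a handwriting or image converter.
print(to_mathjax_inline(r"$\frac{a}{b}$"))
```

Normalizing delimiters in one place may reduce the manual clean-up needed when content arrives from several sources in different formats.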

5. TECHNOLOGY CHOICE FRAMEWORK FOR ASSESSING TECHNOLOGY SUITABILITY IN ONLINE STEM COURSES

When we embarked upon the process of identifying, evaluating, and selecting technologies to support online STEM learning and teaching, there was a growing body of literature on teacher use of technology; however, there was sparse information on student technologies, other than equation editors, as previously reported. Through systematic research, we investigated, evaluated, and adopted digital inking technologies to address our needs. However, we are acutely aware of the pace of technological change and that context plays a critical role in technology adoption; therefore, while our decision to adopt digital inking was most appropriate for our context and cohort, this is not necessarily a ubiquitous solution to the limitations of text-based LMSs. Having navigated hard choices to determine an appropriate technology for integration into fully online STEM courses, we have learned lessons about the teaching, learning, technological, and user requirements against which online STEM technologies can be evaluated. As such, by reflecting upon and analyzing our experiences, we have created a technology choice framework (see Table 3) that may guide others through the process of technology investigation and adoption. The framework continues to be refined in relation to practice and peer input. It should be noted that other tools are available to support the selection of educational technologies (see, for example, Anstey & Watson, 2018); however, this framework originated from a STEM-specific experience and as such contributes a new angle to the body of scholarship in this space.

TABLE 3: Technology choice framework

| Criteria (Check as Applicable) |

Technology Suitability | |||

| Enhances Practice | Minimally Affects Practice | Inhibits Practice | ||

| Learning and teaching | ||||

| □ | Mathematical fidelity | Accurately represents mathematical and scientific formulas, graphs, and diagrams. | Accurately represents mathematical and scientific formulas. | Cannot accurately represent mathematical and scientific formulas. |

| □ | Cognitive fidelity | Makes visible technique, strategy, accuracy, and efficiency in the application of procedural concepts. Mechanisms such as color and highlighting can be used to give visual cues. | Makes visible technique, strategy, accuracy, and efficiency in the application of procedural concepts. | Partially or does not make visible technique, strategy, accuracy, and efficiency in the application of procedural concepts. |

| □ | Learning and assessment design | Enables improvements to learning design, pedagogies, or the types of formative and summative assessment that can occur. | Does not inhibit learning design or pedagogies and does not limit the types of formative and summative assessment that can occur. | Inhibits learning design, or pedagogies and/or limits the types of formative and summative assessment that can occur (e.g., no graphing/diagrams or only multiple-choice questions). |

| □ | Feedback | Allows teachers to view fully worked solutions and therefore mark students' mathematical and scientific reasoning. Feedback can be annotated directly onto student submissions. | Allows teachers to view worked solutions and therefore mark and give feedback on students' mathematical and scientific reasoning. | Only allows teachers to view numerical solutions and therefore they are unable to mark and give feedback on students' mathematical and scientific reasoning. |

| □ | Collaboration | Can be used synchronously (e.g., in virtual classrooms) and for simultaneous collaboration (e.g., instant collaboration on shared documents). | Can be used synchronously and for asynchronous collaboration (e.g., sequential collaboration on shared documents). | Cannot be used synchronously or collaboratively. |

| □ | Broader applications | Will be useful across all courses in the degree and is authentic to industry practice. | Will be useful across many courses in the degree and is authentic to industry practice. | Will be useful across few courses in a student's degree and/or is not authentic to industry practice. |

| □ | Academic integrity | Can help mitigate academic integrity issues. | Introduces no additional academic integrity issues. | Introduces academic integrity issues. |

| Technological factors | ||||

| □ | Compatibility and setup | Is compatible with institutionally recommended computer hardware, software, and browsers on PC, Mac, and mobile devices, and requires no setup or uses plug-and-play technology. | Is compatible with institutionally recommended computer hardware on PC and Mac and requires some setup or software installation. | Requires high-specification computer hardware, is not compatible with PC and Mac, and/or requires extensive setup, maintenance, and software installation. |

| □ | Integration | Is fully integrated into the LMS and other institutional systems. | Can be partially integrated with the LMS and other institutional systems. | Cannot be integrated with the LMS or other institutional systems. |

| □ | Sustainability | Technology is highly sustainable (e.g., institutional maintenance and support are provided, the tool will be stable over time, and there is no ongoing resource commitment). | Technology is moderately sustainable. | Technology is unlikely to be sustainable (e.g., limited or no institutional support, the tool will go out of date quickly, and/or not scalable to larger class sizes). |

| □ | Data security and privacy | Exceeds institutional security and privacy policies including those relating to intellectual property, licensing, and learning analytics data. | Conforms to institutional security and privacy policies including those relating to intellectual property, licensing, and learning analytics data. | Does not conform to institutional security and privacy policies including those relating to intellectual property, licensing, and learning analytics data. |

| User experience | ||||

| □ | Usability | Is intuitive having a short learning curve (i.e., proficiency is gained quickly, resulting in faster/clearer responses and an improved user experience). | Has a moderate learning curve. | Is not intuitive, having a steep learning curve (i.e., proficiency is gained slowly). |

| □ | Readability | STEM notation is very easy to read and there is little to no margin for error. | STEM notation is easy to read and there is some margin for error. | STEM notation is difficult to read and there is a high margin for error. |

| □ | Speed | Can be used to complete tasks faster than the time to handwrite responses. | Can be used to complete tasks in approximately the same time as handwritten responses. | Is slower to use than the time to handwrite responses. |

| □ | Cost and availability | Is available, affordable, and easily obtained. | Is available, moderately expensive, and/or complex to obtain. | Is prohibitively expensive and/or unobtainable for certain cohorts. |

| □ | Accessibility | Exceeds institutionally mandated accessibility standards. | Meets institutionally mandated accessibility standards. | Does not meet institutionally mandated accessibility standards. |

| Context-specific criteria | ||||

| e.g., Remotely invigilated exam compatibility | e.g., The tool meets the requirements of the online invigilation system and does not impose additional cognitive load for students. | e.g., The tool meets the requirements of the online invigilation system but adds some additional complexities for students. | e.g., The tool contravenes restrictions of the online invigilation system or the tool would be too complex for an exam setting. | |

The framework supports the evaluation of technology suitability by categorizing a technology's ability to enhance practice, minimally affect practice, or inhibit practice. The three-point scale is broadly based on the substitution, augmentation, modification, and redefinition (SAMR) model (Puentedura, 2010) of technology implementation; however, in this case, it was necessary to adapt the SAMR model to take into account technologies that do not improve teaching and learning or user experience. Despite the hierarchical nature of these classifications, it is important to consider the evaluation of technology from a contextual ecological perspective (Hamilton et al., 2016). The three-point scale in the framework is not meant to imply that a technology must meet a pre-determined threshold; rather, the framework enables contextualized evaluation of the comparative strengths and weaknesses of the technologies. Depending on teaching, learning, and institutional factors, it may be more appropriate to choose a tool that is less technologically perfect but better fits teacher, student, or contextual needs. The criteria in the framework have been categorized into domains relating to learning and teaching, technological factors, and user experience. The criteria in each domain are revised versions of those used in our testing and evaluation process; however, we have made a concerted effort to combine student and teacher requirements into a single collated list, since ultimately it is unproductive to consider one group without also considering the impacts on the other.

The technology choice framework works like a rubric to guide decision making by facilitating a comparison of the relative advantages of technology options. When using the framework, we recommend the following procedure:

- Initially, each criterion should be assessed for its relevance to the particular context and the problem being addressed. For example, if an online course is delivered fully asynchronously, then the collaboration criterion (which is within the learning and teaching domain) could be discounted. Where necessary, additional context-specific criteria that address particular needs can be added at the end of the framework.

- Working systematically through each remaining criterion, the target technology should be assessed on the three-point scale (enhances, minimally affects, or inhibits practice). This process can be repeated for each of the technologies under consideration.

- Completed frameworks provide a qualitative overview of the relative strengths and weaknesses of each technology, enabling objective comparisons between options.
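As an illustration only, the procedure above could be recorded in a short script. The following Python sketch is not part of the study: the names `Rating`, `FRAMEWORK`, `select_criteria`, and `summarize` are hypothetical, the criteria list covers only the rows shown in this part of the table, and the ratings for "Tool A" are placeholders rather than findings. It tallies ratings per domain instead of computing a total score, in keeping with the point that the scale does not imply a threshold.

```python
from collections import Counter
from enum import Enum


class Rating(Enum):
    """The three-point scale used in the framework."""
    ENHANCES = "enhances practice"
    MINIMAL = "minimally affects practice"
    INHIBITS = "inhibits practice"


# Criteria grouped by domain (names taken from the table rows shown above;
# extend this dictionary if the full framework contains further criteria).
FRAMEWORK = {
    "Learning and teaching": [
        "Cognitive fidelity", "Learning and assessment design", "Feedback",
        "Collaboration", "Broader applications", "Academic integrity",
    ],
    "Technological factors": [
        "Compatibility and setup", "Integration", "Sustainability",
        "Data security and privacy",
    ],
    "User experience": [
        "Usability", "Readability", "Speed", "Cost and availability",
        "Accessibility",
    ],
}


def select_criteria(framework, excluded=(), context_specific=()):
    """Step 1: drop criteria that are irrelevant to the context and
    append any context-specific criteria as an extra domain."""
    selected = {
        domain: [c for c in criteria if c not in excluded]
        for domain, criteria in framework.items()
    }
    if context_specific:
        selected["Context-specific criteria"] = list(context_specific)
    return selected


def summarize(selected, ratings):
    """Steps 2 and 3: check that every remaining criterion has a rating,
    then tally the ratings per domain for side-by-side comparison."""
    summary = {}
    for domain, criteria in selected.items():
        missing = [c for c in criteria if c not in ratings]
        if missing:
            raise ValueError(f"Unrated criteria in {domain}: {missing}")
        summary[domain] = Counter(ratings[c] for c in criteria)
    return summary


if __name__ == "__main__":
    selected = select_criteria(
        FRAMEWORK,
        excluded={"Collaboration"},  # e.g., a fully asynchronous course
        context_specific=["Remotely invigilated exam compatibility"],
    )
    # Placeholder ratings for a hypothetical "Tool A" (not study results).
    ratings_a = {c: Rating.MINIMAL for cs in selected.values() for c in cs}
    ratings_a["Feedback"] = Rating.ENHANCES
    ratings_a["Speed"] = Rating.INHIBITS
    for domain, tally in summarize(selected, ratings_a).items():
        print(domain, {r.value: n for r, n in tally.items()})
```

Running `summarize` once per candidate technology, with the same `selected` criteria, gives one tally per tool. The tallies are meant to prompt discussion of relative strengths and weaknesses, not to rank the tools automatically.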

Although this technology choice framework is valuable in assessing technology suitability for online STEM programs, it has limitations. In the post-COVID climate, it is important not to conflate fully online teaching and learning with emergency remote teaching since the technological solutions may not be applicable to both situations. The rapid pivot to remote learning and teaching brings a new set of contextual factors unaccounted for in this case study, such as the time and resources required to redesign courses to incorporate new technologies. Thus, although this framework may provide a broad outline of factors to consider, it is critical to reflect on these within the specific disciplinary, institutional, student, and environmental context at the time. Furthermore, the technologies and framework discussed are predicated on the premise that educators try to replicate successful signature pedagogies of face-to-face teaching in the online environment. On the other hand, it is useful to remember Shulman's (2005, p. 56) reflection that “[signature pedagogies] persist even when they begin to lose their utility”; therefore, in some contexts it may be worth investing resources to explore new STEM pedagogies (Deák et al., 2021). Regardless, the technology choice framework is merely an instrument to assist in assessing the suitability of technology for supporting online STEM teaching and learning. Ultimately, successful student learning will be the outcome of a myriad of factors, of which the selection of appropriate technology is only one.

6. CONCLUSIONS

This paper outlines some of the key considerations for academics and educational developers when adopting technologies to address the issues of online STEM learning and teaching in LMSs that are ill-suited to the task of communicating complex mathematical and scientific formulas, graphs, and diagrams. Determining an appropriate technology for integration into online STEM courses is complex, and whichever solution is selected will have advantages and limitations; however, despite the hard choices, this paper demonstrates that positive outcomes can be achieved by a well-researched, systematic, and broadly applicable approach. Until LMS design mitigates the limitations of text-based environments, academics and educational developers will continue to be challenged to assess and adopt technologies that meet their needs. The technology choice framework offers one solution by providing a tool that can facilitate the systematic, criterion-referenced, objective decision making necessary to evaluate and compare technological innovations. Given the scarcity of literature investigating the implementation of digital inking technologies for students studying STEM online, further contributions are essential in informing and guiding academics and educational developers working in this space. Studies that investigate student acceptance of these technologies and their experiences would make a valuable contribution.

REFERENCES

Akelbek, S., & Akelbek, M. (2009). Developing and delivering a successful online math course despite the limitations of cost efficient technology available to students. In T. Bastiaens, J. Dron, & C. Xin (Eds.), Proceedings of E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education (pp. 33–36), Vancouver, Canada.

Alksnis, N., Malin, B., Chung, J., Halupka, V., & Vo, T. (2020). From paper to digital: Learnings from a digital uplift program. Proceedings of the Australasian Association for Engineering Education 2020 Conference, Sydney, Australia.

Anstey, L., & Watson, G. (2018, September 10). A rubric for evaluating e-learning tools in higher education. EDUCAUSE Review.

Armano, T., Borsero, M., Capietto, A., Murru, N., Panzarea, A., & Ruighi, A. (2018). On the accessibility of Moodle 2 by visually impaired users, with a focus on mathematical content. Universal Access in the Information Society, 17(4), 865–874. https://doi.org/10.1007/s10209-017-0546-8

Basham, J. D., & Marino, M. T. (2013). Understanding STEM education and supporting students through universal design for learning. Teaching Exceptional Children, 45(4), 8–15. https://doi.org/10.1177/004005991304500401

Bourne, J., Harris, D., & Mayadas, F. (2005). Online engineering education: Learning anywhere, anytime. Journal of Engineering Education, 94(1), 131–146. https://doi.org/10.1002/j.2168-9830.2005.tb00834.x

Boyer, E. L. (1990). Scholarship reconsidered: Priorities of the professoriate. Princeton University Press.

Brady, M., Devitt, A., & Kiersey, R. A. (2019). Academic staff perspectives on technology for assessment (TfA) in higher education: A systematic literature review. British Journal of Educational Technology, 50(6), 3080–3098. https://doi.org/10.1111/bjet.12742

Çalik, M., & Coll, R. K. (2012). Investigating socioscientific issues via scientific habits of mind: Development and validation of the Scientific Habits of Mind Survey. International Journal of Science Education, 34(12), 1909–1930. https://doi.org/10.1080/09500693.2012.685197

Cramp, J., Medlin, J. F., Lake, P., & Sharp, C. (2019). Lessons learned from implementing remotely invigilated online exams. Journal of University Teaching and Learning Practice, 16(1). https://doi.org/10.53761/1.16.1.10

Cuoco, A., Paul Goldenberg, E., & Mark, J. (1996). Habits of mind: An organizing principle for mathematics curricula. The Journal of Mathematical Behavior, 15(4), 375–402. https://doi.org/10.1016/S0732-3123(96)90023-1

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008

Deák, C., Kumar, B., Szabó, I., Nagy, G., & Szentesi, S. (2021). Evolution of new approaches in pedagogy and STEM with inquiry-based learning and post-pandemic scenarios. Education Sciences, 11(7), 319. https://doi.org/10.3390/educsci11070319

Dekkers, A., & Hayes, C. (2020, October 13–14). The new chalkboard: The role of digital pen technologies in tertiary mathematics teaching within Covid-19 restricted environments [Conference session]. Scholarship of Tertiary Teaching Online Conference, Rockhampton, Queensland, Australia. https://cloudstor.aarnet.edu.au/plus/s/EVNVVifqiGGeQMM

Draskovic, D., Misic, M., & Stanisavljevic, Z. (2016). Transition from traditional to LMS supported examining: A case study in computer engineering. Computer Applications in Engineering Education, 24(5), 775–786. https://doi.org/10.1002/cae.21750

Flyvbjerg, B. (2006). Five misunderstandings about case-study research. Qualitative Inquiry, 12(2), 219–245. https://doi.org/10.1177/1077800405284363

Hamilton, E. R., Rosenberg, J. M., & Akcaoglu, M. (2016). The substitution augmentation modification redefinition (SAMR) model: A critical review and suggestions for its use. TechTrends, 60(5), 433–441. https://doi.org/10.1007/s11528-016-0091-y

Iwundu, C. (2018). Enhancing synchronous online learning using digital graphics tablets. In E. Langran & J. Borup (Eds.), Proceedings of the Society for Information Technology & Teacher Education International Conference (pp. 180–186), Washington, DC, United States. Association for the Advancement of Computing in Education (AACE).

Lafreniere, M. (2016). Best practices of digital inking for student engagement. Proceedings of the EdMedia 2016 World Conference on Educational Media and Technology (pp. 292–297), Vancouver, Canada. Association for the Advancement of Computing in Education (AACE).

Loch, B., Lowe, T. W., & Mestel, B. D. (2015). Master's students' perceptions of Microsoft Word for mathematical typesetting. Teaching Mathematics and Its Applications, 34(2), 91–101. https://doi.org/10.1093/teamat/hru020

Loch, B., & McDonald, C. (2007). Synchronous chat and electronic ink for distance support in mathematics. Innovate: Journal of Online Education, 3(3).

Lucas, B., & Hanson, J. (2016). Thinking like an engineer: Using engineering habits of mind and signature pedagogies to redesign engineering education. International Journal of Engineering Pedagogy (IJEP), 6(2), 4–13. https://doi.org/10.3991/ijep.v6i2.5366

Maclaren, P. (2014). The new chalkboard: The role of digital pen technologies in tertiary mathematics teaching. Teaching Mathematics and Its Applications, 33(1), 16–26. https://doi.org/10.1093/teamat/hru001

Maclaren, P., Wilson, D., & Klymchuk, S. (2017). I see what you are doing: Student views on lecturer use of tablet PCs in the engineering mathematics classroom. Australasian Journal of Educational Technology, 33(2), 173–188. https://doi.org/10.14742/ajet.3257

May, H. (2018). Wacom leads to a more efficient classroom model. Journal for Research and Practice in College Teaching, 3(2), 178–181.

Mayer, R. E. (2017). Using multimedia for e-learning. Journal of Computer Assisted Learning, 33(5), 403–423. https://doi.org/10.1111/jcal.12197

McInnes, R., Aitchison, C., & Sloot, B. (2020). Building online degrees quickly: Academic experiences and institutional benefits. Journal of University Teaching and Learning Practice, 17(5). https://doi.org/10.53761/1.17.5.2

Misfeldt, M., & Sanne, A. (2012). Formula editors and handwriting in mathematical e-learning. In M. Khosrow-Pour (Ed.), Virtual Learning Environments: Concepts, Methodologies, Tools and Applications (pp. 1578–1593). https://doi.org/10.4018/978-1-60960-875-0.ch017

Molenda, M. (2003). In search of the elusive ADDIE model. Performance Improvement, 42(5), 34–37. https://doi.org/10.1002/pfi.4930420508

Nuland, S. E. V., Hall, E., & Langley, N. R. (2020). STEM crisis teaching: Curriculum design with e-learning tools. FASEB BioAdvances, 2(11), 631–637. https://doi.org/10.1096/fba.2020-00049

Parkes, J., & Zimmaro, D. (2016). Learning and assessing with multiple-choice questions in college classrooms. Routledge. https://doi.org/10.4324/9781315727769

Puentedura, R. R. (2010). SAMR and TPCK: Intro to advanced practice. Retrieved April 14, 2021, from http://hippasus.com/resources/sweden2010/SAMR_TPCK_IntroToAdvancedPractice.pdf

Seethaler, S., Burgasser, A., Bussey, T., Eggers, J., Lo, S., Rabin, J., Stevens, L., & Weizman, H. (2020). A research-based checklist for development and critique of STEM instructional videos. Journal of College Science Teaching, 50(1). https://www.nsta.org/journal-college-science-teaching/journal-college-science-teaching-septemberoctober-2020/research

Shulman, L. S. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52–59. https://doi.org/10.1162/0011526054622015

Soares, F., & Lopes, A. P. (2018). Online assessment through Moodle platform. ICERI2018 Proceedings (pp. 4952–4960). https://doi.org/10.21125/iceri.2018.2124

Stephens, J. (2018). The graphics tablet—a valuable tool for the digital STEM teacher. The Physics Teacher, 56(4), 230–231. https://doi.org/10.1119/1.5028238

Trenholm, S. (2007). A review of cheating in fully asynchronous online courses: A math or fact-based course perspective. Journal of Educational Technology Systems, 35(3), 281–300. https://doi.org/10.2190/Y78L-H21X-241N-7Q02

Urban, S. (2017). Pen-enabled, real-time student engagement for teaching in STEM subjects. Journal of Chemical Education, 94(8), 1051–1059. https://doi.org/10.1021/acs.jchemed.7b00127

Van Dijk, J. (2017). Digital divide: Impact of access. In The International Encyclopedia of Media Effects. John Wiley & Sons, Inc. https://doi.org/10.1002/9781118783764.wbieme0043

Winberg, C., Adendorff, H., Bozalek, V., Conana, H., Pallitt, N., Wolff, K., Olsson, T., & Roxå, T. (2019). Learning to teach STEM disciplines in higher education: A critical review of the literature. Teaching in Higher Education, 24(8), 930–947. https://doi.org/10.1080/13562517.2018.1517735

Winger, B., Vo, T., Halupka, V., & Wordley, S. (2019). Scoping e-assessment tools for engineering. In Proceedings of the 30th Annual Conference for the Australasian Association for Engineering Education (AAEE 2019): Educators Becoming Agents of Change: Innovate, Integrate, Motivate (pp. 404–410). Brisbane, Queensland. Engineers Australia.

Yin, R. K. (2017). Case study research and applications: Design and methods. Sage Publications.
