WEEKLY LOW-STAKES ASSESSMENTS PROMOTE STUDENT MOTIVATION, ENGAGEMENT, AND LEARNING IN ASYNCHRONOUS ONLINE COURSES

Department of Geography, Simon Fraser University, 8888 University Drive, Burnaby, British Columbia, Canada

*Address all correspondence to: Tara Holland, Department of Geography, Simon Fraser University, 8888 University Drive, Burnaby, British Columbia, V5A 1S6, Canada, E-mail: tholland@sfu.ca

Since the COVID-19 pandemic, many universities have increased their offerings of asynchronous online courses, including by encouraging instructors to convert existing courses to an online format. The move from in-person to online course delivery brings the challenge of maintaining existing, and creating new, ways of promoting student motivation and engagement so that students attain course learning goals. This paper discusses the development and impact of an instructional intervention made in two large-enrollment active learning introductory courses at a Canadian university that were transformed to an asynchronous online course modality during and after the COVID-19 pandemic. Following best practices in online teaching, in-class activities were redesigned into weekly low-stakes, formative “class engagement activities” (CEAs). The study used a mixed-methods research design to understand the impact that CEAs have on student motivation, engagement, and perceptions of learning. Results demonstrate that despite the low grading weight of the CEAs, the activities achieved high levels of student engagement, which was associated with higher final exam performance, motivation to learn, and a perceived deeper understanding of course content. We conclude that CEAs are a relatively low-effort strategy for instructors to engage students in their course materials in the asynchronous online course environment and recommend best practices for incorporating these assignments into course design.

KEY WORDS: class engagement activities (CEAs), course design, active learning, instructional strategies, COVID-19 pandemic

1. INTRODUCTION

Since the spring 2020 pivot to emergency remote teaching, our Canadian institution has actively encouraged instructors to increase asynchronous online and blended (part asynchronous online, part in-person) course offerings for students. Consistent with shifting modality preference trends in higher education (Burns, 2023), this push is partly driven by post-COVID-19 pandemic student and instructor demand for more flexible course delivery methods; one result is rapidly growing enrollment in asynchronous online courses. Some faculty members are building new courses to take advantage of this modality shift, while others are seeking ways to modify in-person courses effectively and efficiently for the online learning environment, a common challenge worldwide in the postpandemic higher education landscape (Ahshan, 2021; Daniels et al., 2021).

Engagement and motivation are two critical pedagogical aspects of student learning. Notably, it can be challenging to motivate students and keep them engaged in asynchronous online courses (Bridges et al., 2023; Randi & Corno, 2022; Wegmann & Thompson, 2013). Research on self-regulation and motivation in learning has been influential in understanding student success in online environments, where learners must manage their time, set goals, and stay motivated with less external structure. Zimmerman's (1989) social-cognitive model of self-regulated learning (SRL) emphasizes the importance of metacognitive strategies and self-efficacy, both of which are critical in online settings where instructor guidance is limited. Furthermore, Pintrich (2003) suggests that motivational constructs such as task value and goal orientation are highly relevant for maintaining engagement in asynchronous formats. Drawing on this SRL perspective, we conceptualize student engagement according to three components widely discussed in the literature: behavioral, cognitive, and emotional (Fredricks et al., 2004). Behavioral engagement relates to how students engage with learning activities; cognitive engagement relates to how students think about their learning; and emotional engagement relates to how students feel about their learning (Fuller et al., 2018). We thus define student engagement in the present context as the enthusiasm and excellence of participation in class learning activities (Wegmann & Thompson, 2013).

It is well established that active learning increases engagement and improves student performance in face-to-face STEM classes (Freeman et al., 2014), including narrowing performance gaps for underrepresented students (Theobald et al., 2020). Active student engagement has also been found to correlate positively with student performance and satisfaction in online courses (Prince et al., 2020). As readers may recall, during and immediately following the period of emergency remote teaching, education research revealed that student engagement in online courses declined when compared to face-to-face courses (Walker & Koralesky, 2021; Wester et al., 2021). Within online courses, students were more highly motivated by synchronous course components (Walker & Koralesky, 2021). Consequently, we were interested in implementing active learning strategies that promote motivation and engagement in asynchronous components of an online course.

One strategy to increase student motivation and engagement in online courses is frequent low-stakes assessment (Casselman, 2021; Cavinato et al., 2021; Dennis et al., 2007; Holmes, 2015; Prince et al., 2020). Low-stakes assessments are assessment activities that occur relatively often within a teaching term and have minimal consequences for the student's grade. These assessments are designed to be formative and to support student attainment of learning goals, including for nontraditional students in the early years of university (Sambell & Hubbard, 2019). Frequent low-stakes assessments often take the form of tests, which can incentivize participation and enhance engagement (Schrank, 2016). Vaessen et al. (2017) found that while frequent testing can increase students' study motivation, students do not perceive it as important to their learning process, and it can also lead to negative effects such as stress and lower self-confidence. Vaessen et al. (2017) suggest that being transparent with students about the purpose of the assessments can help mitigate these negative effects. Similarly, Prince et al. (2020) highlight that clearly communicating expectations to students about how to achieve active engagement is crucial in the online learning environment. In terms of assignment structure, higher levels of motivation are associated with assignments that facilitate learner autonomy, social interaction, personal interest, and practical utility of the task or real-world applications (Barua & Lockee, 2025; Ismailov & Ono, 2021; Kessels et al., 2024; Martin & Bolliger, 2018; Pintrich, 2003).

This paper discusses the development and impact of an instructional intervention made in two large-enrollment introductory courses in geography and environmental science at a Canadian university. These courses were transformed to an asynchronous online course modality during and after the COVID-19 pandemic: EVSC 100 (Introduction to Environmental Science) and GEOG 104 (Climate Change, Water, and Society). EVSC 100 is a science course with an enrollment of approximately 200 students, and GEOG 104 is an interdisciplinary course with an enrollment of approximately 300–600 students, depending on the semester. Both courses were taught with active learning pedagogy (Bridges et al., 2023) in the original face-to-face versions, with frequent in-class activities that encouraged and incentivized student engagement and facilitated student achievement of learning outcomes.

When transitioning the courses online in 2020, the prior in-class activities were redesigned based on best online teaching practices into weekly “class engagement activities” (CEAs) within the Canvas Learning Management System (LMS). CEAs are brief, yet challenging, low-stakes assessments that ask students to engage with the week's materials in varied ways. They can include short quizzes, discussions, reflection exercises, problem solving, creative expressions, and application of course concepts to students' lived experience. The CEAs are strategically designed to incorporate three main components of motivation: supporting students' control of their learning; providing opportunities to link the content to their personal experience; and fostering self-efficacy (Pintrich, 2003). Each activity purposefully links to one of the weekly course learning goals, and the activities incrementally build through the revised levels of Bloom's taxonomy (Krathwohl, 2002) over the semester as students gain knowledge and confidence in their work. As low-stakes assessments, CEAs are graded for effort and completion. Together, they are worth between 6 and 10% of the final grade, depending on the course. CEAs are introduced to students as opportunities to dive deeper into and think critically about an aspect of the weekly material, and this framing is reinforced throughout the course.

There are 12 CEAs throughout the 13-week semester, and each student's two lowest CEA grades are purposefully “dropped” at the end of the term. This effectively means that students can choose 10 out of 12 CEAs to complete; however, they are encouraged to complete all of them. Recognizing that instructor–student interaction and feedback are important determinants of engagement (Czerkawski & Lyman, 2016; Kelsey & D'Souza, 2004; Martin & Bolliger, 2018; Muir et al., 2019), the instructor and/or graduate teaching assistant(s) provide formative feedback on each CEA, either to individual students or as overall feedback to the whole class.
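For instructors reproducing a similar policy, the drop-two-lowest rule can be written as a short calculation. The sketch below is illustrative only and rests on stated assumptions: each CEA is scored as a fraction of full credit (0.0 to 1.0), a missed CEA scores 0.0, and the kept CEAs share the component weight equally. The function name `cea_component_grade` is hypothetical and does not describe how grades were actually computed in these courses.

```python
def cea_component_grade(scores, weight_percent, n_dropped=2):
    """Return the CEA contribution to the final grade, in percentage points.

    Hypothetical helper (assumptions, not the courses' actual gradebook):
    scores are fractions of full credit (0.0-1.0), a missed CEA is 0.0,
    and every kept CEA carries an equal share of weight_percent.
    """
    if len(scores) <= n_dropped:
        raise ValueError("need more scores than the number dropped")
    # Sort ascending and discard the n_dropped lowest scores.
    kept = sorted(scores)[n_dropped:]
    # Average the kept scores and scale by the component weight.
    return weight_percent * sum(kept) / len(kept)


# Hypothetical student: skipped two CEAs, full effort credit on the other ten.
student = [1.0] * 10 + [0.0, 0.0]
print(cea_component_grade(student, weight_percent=10))  # 10.0
```

Under these assumptions, a student who skips any two activities can still earn the full CEA weight, which is the practical effect of the drop policy described above.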

This research project spanned three semesters of each course between summer 2020 and summer 2021, while the courses were offered online (EVSC 100: summer 2020, fall 2020, and summer 2021; GEOG 104: fall 2020, spring 2021, and summer 2021), with the goal of measuring the impact of CEAs on student motivation, engagement, and learning.

2. METHODS

This study used a mixed-methods research design (Creswell & Plano Clark, 2011). To understand the impact CEAs have on student motivation, engagement, and perceptions of learning, we administered a postcourse survey in EVSC 100 and GEOG 104 in the summer 2021 semester. Survey completion was incentivized with a chance of winning one of two randomly drawn $50 cash prizes in each course. The questions were modified from a validated survey measuring self-reported engagement (Fuller et al., 2018). The survey asked students to rate pairs of items representing three components of engagement (i.e., behavioral, emotional, and cognitive; Table 1) for each of six CEAs in each course. The items were rated on a five-point Likert scale ranging from “strongly agree” to “strongly disagree.” Ratings were combined into an engagement score following Fuller et al. (2018). The six CEAs represented the range of cognitive levels in the revised Bloom's taxonomy (Krathwohl, 2002) (see Table 2). Two additional researcher-developed questions were added to the survey. One question asked students to report the average length of time they spent on the CEA each week, and one open-ended question was “Do you feel that completing the CEAs impacted your learning in the course? Please explain.” We coded answers to the latter question through an iterative, inductive process of thematic analysis (Braun & Clarke, 2006) with respect to elements of engagement and learning that emerged from the responses.

TABLE 1: Postcourse self-reported engagement survey items relating to CEAs

| Engagement Component | First Item of Pair | Second Item of Pair |
|---|---|---|
| Behavioral | 1. I devoted my full attention to this activity | 2. I pretended to participate in this activity (i.e., wrote a rushed answer without really thinking about it) |
| Emotional | 3. While doing this activity, I enjoyed learning new things | 4. While doing this activity, I felt discouraged |
| Cognitive | 5. This activity really helped my learning | 6. In completing this activity, I tried a new approach or way of thinking about the content |

TABLE 2: CEAs represented in the survey by course and alignment to the revised Bloom's taxonomy of higher-order thinking levels (Krathwohl, 2002)

| Bloom's Level | GEOG 104 CEAs (Type of Activity) | EVSC 100 CEAs (Type of Activity) |
|---|---|---|
| Remember | Reflecting on the Greenland Ice Sheet System (recall/reflection) | Nitrogen Pollution Solutions – N Footprint Calculator (recall/simple calculation/reflection) |
| Understand | Vulnerability & Adaptation: Why does Local Context Matter? (research & reflection) | Deforestation Solutions (research & online discussion) |
| Apply | Investigating Earth's Energy Balance (application of lecture concepts to a spreadsheet calculation & interpretation) | Ocean Acidification, Biosphere Integrity, and Climate Change Links (drawing connections among course topics) |
| Analyze | Are We Water Secure in Canada? (case study analysis) | Could Aerosols Be a Climate Change Solution? (reading synthesis & analysis; online discussion) |
| Evaluate | Climate Change Myth Busting (using evidence to evaluate and debunk climate change misinformation; online discussion) | Correcting Climate Change Misconceptions (using evidence to evaluate and debunk climate change misinformation; summary) |
| Create | How Can We Best Communicate Climate Change? (creating a communication plan for different audiences) | A Water Cycle for the Anthropocene (drawing a hydrologic cycle that includes representation of human influences) |

We quantitatively assessed the relationship between CEAs and student performance through a regression analysis of CEA scores versus final exam grades for each semester the courses were offered online between summer 2020 and summer 2021, using Kendall's tau-b correlation coefficient. We measured engagement quantitatively through descriptive statistics of completion rate and average time spent on CEAs in all semesters. We analyzed postcourse survey responses with respect to the revised Bloom's taxonomy cognitive level (Krathwohl, 2002) of each CEA to see whether there was a relationship between the cognitive level of the assessment and engagement. We were also interested in determining whether grading weight influenced completion of CEAs (i.e., is there a lower limit to “low stakes” below which engagement is discouraged?), and therefore compared the completion rate in GEOG 104 between the spring 2021 term, when CEAs were worth 10%, and the summer 2021 term, when they were reduced to 6% of the overall course grade.

3. RESULTS AND DISCUSSION

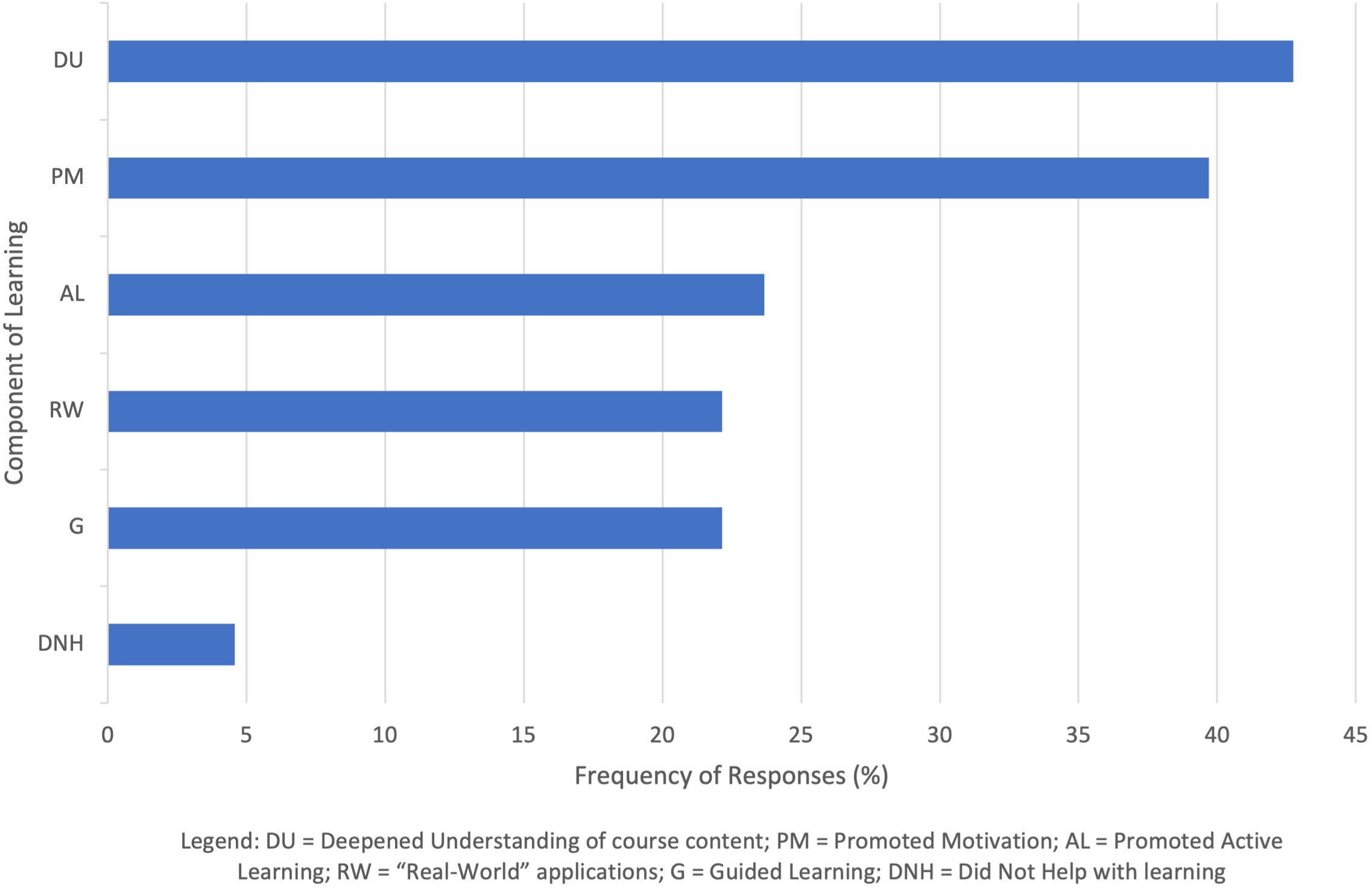

There were 53 students in EVSC 100 and 90 students in GEOG 104 who completed the postcourse survey in summer 2021, representing response rates of 41% and 34%, respectively. Student answers to the open-ended question “Do you feel that completing the CEAs impacted your learning in the course? Please explain” were coded, using an iterative process (Braun & Clarke, 2006), into themes relating to students' perceptions of their learning through completing the CEAs. Of the 143 completed surveys, 141 included a response to the open-ended question. Ten of those responses lacked an explanation (e.g., the student wrote only “Yes”) and were omitted from the analysis, leaving 131 responses to analyze. Through the coding process, six themes were identified that represent components of learning: deepened understanding of course content; promoted motivation; promoted active learning; gave a “real-world” application; helped guide learning; and did not help with learning (Fig. 1). Some comments were coded with more than one theme. Themes (i.e., components of learning) and representative student comments for each theme are given in Table 3.

FIG. 1: Frequency of responses for each component of learning, summer 2021 (n = 131)

TABLE 3: Themes identified from analysis of student responses to the question “Do you feel that completing the class engagement activities impacted your learning in the course?” N.B. Responses could be coded with more than one theme; hence percentages do not add up to 100%.

| Percent of Responses | Theme (Component of Learning) | Representative Student Responses |
|---|---|---|
| 43 | Deepened understanding of course content | I found the class engagement activities to be very fun and thought-provoking. Due to how a majority of them relied heavily on the slides, articles, and videos of the week, I was able to apply the information I've learned from them towards the activities, which cemented my learning. My favorite was the Climate Change Myth Busting activity. It taught me that it's not just important to know that a source is unreliable, but that it's also important to be able to independently point out why it's unreliable using scientifically backed information. |
| 40 | Promoted motivation | They helped me a lot, and it was fun learning new topics every week. The strong point for me was the length of the activities. They were not long, hence, it motivated me to complete each one every week. I think that the class engagement activities helped my learning in the course because they provide an extra way to think about the material. It is helpful that they are graded for effort instead of correctness because there is less pressure which allows the activities to be more fun and nonintimidating. |
| 24 | Promoted active learning | I feel that they were related to each week's lecture material but also expanding from what we learned instead of reviewing what we already learned, as well as applying it to real problems/analysis/looking for solutions. It was a more active form of learning which I appreciated. The activities presented problems related to a planetary boundary and allowed us to explore, investigate, and apply our own thinking. This helped improve my learning as I was actually seeking to find solutions and make connections that the engagement activity provided. |
| 22 | Gave a real-world application | Though they did contribute to my learning and understanding of learning outcomes for this course, they played an important role in changing my day-to-day life. For example, I noticed efforts to change my life to be more environmentally sustainable. |
| 22 | Guided them through course material | First of all, it is a good practice. I usually do such activities only when I am done with video/reading material. After, I test my new knowledge on the engagement activities. |
| 5 | Did not help with learning | The class engagement activities took too much time to think about and complete for something that is only worth one mark. A few of the activities I wanted to do but I forgot to submit it in time so I received 0. |

Students overwhelmingly reported that they believed the CEAs helped their learning. The most frequent themes in the responses related to deepening understanding of course content (43%) and promoting motivation (40%), followed by encouraging active learning (24%), linking course concepts to the real world and personal experience (22%), and helping guide their learning in the course (22%). Less than 5% of respondents indicated that CEAs were not helpful to their learning, usually citing the CEAs' low grading weight or time-consuming nature. As with all voluntary surveys, these responses may be subject to self-selection bias: students who chose to complete the survey may already have been more engaged in the course than those who did not (Bowman, 2010).
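Because one response can carry several codes, each theme's percentage is computed against the number of analyzed responses rather than the number of codes, which is why the percentages in Table 3 sum to more than 100. The sketch below illustrates that calculation under the assumption that each analyzed response is stored as a set of theme labels; the function name and the coded responses are hypothetical, not study data.

```python
from collections import Counter


def theme_percentages(coded_responses):
    """Percent of analyzed responses coded with each theme.

    coded_responses: one set of theme labels per analyzed response
    (hypothetical data structure). A response with several themes is
    counted once under each, so the percentages can exceed 100 in total.
    """
    n = len(coded_responses)
    counts = Counter(theme for themes in coded_responses for theme in themes)
    # Denominator is the number of responses, not the number of codes.
    return {theme: round(100 * c / n) for theme, c in counts.items()}


# Hypothetical coded responses (illustrative only, not study data).
responses = [
    {"deepened understanding", "promoted motivation"},
    {"deepened understanding"},
    {"promoted active learning", "real-world application"},
    {"did not help"},
]
print(theme_percentages(responses))
```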

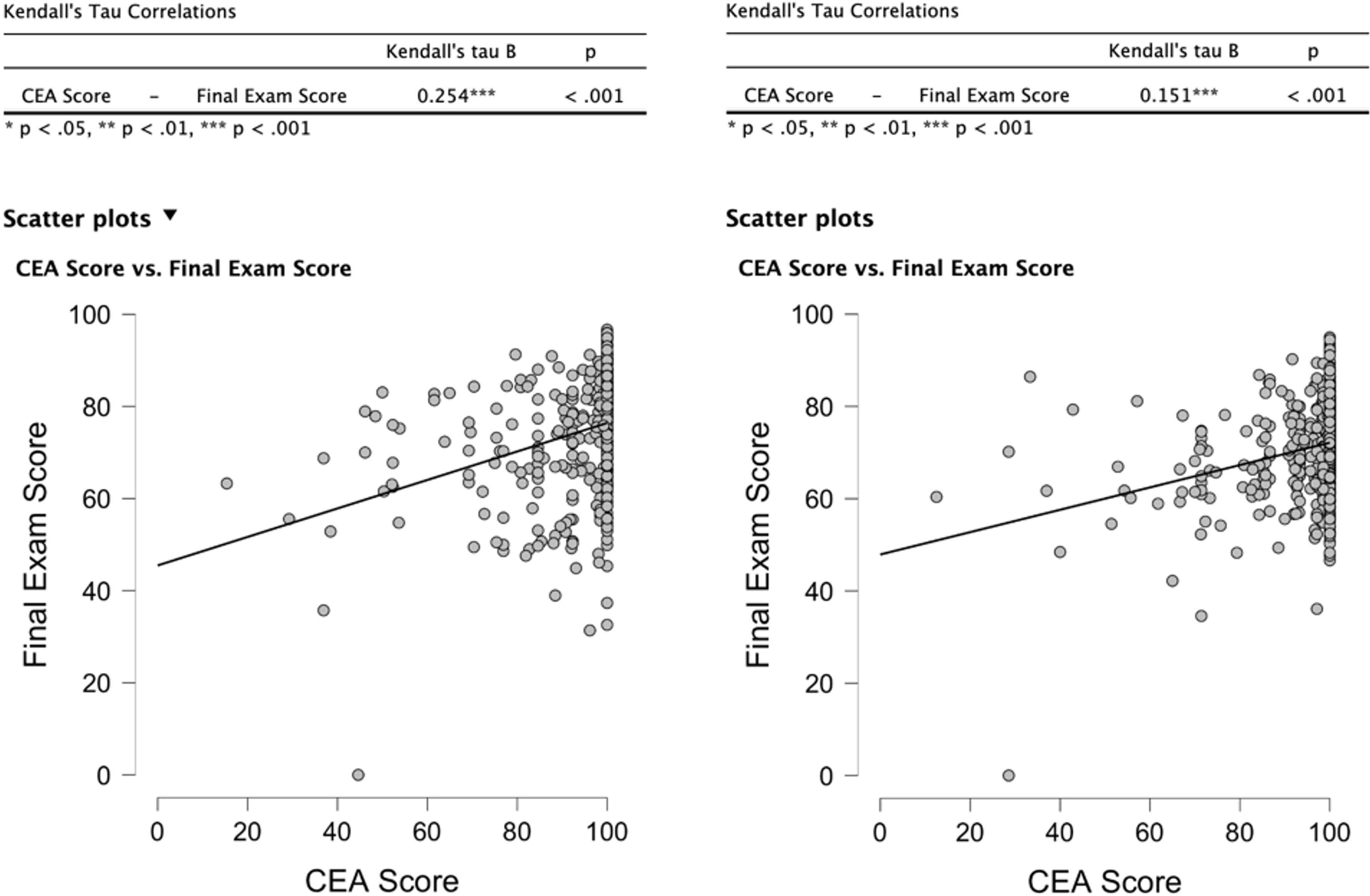

CEA scores for each student in each studied term of EVSC 100 and GEOG 104 were compared with final exam grades using a linear regression model in the statistical tool JASP. (Note: Final exam grades were used as the measure of “performance in the course” because the final course grade includes the CEAs.) Because CEA scores are not normally distributed and contain many tied values, the rank-based Kendall's tau-b correlation coefficient, which corrects for ties, was used (Field, 2024). In both EVSC 100 and GEOG 104, there was a significant (p < 0.001) positive correlation between CEA score and final exam score, with a moderate (EVSC 100) and weak (GEOG 104) effect size (Kendall's tau-b = 0.254 and 0.151, respectively) (see Fig. 2). These correlations do not establish that completing CEAs caused higher exam scores: students who were conscientious about completing CEAs might also have performed well on the final exam regardless. However, since CEAs were graded for completion and effort, not correctness, it is encouraging that a high rate of completion correlates with higher exam scores.

FIG. 2: CEA scores are significantly correlated with final exam scores in EVSC 100 (left) and GEOG 104 (right), but with moderate and weak effects, respectively
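To show what Kendall's tau-b measures and how it handles the many tied CEA scores, the sketch below implements the coefficient directly from its pairwise definition: concordant minus discordant pairs, divided by the geometric mean of the numbers of pairs not tied on each variable. It is an illustration, not the analysis performed in JASP, and it omits the significance test; for real analyses, `scipy.stats.kendalltau` (whose default variant is tau-b) also returns a p-value. The score lists in the usage example are hypothetical.

```python
import math


def kendall_tau_b(x, y):
    """Kendall's tau-b rank correlation with the standard tie correction.

    O(n^2) pairwise version written for clarity rather than speed.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    s = 0         # concordant pairs minus discordant pairs
    untied_x = 0  # pairs whose x values differ
    untied_y = 0  # pairs whose y values differ
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx = (x[i] > x[j]) - (x[i] < x[j])  # sign of the x difference
            dy = (y[i] > y[j]) - (y[i] < y[j])  # sign of the y difference
            s += dx * dy                        # +1 concordant, -1 discordant, 0 tied
            untied_x += dx != 0
            untied_y += dy != 0
    denom = math.sqrt(untied_x * untied_y)
    if denom == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return s / denom


# Hypothetical CEA totals and final exam percentages for eight students.
cea_scores = [12, 12, 11, 10, 12, 9, 8, 12]
exam_scores = [78, 85, 70, 65, 90, 60, 72, 81]
print(round(kendall_tau_b(cea_scores, exam_scores), 3))
```

Pairs tied on CEA score (common when most students complete every activity) contribute nothing to the numerator and are removed from the denominator, which is why tau-b suits data with many identical scores.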

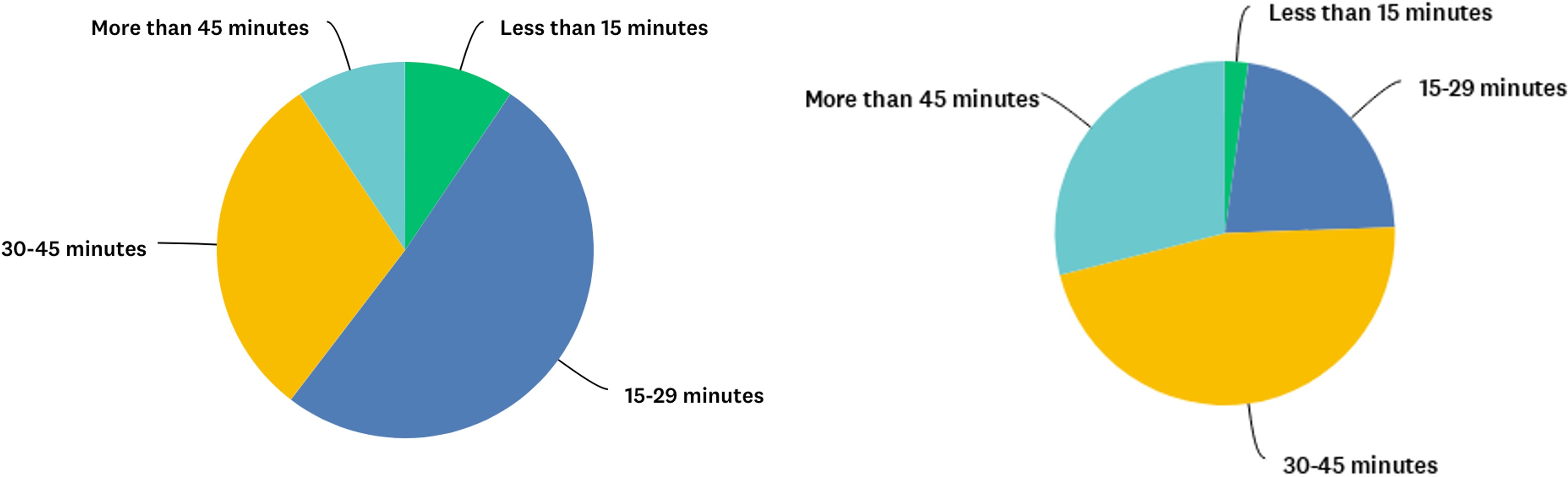

Regarding the amount of time spent on CEAs, most students in EVSC 100 reported spending less than 30 minutes on the CEA each week. In GEOG 104, most spent 30–45 minutes (see Fig. 3). Average completion rate of CEAs in EVSC 100 and GEOG 104 across all study terms was 93.9% and 95.9%, respectively. Each course had one CEA per week, except for the midterm week (for a total of 12 per course). Overall, the completion rates indicate a high level of engagement with the course material.

FIG. 3: Average amount of time per week spent on CEAs in EVSC 100 (left) and GEOG 104 (right)
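The completion rates above can be read as the share of all possible CEA submissions that were actually made. The sketch below assumes that definition (submissions divided by enrolled students times 12 CEAs per term); the paper does not state the exact formula, nor whether term rates were pooled or averaged, so both the definition and the function name are assumptions.

```python
def completion_rate(submitted_counts, n_ceas=12):
    """Average CEA completion rate, in percent, for one course offering.

    Assumed definition: total submissions divided by the number of
    possible submissions (enrolled students x CEAs offered in the term).
    submitted_counts: number of CEAs each enrolled student submitted.
    """
    if not submitted_counts:
        raise ValueError("no students")
    possible = n_ceas * len(submitted_counts)
    return 100 * sum(submitted_counts) / possible


# Hypothetical term with four students.
print(round(completion_rate([12, 12, 10, 12]), 1))  # 95.8
```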

In GEOG 104, CEAs were worth 10% of the course grade in fall 2020 and spring 2021. In summer 2021, their weight was reduced to 6%. Average completion rates decreased only slightly, from 96.8% when CEAs were worth 10% of the final course grade to 93.8% when they were worth 6% (a drop of 3 percentage points). This suggests that reducing the weight of the assignments did not have a large impact on engagement, although the terms compared involved different student cohorts.

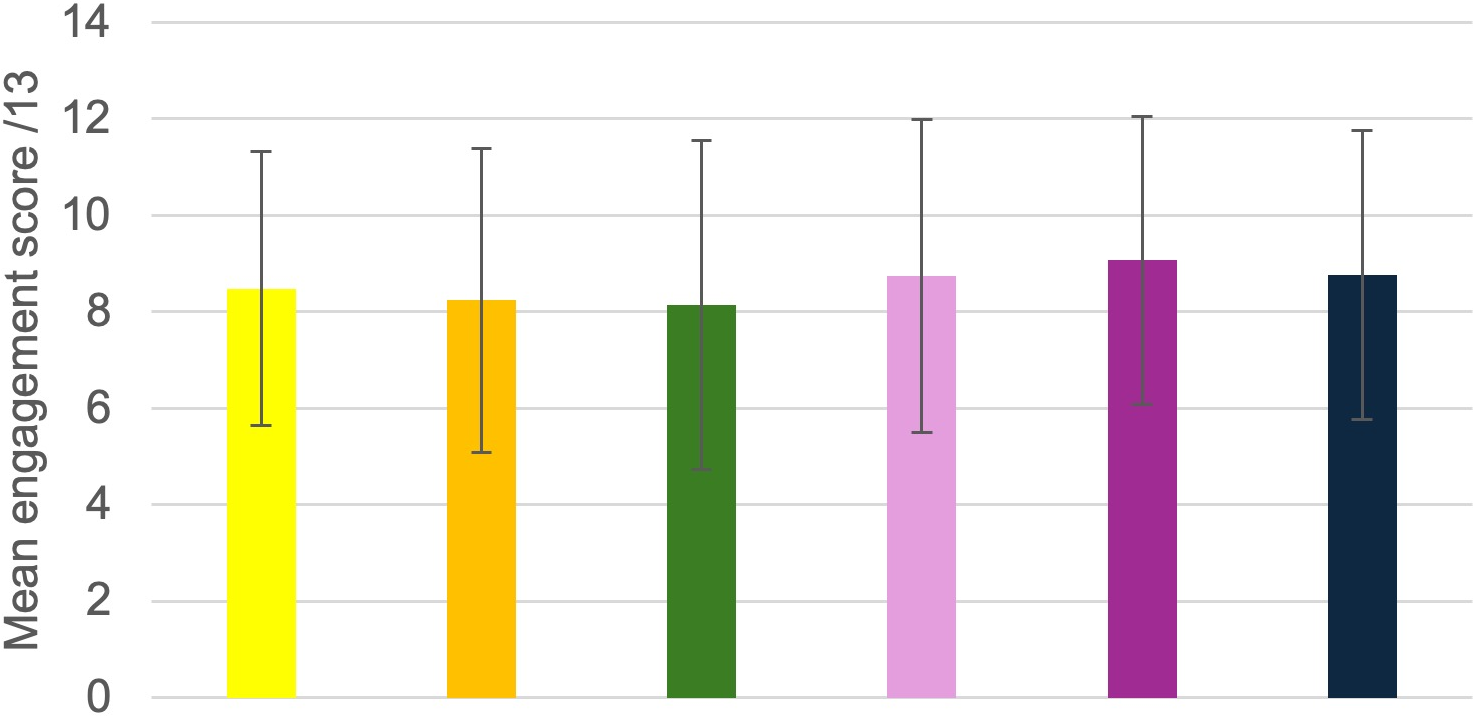

The postcourse survey responses to the engagement statements (see Table 4) were scored as follows: Strongly Agree = 5; Agree = 4; Neutral = 3; Disagree = 2; Strongly Disagree = 1. Reliability of the self-report was assessed with Cronbach's alpha in JASP. Taken together as a measure of engagement, the six items showed acceptable reliability (Cronbach's alpha = 0.75), consistent with the reliability reported by Fuller et al. (2018). For each participant and each activity, the self-report engagement score was calculated by combining the three pairs of items as follows (Fuller et al., 2018): Engagement score = (item 1 − item 2) + (item 3 − item 4) + (item 5 + item 6) / 2. The behavioral and emotional pairs each contribute at most 5 − 1 = 4, and the cognitive pair contributes at most (5 + 5) / 2 = 5, so the highest possible engagement score is 13. Descriptive statistics for each item and for the aggregate engagement score across all study terms are given in Table 4.
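The two calculations described above, the Fuller et al. (2018) engagement score and Cronbach's alpha, can be sketched in a few lines. This is an illustrative reimplementation, not the JASP analysis used in the study. It assumes responses are already coded 1 to 5 as listed above. Cronbach's alpha is conventionally computed after reverse-scoring negatively worded items (here items 2 and 4, as 6 − x on a five-point scale); the paper does not say whether that step was applied, so the `reverse_score` helper and all function names are assumptions.

```python
from statistics import variance


def engagement_score(items):
    """Self-report engagement score for one activity (Fuller et al., 2018).

    items: the six Likert responses for items 1-6, coded 1-5.
    """
    i1, i2, i3, i4, i5, i6 = items
    behavioral = i1 - i2       # full attention minus pretending
    emotional = i3 - i4        # enjoyment minus discouragement
    cognitive = (i5 + i6) / 2  # mean of the two cognitive items
    return behavioral + emotional + cognitive


def reverse_score(x, scale_max=5):
    """Reverse-code a negatively worded Likert item (assumed step)."""
    return scale_max + 1 - x


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix (list of rows)."""
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    k = len(rows[0])
    columns = list(zip(*rows))
    item_var_sum = sum(variance(col) for col in columns)  # sample variances
    total_var = variance([sum(r) for r in rows])
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - item_var_sum / total_var)


print(engagement_score([5, 1, 5, 1, 5, 5]))  # 13.0, the maximum possible
```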

TABLE 4: Mean and standard deviation of engagement scores across all study terms in both GEOG 104 and EVSC 100 (n = 143 students)

| Component of Engagement | Item | Mean | S.D. |
|---|---|---|---|
| Behavioral | 1. I devoted my full attention to this activity. | 4.23 | 0.18 |
| | 2. I pretended to participate in this activity (i.e., wrote a rushed answer without really thinking about it). | 1.78 | 0.10 |
| Emotional | 3. While doing this activity, I enjoyed learning new things. | 4.06 | 0.24 |
| | 4. While doing this activity, I felt discouraged. | 1.97 | 0.26 |
| Cognitive | 5. This activity really helped my learning. | 4.00 | 0.22 |
| | 6. In completing this activity, I tried a new approach or way of thinking about the content. | 3.72 | 0.29 |
| Engagement score (self-report aggregate) | | 8.52 | 3.10 |

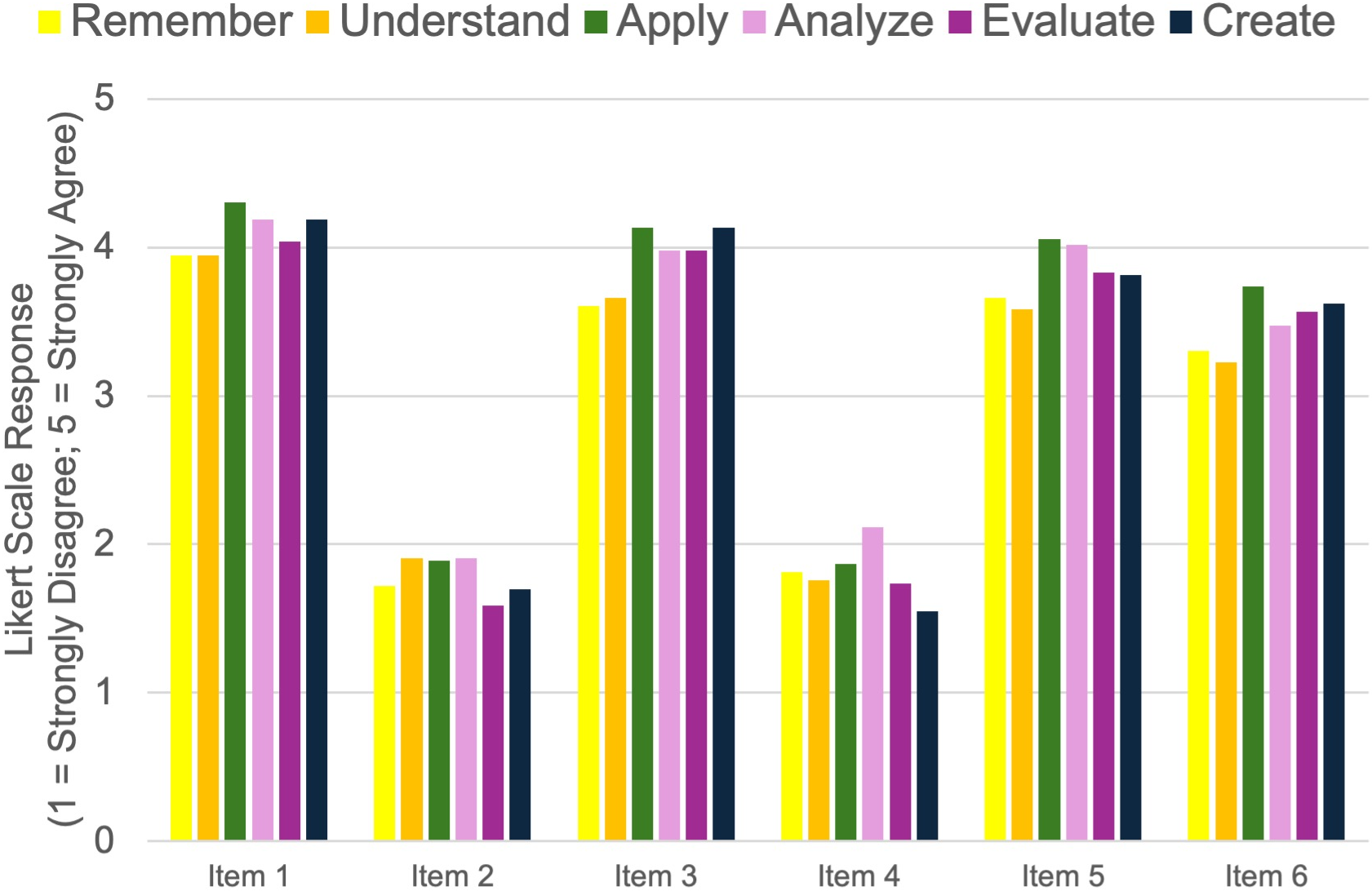

The results indicate strong engagement in all three components of engagement. Mean scores for the positively worded items (1, 3, 5, and 6) ranged from 3.72 to 4.23, while the negatively worded items (2 and 4), for which low scores indicate higher engagement, had means below 2. The lowest mean among the positively worded items (3.72 out of 5) is for the cognitive item “In completing this activity, I tried a new approach or way of thinking about the content.” This item is most closely aligned with higher-order learning levels in the revised Bloom's taxonomy (Krathwohl, 2002) (i.e., evaluation and creation). There was, however, no significant difference in engagement score across taxonomy levels (see Fig. 4), which mirrors findings by Fuller et al. (2018). Median Likert scale scores for each item by taxonomy level are given in Fig. 5.

FIG. 4: Mean engagement score showed no significant difference across revised Bloom's taxonomy levels (Krathwohl, 2002)

FIG. 5: Median Likert scale response score by item, by revised Bloom's taxonomy level (Krathwohl, 2002)

4. CONCLUSIONS AND RECOMMENDATIONS FOR IMPLEMENTATION OF CEAs

With the increased appetite for asynchronous online course delivery in universities comes the challenge of maintaining existing, and creating new, ways of promoting student motivation and engagement to facilitate learning. Scholarly literature contains myriad best practices for online course design and teaching. This research focused on leveraging evidence-based pedagogy to help instructors adapt existing active learning strategies to an asynchronous learning environment with less workload than developing an entirely new course assessment structure. In-class active learning assessments were reenvisioned as asynchronous weekly, low-stakes “class engagement activities” (CEAs) in two online introductory geography and environmental science courses. Results demonstrate that despite their low grading weight (i.e., 6–10% of the total course grade), the CEAs achieved high levels of student engagement, which was associated with higher final exam performance, motivation to learn, and a perceived deeper understanding of course content. Students maintained strong engagement with CEAs even when they were reduced to only 6% of the total course grade (i.e., 0.5% per activity).

We recommend developing CEAs as a relatively low-effort strategy for instructors to engage students in their course materials in the asynchronous online course setting. One caveat is that regular and timely feedback is an important component of these assessments. Such feedback takes time, which needs to be accounted for in instructor and teaching assistant workload. In a course of 200+ students, grading each CEA individually, even if spending only 5 minutes per student, can take upward of 16 hours per week. Where possible, we suggest setting up these assignments so that they can be autograded while still giving feedback to students (e.g., through a detailed rubric for each student or general feedback given to the whole class). Both courses used these strategies to reduce grading time.
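The workload figure above follows from simple arithmetic: 200 students at 5 minutes each is 1,000 minutes, or about 16.7 hours, per weekly CEA. The sketch below makes that estimate reusable for other class sizes; the function name is hypothetical, and the 2-minute figure in the second example is an illustrative assumption about rubric-based grading, not a measured value.

```python
def weekly_grading_hours(n_students, minutes_per_submission):
    """Hours needed to give individual feedback on one weekly CEA."""
    return n_students * minutes_per_submission / 60


# 200 students at 5 minutes each, as in the estimate above.
print(round(weekly_grading_hours(200, 5), 1))  # 16.7
# Hypothetical: 300 students at an assumed 2 minutes each with a rubric.
print(weekly_grading_hours(300, 2))  # 10.0
```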

It should also be noted that both EVSC 100 and GEOG 104 were designed for the online learning environment with many other best practices. Specifically, the instructor provided a consistent and supportive teaching presence through weekly LMS announcements, regular feedback, and clear instructions. There were regular opportunities for instructor–student, student–student, and student–content interaction throughout the course. The Canvas LMS course site was well organized and easy to navigate. Weekly learning outcomes were clearly defined and explicitly linked to content. Lectures were recorded in short segments, and multiple resources were offered to students in varying formats. Students had choice in which assignments to complete and flexible due dates.

CEAs are just one component of that overall course structure, and instructors wishing to incorporate these assessments into an asynchronous online course should keep in mind the context of an overall well-designed course to facilitate student learning.

ACKNOWLEDGMENTS

This research was supported by Transforming Inquiry into Learning and Teaching (TILT) at Simon Fraser University. The authors are grateful to the reviewer and editor for helpful feedback on this manuscript.

REFERENCES

Ahshan, R. (2021). A framework of implementing strategies for active student engagement in remote/online teaching and learning during the COVID-19 pandemic. Education Sciences, 11(9), 483. https://doi.org/10.3390/educsci11090483

Barua, L., & Lockee, B. (2025). Flexible assessment in higher education: A comprehensive review of strategies and implications. TechTrends, 69(2), 301–309. https://doi.org/10.1007/s11528-025-01039-3

Bowman, N. A. (2010). Can 1st-year college students accurately report their learning and development? American Educational Research Journal, 47(2), 466–496. https://doi.org/10.3102/0002831209353595

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Bridges, M. W., DiPietro, M., Lovett, M., Norman, M. K., & Ambrose, S. A. (2023). How learning works: Eight research-based principles for smart teaching (2nd ed.). Jossey-Bass.

Burns, S. (2023). Educational technology research in higher education: New considerations and evolving goals [Research Report]. EDUCAUSE. https://www.educause.edu/ecar/research-publications/2023/educational-technology-research-in-higher-education-a-moving-target/introduction-and-key-findings

Casselman, M. D. (2021). Transitioning from high-stakes to low-stakes assessment for online courses. In Advances in online chemistry education (Vol. 1389, pp. 21–34). American Chemical Society. https://doi.org/10.1021/bk-2021-1389.ch002

Cavinato, A. G., Hunter, R. A., Ott, L. S., & Robinson, J. K. (2021). Promoting student interaction, engagement, and success in an online environment. Analytical and Bioanalytical Chemistry, 413(6), 1513–1520. https://doi.org/10.1007/s00216-021-03178-x

Creswell, J. W., & Plano Clark, V. L. (2011). Designing and conducting mixed methods research (2nd ed.). Sage.

Czerkawski, B. C., & Lyman, E. W. (2016). An instructional design framework for fostering student engagement in online learning environments. TechTrends, 60(6), 532–539. https://doi.org/10.1007/s11528-016-0110-z

Daniels, L. M., Goegan, L. D., & Parker, P. C. (2021). The impact of COVID-19 triggered changes to instruction and assessment on university students' self-reported motivation, engagement and perceptions. Social Psychology of Education, 24(1), 299–318. https://doi.org/10.1007/s11218-021-09612-3

Dennis, K., Bunkowski, L., & Eskey, M. (2007). The Little Engine That Could—How to start the motor? Motivating the online student. InSight: A Collection of Faculty Scholarship, 2, 37–49.

Field, A. (2024). Discovering statistics using IBM SPSS statistics (6th ed.). Sage.

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109. https://doi.org/10.3102/00346543074001059

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111(23), 8410–8415. https://doi.org/10.1073/pnas.1319030111

Fuller, K. A., Karunaratne, N. S., Naidu, S., Exintaris, B., Short, J. L., Wolcott, M. D., Singleton, S., & White, P. J. (2018). Development of a self-report instrument for measuring in-class student engagement reveals that pretending to engage is a significant unrecognized problem. PLOS One, 13(10), e0205828. https://doi.org/10.1371/journal.pone.0205828

Holmes, N. (2015). Student perceptions of their learning and engagement in response to the use of a continuous e-assessment in an undergraduate module. Assessment & Evaluation in Higher Education, 40(1), 1–14. https://doi.org/10.1080/02602938.2014.881978

Ismailov, M., & Ono, Y. (2021). Assignment design and its effects on Japanese college freshmen's motivation in L2 emergency online courses: A qualitative study. The Asia-Pacific Education Researcher, 30(3), 263–278. https://doi.org/10.1007/s40299-021-00569-7

Kelsey, K. D., & D'Souza, A. (2004). Student motivation for learning at a distance: Does interaction matter? Online Journal of Distance Learning Administration.

Kessels, G., Xu, K., Dirkx, K., & Martens, R. (2024). Flexible assessments as a tool to improve student motivation: An explorative study on student motivation for flexible assessments. Frontiers in Education, 9, 1290977. https://doi.org/10.3389/feduc.2024.1290977

Krathwohl, D. R. (2002). A revision of Bloom's taxonomy: An overview. Theory Into Practice, 41(4), 212–218. https://doi.org/10.1207/s15430421tip4104_2

Martin, F., & Bolliger, D. U. (2018). Engagement matters: Student perceptions on the importance of engagement strategies in the online learning environment. Online Learning, 22(1). https://doi.org/10.24059/olj.v22i1.1092

Muir, T., Milthorpe, N., Stone, C., Dyment, J., Freeman, E., & Hopwood, B. (2019). Chronicling engagement: Students' experience of online learning over time. Distance Education, 40(2), 262–277. https://doi.org/10.1080/01587919.2019.1600367

Pintrich, P. R. (2003). A motivational science perspective on the role of student motivation in learning and teaching contexts. Journal of Educational Psychology, 95(4), 667–686. https://doi.org/10.1037/0022-0663.95.4.667

Prince, M., Felder, R., & Brent, R. (2020). Active student engagement in online STEM classes: Approaches and recommendations. Advances in Engineering Education, 8(4), 1–25.

Randi, J., & Corno, L. (2022). Addressing student motivation and learning experiences when taking teaching online. Theory Into Practice, 61(1), 129–139. https://doi.org/10.1080/00405841.2021.1932158

Sambell, K., & Hubbard, A. (2019). The role of formative ‘low-stakes’ assessment in supporting non-traditional students' retention and progression in higher education: Student perspectives. Widening Participation and Lifelong Learning, 6(2), 25–36.

Schrank, Z. (2016). An assessment of student perceptions and responses to frequent low-stakes testing in introductory sociology classes. Teaching Sociology, 44(2), 118–127. https://doi.org/10.1177/0092055X15624745

Theobald, E. J., Hill, M. J., Tran, E., Agrawal, S., Arroyo, E. N., Behling, S., Chambwe, N., Cintrón, D. L., Cooper, J. D., Dunster, G., Grummer, J. A., Hennessey, K., Hsiao, J., Iranon, N., Jones, L., II, Jordt, H., Keller, M., Lacey, M. E., Littlefield, C. E., Lowe, A., Newman, S., Okolo, V., Olroyd, S., Peecook, B. R., Pickett, S. B., Slager, D. L., Caviedes-Solis, I. W., Stanchak, K. E., Sundaravardan, V., Valdebenito, C., Williams, C. R., Zinsli, K., & Freeman, S. (2020). Active learning narrows achievement gaps for underrepresented students in undergraduate science, technology, engineering, and math. Proceedings of the National Academy of Sciences, 117(12), 6476–6483. https://doi.org/10.1073/pnas.1916903117

Vaessen, B. E., van den Beemt, A., van de Watering, G., van Meeuwen, L. W., Lemmens, L., & den Brok, P. (2017). Students' perception of frequent assessments and its relation to motivation and grades in a statistics course: A pilot study. Assessment & Evaluation in Higher Education, 42(6), 872–886. https://doi.org/10.1080/02602938.2016.1204532

Walker, K. A., & Koralesky, K. E. (2021). Student and instructor perceptions of engagement after the rapid online transition of teaching due to COVID-19. Natural Sciences Education, 50(1), e20038. https://doi.org/10.1002/nse2.20038

Wegmann, S. J., & Thompson, K. (2013). Scoping out interactions in blended environments. In Blended learning (1st ed., Vol. 2). Routledge.

Wester, E. R., Walsh, L. L., Arango-Caro, S., & Callis-Duehl, K. L. (2021). Student engagement declines in STEM undergraduates during COVID-19–driven remote learning. Journal of Microbiology & Biology Education, 22(1). https://doi.org/10.1128/jmbe.v22i1.2385

Zimmerman, B. J. (1989). A social cognitive view of self-regulated academic learning. Journal of Educational Psychology, 81(3), 329–339. https://doi.org/10.1037/0022-0663.81.3.329
