GEN-AI: A TRANSFORMATIVE PARTNER IN COLLABORATIVE COURSE DEVELOPMENT

The University of Adelaide, Adelaide, Australia

*Address all correspondence to: Richard McInnes, The University of Adelaide, Adelaide, Australia, E-mail: richard.mcinnes@adelaide.edu.au

As artificial intelligence (AI)-powered tools become increasingly integrated into higher education, universities must reimagine their institutional operations to achieve greater efficiency, enhance practice, and ensure responsible usage. This paper investigates the potential of generative AI (gen-AI) as a “partner” in collaborative course development, focusing on the changing dynamics and implications of integrating gen-AI into the development process. Drawing on a case-study approach that combines education and AI literature with the authors' experiences as learning designers, we examine the shifting dynamics of third space professional (TSP) and academic relationships when introducing gen-AI as a partner. We unpack current collaborative course development practices and illustrate how gen-AI can enhance existing processes, create efficiencies, and offer new possibilities. We also highlight the importance of academic capability-building opportunities and address the risks associated with an AI-partnered future. This case study contributes to our understanding of gen-AI's potential in transforming collaborative course development and provides practical insights that may positively shape emerging partnerships between academics and TSPs.

KEY WORDS: generative artificial intelligence, educational development, course design, online

1. BACKGROUND

The next generation of artificial intelligence (AI)-enhanced higher education is characterized by transformation. The expanding role of massified, publicly available generative AI (gen-AI)-powered tools (such as ChatGPT, Google Bard, and DALL-E) will precipitate the reimagining of existing practices and processes. As higher education undergoes this revolution, institutions will fundamentally change the way they operate as they seek to integrate AI-powered tools to create greater efficiency and enhance practice in areas such as prediction, assessment, personalization, and intelligent tutoring systems (Zawacki-Richter et al., 2019), and to ensure responsible usage for staff and students (Halaweh, 2023). As uptake of gen-AI tools among staff and students increases (Siemens et al., 2023) and case studies of gen-AI use are shared (for example, Baidoo-Anu & Owusu Ansah, 2023), we begin to glimpse the potential of AI to change teaching and learning practice and to comprehend how these technologies can best be enacted to support academic and student success (Zhai et al., 2021).

In higher education, the initial wave of discourse on free and open gen-AI tools focused on how students would use AI text generators such as ChatGPT and on the perceived risks and benefits of that use (Sok & Heng, 2023). This focus was driven by the implications of students using these tools for assessments and the academic integrity concerns this presented (Cotton et al., 2023; Khalil & Er, 2023; Rudolph et al., 2023). Yet, beyond these first forays of gen-AI into the published learning and teaching literature, few other topics have been researched in depth. As free and open gen-AI tools continue to proliferate, some authors have proposed that institutions proactively implement AI in their learning and teaching practice (Crawford et al., 2023), based on the premise that human-AI partnerships can produce work that is “superior in terms of creativity, originality, and efficiency than if either one was to work alone” (Halaweh, 2023). If we are to follow this push to adopt AI, then we must be critical about the ways in which these human-AI partnerships are enacted. One space where human-AI partnerships are proposed to improve practice is the design and development of academic courses (Rudolph et al., 2023; Sok & Heng, 2023). But in what ways will human-AI partnerships change how we currently undertake this process?

This paper explores how incorporating gen-AI technologies has begun to change the relationship between third space professionals (TSPs)—individuals occupying the liminal space between traditional professional and academic roles (Whitchurch, 2012)—and academics involved in the codesign of courses and programs. We begin by unpacking our own practice before illustrating how gen-AI can create efficiencies in existing processes and afford new possibilities while also exploring the academic capability-building opportunities of integrating AI into the collaborative course development process. We will also highlight the risks associated with an AI-partnered future, providing practical ways in which we can positively shape the emerging partnerships between academics and TSPs within the collaborative course development process.

2. LITERATURE

2.1 Collaborative Course Development

In the current landscape of higher education, the push for higher quality and greater productivity in course development is intensifying (Kehrwald & Parker, 2019), alongside the challenges of catering to a more diverse student population and adapting to technological change (Bennett et al., 2017). These pressures are driving many higher education institutions to adopt a collaborative approach to contemporary course design in the hope that it will streamline development and ensure a level of consistency across their offerings (Martin & Bolliger, 2022).

As part of the collaborative approach, academic subject matter experts (SMEs) and TSPs (Whitchurch, 2012) work together to create enhanced learning experiences for students. Participants in an SME role primarily contribute their disciplinary expertise, while TSPs working in a variety of roles, such as learning designers, media producers, and library specialists, provide support and general guidance to direct the design and development of the final course (Chen & Carliner, 2020; Martin & Bolliger, 2022). While this delineation implies discrete roles, in day-to-day work the roles become more entangled and complex. The collaborative approach takes into consideration the wide range of skills required to create engaging and high-quality courses and enables institutions to form codesign teams based on the need for specific specializations and expertise (McDonald et al., 2021; Richardson et al., 2019). When functioning as effective codesign partnerships, and with adequate resourcing, these collaborative teams are able to design and develop courses of a higher quality than those developed independently (McInnes et al., 2020; Richardson et al., 2019) and to enact professional learning (Campbell et al., 2022). This professional learning is critical, as academic staff are increasingly expected to implement educational technologies and diversify their mode of delivery (Richardson et al., 2019), despite generally not having specific educational qualifications or training (Olesova & Campbell, 2019). Participating in collaborative course development embeds professional learning in a scaffolded learning experience in which TSPs support the development of academic capability. This occurs both during course development, as SMEs gain opportunities for contextual, experiential learning, and after it, when communities of practice often form based on shared experiences (McInnes et al., 2020).
Collaborations between TSPs and SMEs also provide socialization for the integration and use of technologies (White et al., 2020), with the at-elbow support of the collaborative approach modeling and contextualizing technology use in a way that SMEs can then tangibly take forward in their learning and teaching practice. The collaborative nature of the relationship between TSPs and SMEs is key to the shared acceptance of new technologies (Granić & Marangunić, 2019; Tay et al., 2023) and is critical if we are to expect SMEs to adopt technologies to support course design within the collaborative approach (Bennett et al., 2015).

Yet, despite the success of the collaborative process, it is not without its challenges. Most commonly, these involve the delineation of roles and responsibilities (Halupa, 2019), the timelines and workload associated with course development (McInnes et al., 2020), and conflict arising from communication (Mueller et al., 2022). This is especially true where there is a perception of academic unbundling for individuals as their academic role shifts towards a focus on disciplinary knowledge and TSPs take ownership of other areas of course design traditionally bundled into the academic role (White et al., 2020).

2.2 Generative Artificial Intelligence

Artificial intelligence (AI) has been around since the 1950s (Crawford et al., 2023), with AI technologies applied in education to automate administrative tasks, produce “smart content,” develop intelligent tutoring systems, provide adaptive learning, and develop immersive learning and gamification (Tahiru, 2021; Zhai et al., 2021). Yet, despite the opportunities for AI in education, Tahiru's (2021) systematic review notes that, notwithstanding advances in AI capabilities, there had been “no significant advancement in the use of AI in education [up to the date of publication in 2021].” This shifted fundamentally in late 2022, when gen-AI tools that combine machine learning and big data underwent public massification (Crawford et al., 2023): ChatGPT and DALL-E 2 were released to a public market in 2022 and had generated millions of active users by early 2023 (Wu et al., 2023). This massification has allowed these gen-AI tools to be more easily incorporated across the education system by learners, teachers, and TSPs (see, for example, Amri & Hisan, 2023; Baidoo-Anu & Owusu Ansah, 2023; Khademi, 2023).

In this paper we narrow our focus to these post-2022 gen-AI tools, which can be defined as generative pretrained transformers (GPT) that combine machine learning algorithms and big data to produce “original” artifacts (e.g., text, images, video) based on user prompts (Crawford et al., 2023). This definition incorporates commonly used tools such as Google Bard, DALL-E, and ChatGPT.

Because gen-AI tools are technologies within the digital information marketplace, ethical and privacy risks are inherent in their design and implementation. These include concerns surrounding the validity or reliability of AI-generated outputs (Grove, 2023b) and the process and intention behind their integration (Grove, 2023a). Despite this, there is acknowledgment that gen-AI technologies will be integrated across the higher education sector and that capability development to respond to the changes gen-AI brings is essential (Sabzalieva & Valentini, 2023). This is especially true given the uncertain future of AI development and the risks posed by its misuse (Australian Human Rights Commission, 2023). It is therefore prudent for academic and professional staff to be conscious of how gen-AI technologies will reshape their work, so that they can leverage AI's abilities appropriately and provide accurate and relevant advice on its impacts across higher education as the technology evolves (Patten, 2023). The ethical, copyright, and privacy risks presented by AI must be addressed through domestic legislation and international cooperation (AI Safety Summit, 2023), but they should not be treated as an insurmountable barrier to the integration of gen-AI tools. An institution that does not continuously evaluate the role gen-AI can play in its teaching and learning, on the assumption that these barriers are insurmountable, will find itself left behind as gen-AI development continues.

When we began integrating gen-AI as a partner in the collaborative development process, there was a gap in the published literature on the subject. To contribute to this emerging literature, we have identified our key lessons about integrating gen-AI into our work practices as we explore the following questions: How might the dynamics shift as TSP and SME collaborations begin to incorporate gen-AI? What are the affordances and challenges of introducing gen-AI-powered tools into course development? And how can we continue leveraging the capability-building aspects of the existing process as gen-AI is applied?

3. METHOD

Cognizant of the complexities of integrating new technology into existing course development practices, we sought to explore the possibilities of gen-AI as a partner in collaborative course development. Our aim, therefore, was to investigate the shifting dynamics of existing TSP and academic relationships as we introduced gen-AI as another “partner” in the process. This article draws on a combination of education and gen-AI literature to explicate the role of gen-AI as an agent for transformation in collaborative course development. We utilize a case-study approach (Yin, 2017) to juxtapose the literature with the lived experience of the authors. The case is bounded by the role of the three authors as learning designers who collaboratively design online courses with SMEs and use gen-AI to support course development at an Australian university. In their role as learning designers, the authors have worked across multiple higher education institutions on the end-to-end development of courses, degree programs, and microcredentials using a collaborative approach, situated in an Australian higher education context.

This case study harnesses the authors' knowledge and experience as reflective practitioners to contribute to the collective discourse around gen-AI applications in higher education (Flyvbjerg, 2006). We deemed a case-study approach most suitable for systematically analyzing our processes, context, and reflections in light of the scholarly literature, and for making our practice visible and open to critical examination (Boyer, 1990). Data were collected through weekly community of practice (Wenger, 1998) meetings between the three authors over a period of six months. In these meetings the authors engaged in reflective practice discussions centered on a constructivist research approach (Thompson, 2017), using broad prompting questions to seed organic discussions about each other's experiences applying gen-AI in their practice. The lessons learned presented in this paper reflect themes that were discussed and formed through the authors' community of practice. As this is practitioner research in which data were collected through the authors' analysis of their own use of gen-AI, ethics approval was not deemed necessary for this study. The authors' reflections concerned their own course design only and were not connected to their interactions with others. There was no duress or perceived threat to the autonomy, safety, or confidentiality of the authors, who freely and willingly participated in this research project.

4. LESSONS LEARNED FROM TRANSFORMING COLLABORATIVE COURSE DEVELOPMENT WITH GEN-AI AS A PARTNER

With the growing uptake of gen-AI technologies among academic staff, the increasing use of gen-AI in course design and development now seems an inevitability rather than a value proposition. In this paper we do not seek to critique this inevitability; rather, we look to reimagine practices should higher education continue toward the gen-AI acceptance and integration currently posited (Siemens et al., 2023). Within this framing, TSPs must be leaders (Crawford et al., 2023) in establishing and enacting a new model for collaborative course development that includes gen-AI partnerships. But what does this model look like, and what shifting dynamics within existing TSP and SME relationships are introduced when AI is included as another “partner” in the process?

4.1 Our Experiences Integrating a Gen-AI Partnership

Since early 2023, as learning designers working at a large research-intensive Australian university, we have grappled with how to integrate gen-AI into our practice. In our roles as TSPs, we collaborate with SMEs to plan, map, and design courses and programs, and we undertake end-to-end development of courses within the learning management system (LMS). Through initial experimentation with and testing of text, image, video, audio, and code gen-AI software, we identified two key areas where we could partner with gen-AI to support our role in the collaborative course development process. The first was to seek efficiencies: using gen-AI to streamline administrative tasks, allowing us to focus on the more strategic and creative aspects of course design and creation. The second was to generate enhancements: improving the quality of our courses by using gen-AI to create learning tools such as graphics and interactive content.

For efficiencies, we identified tasks where gen-AI could help streamline our processes. These included using gen-AI to copyedit written content and change the tone of written course material, adapt the reading level of content to suit the intended audience, help us write course emails and announcements, refine video scripts for teleprompter use, generate first drafts of course content such as rubrics, lesson sequences, and formative assessments, and troubleshoot technical problems, for example through code auto-suggestion and completion.

For enhancements, we utilized gen-AI in our course design practice to implement features, technologies, and strategies that enhance the learning experience for both students and educators beyond our individual capabilities. This included use cases such as generating ideas to present content more effectively through interactives, producing analogies to turn abstract concepts into concrete ones, creating reflective questions based on course readings or video scripts, generating student learning tasks and feedback based on existing course content, suggesting on-screen graphics to support video content, and generating scenarios or case studies based on existing content.

As we increased our integration of gen-AI tools into our practice to gain efficiencies and enhancements, we began to document the use cases we had incorporated. To do this methodically, each week we individually self-evaluated how we had incorporated gen-AI into our workflow, then met to discuss and document these use cases. This enabled us to experiment with gen-AI cyclically and then share, document, and refine our use cases systematically. During this work we found that we could undertake deliberate experiments to create reusable prompts (McInnes & Kulkarni, 2023) that could be adapted across multiple courses by swapping out specific details while retaining the core structure. Through this process we were able to condense complex sets of prompts into concise commands that consistently achieved the desired results. This streamlined our interactions and enabled us to share and collaborate on these prompts to continuously improve them. It also enabled us to test prompts across different gen-AI technologies (e.g., ChatGPT compared with Google Bard) and evaluate the results to determine the most suitable software to use.
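The reusable-prompt practice described above, keeping a core structure while swapping out course-specific details, can be sketched with a simple string template. This is a hypothetical illustration rather than one of the prompts used in this case study: the prompt wording, the placeholder names (`course`, `audience`, `reading_level`, `content`), and the `build_prompt` helper are assumptions made for the example, and sending the result to ChatGPT, Google Bard, or any other tool is left out.

```python
from string import Template

# Hypothetical reusable prompt: the core structure stays fixed while
# course-specific details are swapped in through named placeholders.
REWRITE_PROMPT = Template(
    "You are assisting a learning designer on the course '$course'. "
    "Rewrite the following content for $audience at a $reading_level "
    "reading level, keeping all key concepts:\n\n$content"
)


def build_prompt(template: Template, **details: str) -> str:
    """Fill a reusable prompt template.

    Template.substitute raises KeyError if any placeholder is left unfilled,
    so an incomplete prompt is caught before it is sent anywhere.
    """
    return template.substitute(**details)


if __name__ == "__main__":
    # Illustrative course details only; swap these out for another course.
    prompt = build_prompt(
        REWRITE_PROMPT,
        course="Introduction to Environmental Law",
        audience="first-year undergraduates",
        reading_level="Year 10",
        content="Environmental impact assessment evaluates the likely effects of a proposed project.",
    )
    print(prompt)
```

Using `Template.substitute` rather than `safe_substitute` means a missing course detail raises `KeyError` instead of silently leaving a `$placeholder` in the prompt, which suits prompts that are shared and reused across a team.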

As we scaled the integration of gen-AI into our collaborative course design workflow, we critically reflected on our process for course design and development: the analysis, design, development, implementation, and evaluation (ADDIE) model. Although it originated more as an umbrella term covering many similar models, ADDIE has gained wide recognition as a model comprising the primary processes of course development (Molenda, 2003). It is important to note that the ADDIE model depicts a codesign process that is iterative rather than purely sequential. This iteration is evident in the continuous cycles of the process, both at the macro level of the course itself and at the micro level, which can be applied to every area of a course, from the module or topic level down to individual activities and artifacts. When we analyzed the efficiency and enhancement use cases against the ADDIE model, we found no distinct phases where gen-AI did or did not fit into our workflow; rather, our gen-AI use cases permeated the cycles of codesign that occur throughout collaborative course design and development. As such, while we were able to theme our use cases along a linear version of the course development process for internal team purposes, the cyclical nature of ADDIE meant we were unable to produce documentation, shareable with SMEs, that predicted when gen-AI would add the most value.

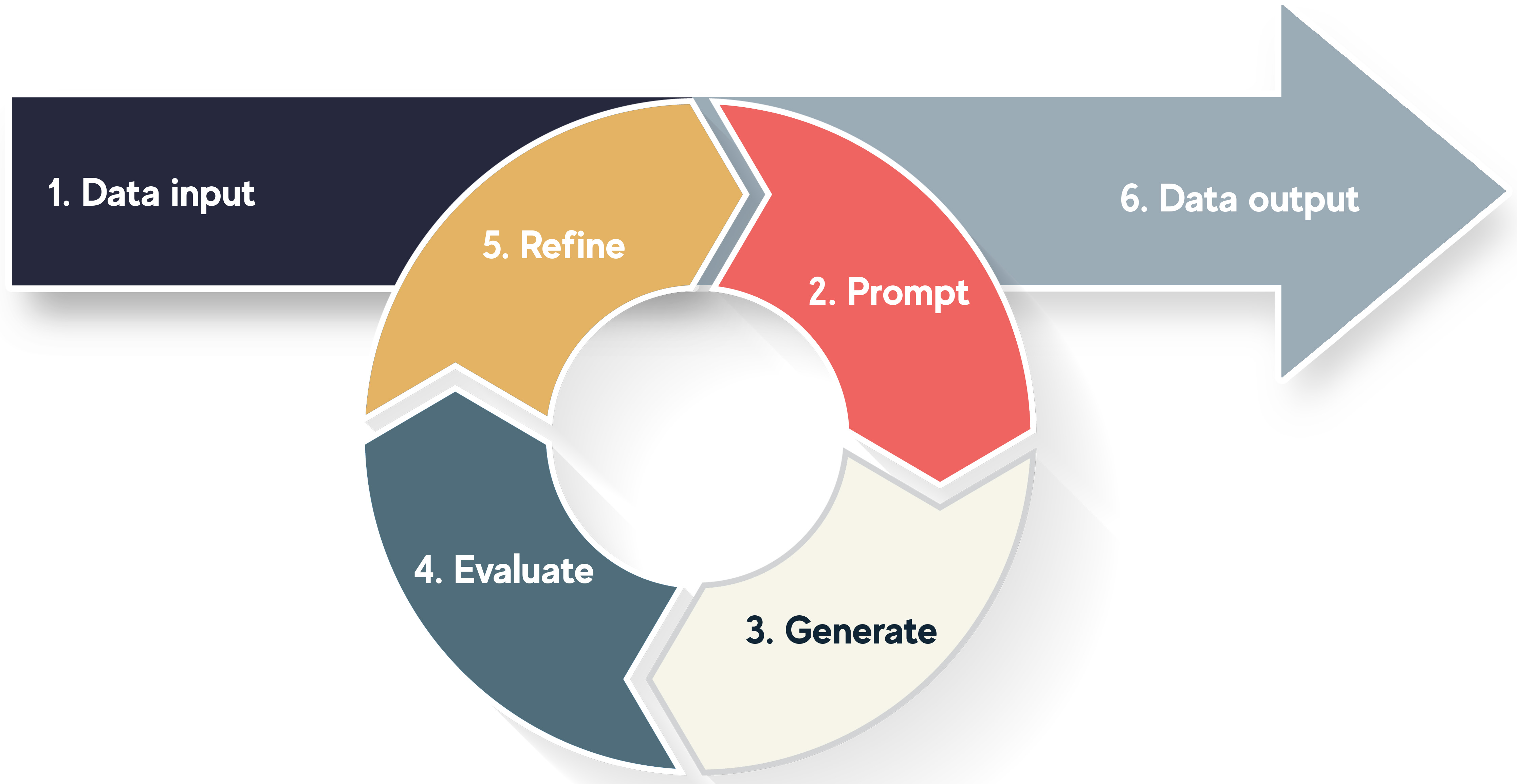

4.2 The Human-in-the-Loop Perspective on a Gen-AI Partnership

Firstly, we need to consider how gen-AI is included as a partner in the collaborative process. To do this, we interrogated the partnership through a human-machine team lens, acknowledging that such a team leverages the strengths of both actors: the speed, knowledge base, and recall of gen-AI and the contextual understanding, nuance, and decision-making of a human. This builds on the common use of the human-in-the-loop perspective in machine learning (Mosqueira-Rey et al., 2023), where the interactions of human and machine actors are identified and categorized. To apply the human-in-the-loop model to a gen-AI partnership, we must start by unpacking, broadly, how gen-AI operates (Baidoo-Anu & Owusu Ansah, 2023); see Fig. 1. The process, regardless of the gen-AI model, is fundamentally the same: (1) data input, either from human user input or from the knowledge banks of the gen-AI; (2) a prompt, usually human input that poses the initial question, which the gen-AI model uses to formulate responses; (3) generation, in which the gen-AI uses a combination of deep learning algorithms and natural language processing techniques to generate a response based on the input data and the prompt; (4) evaluation, in which humans evaluate the quality of the generated content to ensure that it is coherent, factually accurate, unbiased, and relevant to the prompt; (5) refinement, in which the generated content is refined based on human feedback, possibly through repeated cycles of prompting, generation, evaluation, and refinement (steps 2–5); and (6) data output, in which a human–gen-AI partnered response is output.

FIG. 1: A cyclical process model of generative AI
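The six steps of this cyclical model can be sketched as a simple loop. This is a minimal, hypothetical illustration: `run_cycle`, `generate`, and `review` are names introduced here, the model call is replaced by a stand-in function, and the human evaluation step is represented as a callback that either accepts a draft or returns feedback. It does not describe how any particular gen-AI product works internally.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class CycleResult:
    output: str
    rounds: int
    history: List[str] = field(default_factory=list)


def run_cycle(
    data: str,
    prompt: str,
    generate: Callable[[str, str], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> CycleResult:
    """Run steps (1)-(6): input, prompt, generation, evaluation, refinement, output.

    `generate` is a stand-in for a gen-AI model call (hypothetical); `review`
    stands in for the human evaluator and returns feedback text, or None to
    accept the draft.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    history: List[str] = []
    current_prompt = prompt  # (2) initial human prompt
    for round_no in range(1, max_rounds + 1):
        draft = generate(data, current_prompt)  # (3) generation from (1) data + prompt
        history.append(draft)
        feedback = review(draft)  # (4) human evaluation
        if feedback is None:
            return CycleResult(draft, round_no, history)  # (6) accepted output
        # (5) refinement: fold the human feedback into the next prompt
        current_prompt = f"{prompt}\nRevise to address: {feedback}"
    # Round limit reached without acceptance: hand the last draft back to a human.
    return CycleResult(history[-1], max_rounds, history)


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without any real model or reviewer.
    def toy_generate(data: str, prompt: str) -> str:
        return f"Summary of {data}" + (" (shorter)" if "Revise" in prompt else "")

    def toy_review(draft: str) -> Optional[str]:
        return None if "shorter" in draft else "make it shorter"

    result = run_cycle("week 3 reading", "Summarize this reading.", toy_generate, toy_review)
    print(result.rounds, result.output)  # 2 Summary of week 3 reading (shorter)
```

Capping the number of rounds reflects the point that refinement may repeat several times but ultimately returns to a human decision; the cap of five is an arbitrary choice for the example.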

From here we must ask how the human–machine team functions, where the machine adds value to our existing processes, and where human involvement occurs in such a model; that is, where is the human in this loop? To understand this, we must look at the human “value-add” in the process. From our analysis and the related literature, we believe that humans play a crucial role in two kinds of domain: those where humans offer creativity and originality beyond that of our gen-AI partners, and those where we are unwilling for our gen-AI partners to contribute for ethical, integrity, social, or privacy reasons (Halaweh, 2023). So where do we add a human-in-the-loop?

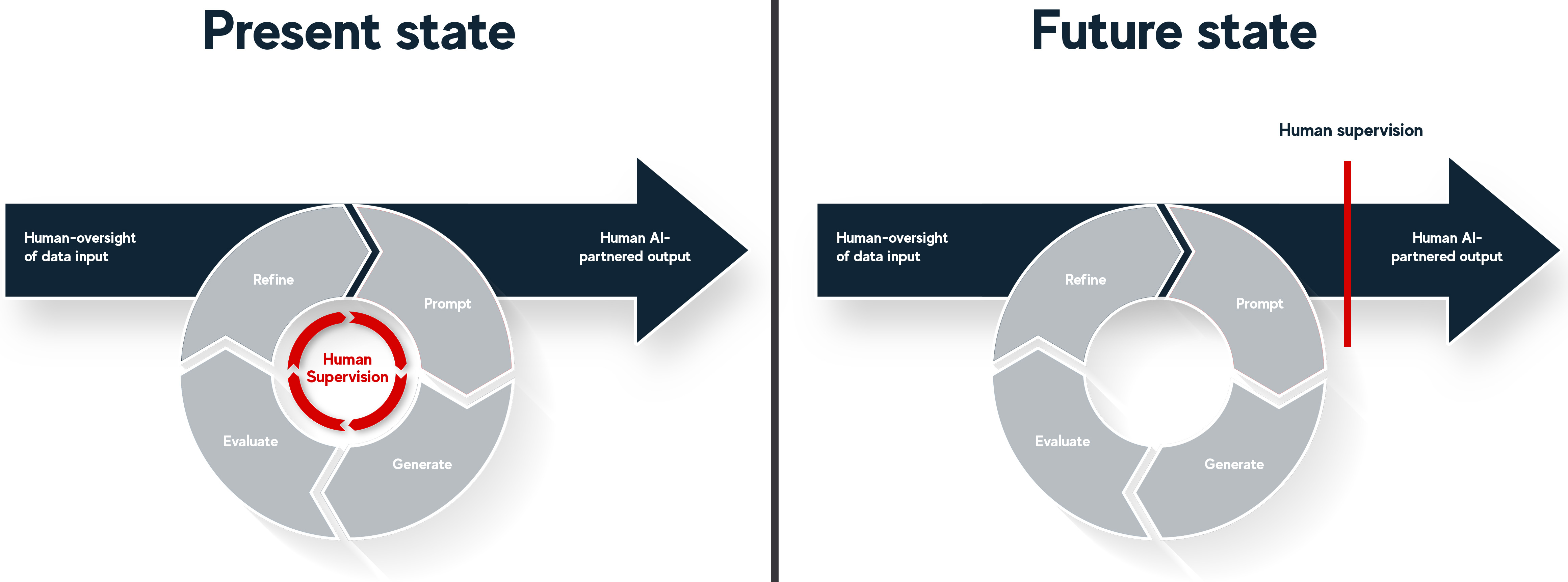

Integrating the human-in-the-loop into the model where humans add the most value, while accounting for the present technological capability of gen-AI (Fig. 2), requires human supervision and input in prompting, generation, evaluation, and refinement of generated content. One example from our context is a TSP and an SME partnering with gen-AI to develop a process flowchart for the United States Environmental Impact Assessment process. In this example, the SME provided original content supported by existing information available on the internet (human oversight of data input). The TSP wrote a ChatGPT prompt asking it to create an XML file for the flowchart (prompting). The gen-AI undertook the generation. The SME handled evaluation and refinement, with some looping back to the TSP for re-prompting and to the gen-AI for regeneration. Lastly, the TSP used the XML file to produce a vector image, which they then enhanced (human oversight of output). This example shows human oversight of input and output, with ongoing supervision throughout the cyclical refinement process.

FIG. 2: The present and future state of the cyclical model for human-AI partnership showing the human-in-the-loop

Yet, given the rapid progress of gen-AI tools, it is not sufficient to develop a human-in-the-loop model for the current generation of tools alone. We must look to the future and consider how innovations may reshape this process. Future gen-AI could complete these tasks without the same level of human supervision. Tools such as AutoGPT (Marr, 2023) can use natural language processing techniques to interpret language cues and generate content, reducing the need for explicit human-generated prompts. Likewise, such tools may use self-supervised learning techniques to evaluate the quality of their generated content without human intervention, learning from their mistakes and adjusting their performance accordingly. Finally, these tools will likely use reinforcement learning techniques to improve their capabilities over time, learning from feedback provided by their own evaluations and adjusting parameters to improve their generated content. While current models of gen-AI require human supervision in various aspects, future gen-AI has the potential to perform many of these tasks without human intervention, making it more efficient and less reliant on direct human supervision. But what does this mean for our human-in-the-loop model? Regardless of these future automations and the reduction in human supervision, we advocate for a minimum level of human involvement. For example, when we ask these tools to create a course, we must consider our own personal integrity and that of our institution and, more broadly, the ethical ramifications. As such, we propose that, as a minimum, there must be a human-in-the-loop at two stages: input and output. The critical characteristic in both the present and future states is the role of the human-in-the-loop. Regardless of the capabilities of the technology, there must be human intervention to ensure academic creativity, integrity, and rigor when developing courses.
Additionally, course outcomes should prepare graduates for an unknown future, and humans are the agents best positioned in the process to achieve this. The difference is that in the present state, human supervision of generated content occurs continually throughout the process, whereas in the future state the human would likely act as a gatekeeper prior to output. Both models leverage the strengths of human and nonhuman actors.
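The contrast between the present and future states can be expressed as a question of which stages of the cyclical model carry a human checkpoint. The sketch below is a hypothetical encoding, not a tool used in this case study: the `Stage` names follow steps (1) to (6) of Fig. 1, and `PRESENT_STATE`, `FUTURE_STATE`, `human_checkpoints`, and `meets_minimum` are names introduced purely for illustration. The check simply tests whether a proposed workflow keeps a human at input and output, the two stages proposed above as the minimum.

```python
from enum import Enum
from typing import FrozenSet, Iterable, List


class Stage(Enum):
    INPUT = 1
    PROMPT = 2
    GENERATION = 3
    EVALUATION = 4
    REFINEMENT = 5
    OUTPUT = 6


# Present state (hypothetical encoding): human supervision at every stage.
PRESENT_STATE: FrozenSet[Stage] = frozenset(Stage)

# Future state: humans at input and output only, with the output checkpoint
# acting as a gatekeeper before anything is released.
FUTURE_STATE: FrozenSet[Stage] = frozenset({Stage.INPUT, Stage.OUTPUT})

# The proposed minimum coincides with the future-state checkpoints.
MINIMUM_HUMAN_STAGES: FrozenSet[Stage] = FUTURE_STATE


def human_checkpoints(human_stages: Iterable[Stage]) -> List[str]:
    """List the stages that have a human checkpoint, in cycle order."""
    return [s.name for s in sorted(set(human_stages), key=lambda s: s.value)]


def meets_minimum(human_stages: Iterable[Stage]) -> bool:
    """Return True if a human is in the loop at least at input and output."""
    return MINIMUM_HUMAN_STAGES <= set(human_stages)


if __name__ == "__main__":
    print(human_checkpoints(PRESENT_STATE))
    print(human_checkpoints(FUTURE_STATE))  # ['INPUT', 'OUTPUT']
    # A workflow that releases model output without a human gatekeeper fails.
    print(meets_minimum({Stage.INPUT, Stage.PROMPT}))  # False
```

Representing checkpoints as a set makes the proposed minimum a subset test, so any richer present-state workflow still satisfies it while a fully automated pipeline does not.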

In both the current and future versions of the human-in-the-loop model, we present a simplified view of how human and machine interactions occur. We acknowledge that human–machine interaction is not a simple duality; instead, the various human roles are enacted by TSP and SME actors, depending on the situation and context. Moreover, given the varying capabilities of an ever-expanding range of gen-AI products, we acknowledge that gen-AI can fit within a multitude of roles (e.g., SME, codesign and brainstorming partner, or media creator) within the human-in-the-loop framework. Further work is required to fully understand these relationships and the dynamics they create.

4.3 Considerations for Integrating Gen-AI as a Partner

Introducing another “partner” into our collaborative course development process forced us to reconsider existing team dynamics. Roles and responsibilities within this process can be an area of contention: a lack of clarity around the role of TSPs can give rise to conflict between collaborators (Mueller et al., 2022), and successful relationship building is key to the success of SME and TSP partnerships (McDonald et al., 2022). Adding another partner into this mix, in the form of gen-AI, has the potential to compound this problem further.

We therefore sought to establish a structure around the way this technology is integrated into the collaboration (Pollard, 2022). The critical challenge was delineating roles and responsibilities that included gen-AI. Yet, under the human-in-the-loop model, gen-AI for the most part does not replace or unbundle any of the existing roles. In many ways gen-AI is in fact an enabler, supporting those in existing roles to work more efficiently or to develop artifacts of a higher quality than they could on their own. In this way we see the technology as augmenting human capability within existing roles and responsibilities. Gen-AI draws on and aggregates expertise far faster and at a greater scale than humans can; however, the work of synthesis across these areas still needs to be done by humans, with gen-AI streamlining the process of collecting knowledge. As such, we suggest that adding gen-AI to the collaborative course development process is unlikely to further muddy the waters of roles and responsibilities. Yet, to ensure ongoing clarity of roles, a clear structure for those operating within the collaborative development process is essential.

One of the most effective means of establishing structure around individuals' roles and responsibilities when integrating gen-AI as a partner is to set guiding principles that clearly bound the role of these tools in collaborative course development. To ground gen-AI use in our own model, we adopted five principles drawn from our experiences as learning designers using gen-AI, from the human-in-the-loop model, and from the existing literature. These five principles are (1) human oversight of the input and output of AI-generated content (i.e., the human-in-the-loop); (2) transparency about how gen-AI is used, for all stakeholders, including students; (3) academic and research integrity; (4) ethical use with respect to privacy, consent, and unintended consequences, such as reinforcing biases or perpetuating inequalities; and (5) using gen-AI only to improve the efficiency of tasks or to support the enhancement of learning experiences, rather than to replace SME or TSP roles.

Beyond these principles there is a need for clear guidelines and communication to ensure everyone understands their roles and responsibilities in relation to gen-AI. This will be context dependent, but given the risk of conflict arising from poor communication (Mueller et al., 2022), this needs to be prioritized as part of a structural plan towards the integration of gen-AI. Finally, the structures to support the gen-AI partnership and guiding principles about its enactment highlight the importance of training and support for team members to effectively collaborate with gen-AI systems.

We are still in the early stages of gen-AI massification, where early adoption gives way to adoption by the majority (Granić & Marangunić, 2019). Given the potential for capability building that arises from the collaborative course development model (White et al., 2020), we must ensure all relevant stakeholders are adequately skilled and knowledgeable about gen-AI opportunities, risks, and rewards. Moreover, ongoing capability development around the functionality of gen-AI tools has the potential to reduce the chance of ethical misconduct through informed practice by users.

4.4 The Rewards and Risks of a Gen-AI Partnership

Based on our experiences integrating gen-AI as a partner into the collaborative course development process, we observe that it can provide opportunities to create efficiencies in existing processes and afford new possibilities. However, with these potential rewards come risks, should enactment of the partnership not be carefully considered.

The rewards of integrating gen-AI as a partner come in the form of the efficiencies and enhancements it can enable. Of particular interest to institutions will be gen-AI's potential to increase productivity, thereby reducing the workload implications of course development or increasing the speed-to-market of new “products.” Where collaborative course development occurs in an intensive environment, this increase in productivity may help mitigate some of the previously identified concerns around academic workload and competing priorities (McInnes et al., 2020). Increased speed-to-market, in turn, may enable institutions to compete more easily in the microcredential space, where courses (single semester-long units of study within a degree program) often need to be developed rapidly to fill a market need. In both scenarios, integrating gen-AI as a partner can help create more sustainable models for course development that require less substantial investment in new technologies or resources.

Another key advantage of integrating gen-AI is the opportunity to explicitly facilitate professional development around these emerging technologies. The high-impact capability building of the collaborative course development process helps TSPs create highly scaffolded environments in which they can empower SMEs to learn experientially how gen-AI tools can be used for efficiencies and enhancements in the design and development of courses (White et al., 2020). Through effective integration and supported capability building, we can help bridge the gap in the adoption of technologies for course design (Bennett et al., 2015), supporting SMEs in prompt engineering, ethical use of gen-AI, and curation of content, and helping them better understand how gen-AI affects their practice. Given the efficiencies gen-AI tools provide and their ease of use, the technology acceptance model (Davis, 1989) suggests that their level of acceptance is likely to be high. In the collaborative course development process, TSPs are a likely enabler of the successful adoption of these new technologies by SMEs (Tay et al., 2023).

Similarly, gen-AI partnerships can increase the capability of human partners, enabling them to create improved learning experiences that would otherwise be out of reach due to time constraints or limited capability. In this way, gen-AI can empower human actors to create more engaging learning experiences, such as interactives, case-study-based learning activities, or branching scenarios, and to generate artifacts such as code, graphics, or animations (Airey et al., 2023).

The key risk of integrating gen-AI into the collaborative course development model is that it may be used to replace the diversity of human expertise and lived teaching and learning experience present in the process. Our model places a human in the loop to maximize the affordances of human and nonhuman actors and to mitigate this risk. Without this type of safeguard, however, gen-AI risks being used as an unbundling agent (White et al., 2020). In this scenario, the human, whether the SME or the TSP, is removed from or minimized in the collaborative course development process. Where this occurs with SMEs, we risk divesting them of the supported experiential learning that provides high-impact capability building (Macfarlane, 2011; McInnes et al., 2020; Olesova & Campbell, 2019).

Another risk that arises whenever we consider technological adoption is resistance to change, with staff continuing to use practices and technologies with which they are more familiar (Mueller et al., 2022). This is a concern when planning a future collaborative course development model that relies on the efficiencies afforded by gen-AI. We must ensure that staff have the necessary mindset and attitudes toward adopting this technology, and that its adoption remains a collaboration between SMEs and TSPs (Tay et al., 2023). Beyond these risks specific to the collaborative course development model, we must also be aware of the broader risks of gen-AI in higher education, namely those relating to ethics, integrity, student perception, institutional reputation, and the privacy and security of individual and institutional data. To realize the potential of gen-AI to transform the collaborative course development model, we must mitigate these risks and so maximize the rewards.

5. CONCLUSION

We now live in a gen-AI-enabled world in which few, if any, elements of our digital society lie beyond the reach of gen-AI's transformative potential. As students, educators, and institutions grapple with the logistics of integrating gen-AI into their practice, we must envisage how each facet of teaching and learning practice will be transformed. The collaborative course development process is no exception. We must proactively seek to influence this new human-AI partnership to ensure that it is enacted with the rigor we expect regarding ethics, integrity, and transparency. We have proposed a human-in-the-loop framework for integrating gen-AI into our work as TSPs and outlined some strategies to consider when implementing gen-AI. As we continue to develop courses with this new partner, it remains to be seen how our existing processes will be further reshaped by the efficiencies and enhancements that gen-AI affords. Future work should gather the experiences and perspectives of students and practitioners in the field on a human-AI partnership for developing courses.

ACKNOWLEDGMENT

This article has benefited from the contributions of the Learning Enhancement and Innovation gen-AI working group at the University of Adelaide. We thank them for their contributions and expertise.

REFERENCES

Airey, L., Carandang, M., Kulkarni, A., Nagy, S., & McInnes, R. (2023). AI as a partner in collaborative curriculum development: Possibilities and pitfalls. International Conference on Teaching with AI: Challenges, Opportunities and Strategies, Hong Kong.

AI Safety Summit. (2023, November 1). The Bletchley Declaration by countries attending the AI Safety Summit, 1–2 November 2023. https://www.gov.uk/government/publications/ai-safety-summit-2023-the-bletchley-declaration/the-bletchley-declaration-by-countries-attending-the-ai-safety-summit-1-2-november-2023

Amri, M. M., & Hisan, U. K. (2023). Incorporating AI tools into medical education: Harnessing the benefits of ChatGPT and Dall-E. Journal of Novel Engineering Science and Technology, 2(02), 34–39.

Australian Human Rights Commission. (2023, July 14). Utilising ethical AI in the Australian education system. Retrieved from https://humanrights.gov.au/sites/default/files/inquiry_into_the_use_of_generative_artificial_intelligence_in_the_australian_education_system_14_july_2023_0.pdf

Baidoo-Anu, D., & Owusu Ansah, L. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4337484

Bennett, S., Agostinho, S., & Lockyer, L. (2015). Technology tools to support learning design: Implications derived from an investigation of university teachers' design practices. Computers & Education, 81, 211–220. https://doi.org/10.1016/j.compedu.2014.10.016

Bennett, S., Agostinho, S., & Lockyer, L. (2017). The process of designing for learning: Understanding university teachers' design work. Educational Technology Research and Development, 65(1).

Boyer, E. L. (1990). Scholarship Reconsidered: Priorities of the Professoriate. Princeton, NJ: Princeton University Press.

Campbell, C., Porter, D. B., Logan-Fleming, D., & Jones, H. (2022). Scanning the Ed Tech Horizon: The 2021–2022 Contextualising Horizon Report.

Chen, Y., & Carliner, S. (2020). A special SME: An integrative literature review of the relationship between instructional designers and faculty in the design of online courses for higher education. Performance Improvement Quarterly. https://doi.org/10.1002/piq.21339

Cotton, D., Cotton, P., & Shipway, R. (2023). Chatting and Cheating: Ensuring Academic Integrity in the Era of ChatGPT. https://doi.org/10.35542/osf.io/mrz8h

Crawford, J., Cowling, M., & Allen, K.-A. (2023). Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). Journal of University Teaching and Learning Practice, 20(3). https://doi.org/10.53761/1.20.3.02

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008

Flyvbjerg, B. (2006). Five misunderstandings about case-study research. Qualitative Inquiry, 12(2), 219–245. https://doi.org/10.1177/1077800405284363

Granić, A., & Marangunić, N. (2019). Technology acceptance model in educational context: A systematic literature review. British Journal of Educational Technology, 50(5), 2572–2593. https://doi.org/10.1111/bjet.12864

Grove, J. (2023a). Academic minds ‘vital’ as fears grow over ‘out of control’ AI. Times Higher Education. Retrieved from https://www.timeshighereducation.com/news/academic-minds-vital-fears-grow-over-out-control-ai

Grove, J. (2023b). AI must acknowledge scientific uncertainty, says Nobel laureate. Times Higher Education. Retrieved from https://www.timeshighereducation.com/news/ai-must-acknowledge-scientific-uncertainty-says-nobel-laureate

Halaweh, M. (2023). ChatGPT in education: Strategies for responsible implementation. Contemporary Educational Technology, 15(2). https://doi.org/10.30935/cedtech/13036

Halupa, C. (2019). Differentiation of roles: Instructional designers and faculty in the creation of online courses. International Journal of Higher Education, 8(1). https://doi.org/10.5430/ijhe.v8n1p55

Kehrwald, B. A., & Parker, B. (2019). Editorial 16.1: Implementing online learning, stories from the field. Journal of University Teaching & Learning Practice, 16(1). https://doi.org/10.53761/1.16.1.1

Khademi, A. (2023). Can ChatGPT and Bard generate aligned assessment items? A reliability analysis against human performance. Journal of Applied Learning and Teaching, 6(1). https://doi.org/10.37074/jalt.2023.6.1.28

Khalil, M., & Er, E. (2023). Will ChatGPT get you caught? Rethinking of plagiarism detection [Preprint]. EdArXiv. https://doi.org/10.35542/osf.io/fnh48

Macfarlane, B. (2011). The morphing of academic practice: Unbundling and the rise of the para-academic. Higher Education Quarterly, 65(1). https://doi.org/10.1111/j.1468-2273.2010.00467.x

Marr, B. (2023, April 24). Auto-GPT may be the strong AI tool that surpasses ChatGPT. Forbes. https://www.forbes.com/sites/bernardmarr/2023/04/24/auto-gpt-may-be-the-strong-ai-tool-that-surpasses-chatgpt/?sh=4e9f3e287640

Martin, F., & Bolliger, D. U. (2022). Designing online learning in higher education. In Handbook of Open, Distance and Digital Education (pp. 1–20). Springer Singapore. https://doi.org/10.1007/978-981-19-0351-9_72-1

McDonald, J., Elsayed-Ali, S., Bowman, K., & Rogers, A. A. (2022). Considering what faculty value when working with instructional designers and instructional design teams. Journal of Applied Instructional Design, 11(3), 41–56.

McDonald, J. K., Jackson, B. D., & Hunter, M. B. (2021). Understanding distinctions of worth in the practices of instructional design teams. Educational Technology Research and Development, 69(3). https://doi.org/10.1007/s11423-021-09995-2

McInnes, R., Aitchison, C., & Sloot, B. (2020). Building online degrees quickly: Academic experiences and institutional benefits. Journal of University Teaching and Learning Practice, 17(5). https://doi.org/10.53761/1.17.5.2

McInnes, R., & Kulkarni, A. (2023, October 10). Mastering generative AI: Crafting reusable prompts for effective learning design. THE Campus Learn, Share, Connect. https://www.timeshighereducation.com/campus/mastering-generative-ai-crafting-reusable-prompts-effective-learning-design

Molenda, M. (2003). In search of the elusive ADDIE model. Performance Improvement, 42(5), 34–37. https://doi.org/10.1002/pfi.4930420508

Mosqueira-Rey, E., Hernández-Pereira, E., Alonso-Ríos, D., Bobes-Bascarán, J., & Fernández-Leal, Á. (2023). Human-in-the-loop machine learning: A state of the art. Artificial Intelligence Review, 56(4), 3005–3054. https://doi.org/10.1007/s10462-022-10246-w

Mueller, C. M., Richardson, J., Watson, S. L., & Watson, W. (2022). Instructional designers' perceptions & experiences of collaborative conflict with faculty. TechTrends, 66(4). https://doi.org/10.1007/s11528-022-00694-0

Olesova, L., & Campbell, S. (2019). The impact of the cooperative mentorship model on faculty preparedness to develop online courses. Online Learning, 23(4). https://doi.org/10.24059/olj.v23i4.2089

Patten, S. (2023, August 22). AI use by uni students three times rate of workforce. Australian Financial Review. https://www.afr.com/work-and-careers/education/how-universities-are-embracing-ai-20230822-p5dyk4

Richardson, J. C., Ashby, I., Alshammari, A. N., Cheng, Z., Johnson, B. S., Krause, T. S., Lee, D., Randolph, A. E., & Wang, H. (2019). Faculty and instructional designers on building successful collaborative relationships. Educational Technology Research and Development, 67(4). https://doi.org/10.1007/s11423-018-9636-4

Rudolph, J., Tan, S., & Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning & Teaching, 6(1). https://doi.org/10.37074/jalt.2023.6.1.9

Sabzalieva, E., & Valentini, A. (2023). ChatGPT and Artificial Intelligence in Higher Education: Quick Start Guide. United Nations Educational, Scientific and Cultural Organization (UNESCO) and the UNESCO International Institute for Higher Education in Latin America and the Caribbean (IESALC). https://www.iesalc.unesco.org/wp-content/uploads/2023/04/ChatGPT-and-Artificial-Intelligence-in-higher-education-Quick-Start-guide_EN_FINAL.pdf

Siemens, G., Palmer, E., Hunter, N., & Tan, K. L. (2023). HERGA Seminar: How Does Artificial Intelligence Fit in Education. Presented at the Higher Education and Research Group Adelaide.

Sok, S., & Heng, K. (2023). ChatGPT for education and research: A review of benefits and risks. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4378735

Tahiru, F. (2021). AI in education: A systematic literature review. Journal of Cases on Information Technology (JCIT), 23(1), 1–20.

Tay, A. Z., Huijser, H., Dart, S., & Cathcart, A. (2023). Learning technology as contested terrain: Insights from teaching academics and learning designers in Australian higher education. Australasian Journal of Educational Technology, 39(1), 56–70. https://doi.org/10.14742/ajet.8179

Thompson, P. (2017). Foundations of Educational Technology. Oklahoma State University Libraries.

Wenger, E. (1998). Communities of Practice: Learning, Meaning, and Identity. Cambridge, UK: Cambridge University Press. https://doi.org/10.1017/CBO9780511803932

Whitchurch, C. (2012). The concept of third space. Reconstructing identities in higher education: The rise of ‘third space’ professionals (Vol. 64, pp. 99–117), Routledge.

White, S., White, S., & Borthwick, K. (2020). MOOCs, learning designers and the unbundling of educator roles in higher education. Australasian Journal of Educational Technology, 36(5), 14. https://doi.org/10.14742/ajet.6111

Wu, T., He, S., Liu, J., Sun, S., Liu, K., Han, Q. L., & Tang, Y. (2023). A brief overview of ChatGPT: The history, status quo and potential future development. IEEE/CAA Journal of Automatica Sinica, 10(5), 1122–1136.

Yin, R. K. (2017). Case study research and applications: Design and methods. Newbury Park, CA: Sage Publications.

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education—Where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27.

Zhai, X., Chu, X., Chai, C. S., Jong, M. S. Y., Istenic, A., Spector, M., Liu, J., Yuan, J., & Li, Y. (2021). A review of artificial intelligence (AI) in education from 2010 to 2020. Complexity, 2021, Article ID 8812542, 1–18. https://doi.org/10.1155/2021/8812542
